Course Overview

Course Description

This course explores computing beyond software. Students will go a level deeper to better understand the hardware, and see how computers are built and programmed. It aims to help students become better programmers by teaching the concepts underlying all computer systems. The course integrates many of the topics covered in other computer science courses, including algorithms, computer architecture, operating systems, and software engineering.

In this course, students will explore the entire system including hardware, operating systems, compilers, and networks. We will emphasize practical learning through the development and evaluation of real programs on actual machines. Key topics covered include data representations, machine-level representations of C programs, processor architecture, and program optimizations. Additionally, the course delves into the memory hierarchy, exceptional control flow (like exceptions, interrupts, and Unix signals), virtual memory, system-level I/O, basic network programming, and concurrent programming. These concepts are reinforced through engaging, hands-on assignments, providing a comprehensive understanding of computer systems. By the end of the course, students will develop a strong understanding of the relationships between the architecture of computers and software that runs on them.

Learning Outcomes

By the end of the course, you will be able to:

- Understand how hardware and software systems are built, and work together

- Explain how key abstraction layers in computers are implemented, and how higher level representations are translated into machine language

- Write assembler and machine code

- Design and build a general purpose computer system, including hardware and software, from the ground up

Instructor

- David Walter

- david@kibo.school

Please contact on Discord first with questions about the course.

Live Class Time

Note: all times are shown in GMT.

- Wednesdays at 5:30 PM - 7:00 PM GMT

Office Hours

- Instructor: Fridays at 7:00 PM - 8:00 PM GMT

Core Reading List

Nisan, N., Schocken S. (2021). The Elements of Computing Systems, second edition: Building a Modern Computer from First Principles. MIT Press

Carpinelli, John D. (2023). An Animated Introduction to Digital Logic Design. NJIT Library

Supplementary Reading List

- Amoroso, E. Amoroso, M. From Gates to Apps, Silicon Press 2013

- https://jvns.ca/blog/learn-how-things-work/

- http://www.bottomupcs.com/

- https://jvns.ca/blog/2016/09/11/rustconf-keynote/

- https://leaningtech.com/webvm-server-less-x86-virtual-machines-in-the-browser/

- https://pages.cs.wisc.edu/~remzi/OSTEP/

Live Classes

Each week, you will have a live class (see course overview for time). You are required to attend the live class sessions.

Video recordings and resources for the class will be posted after the classes each week. If you have technical difficulties or are occasionally unable to attend the live class, please be sure to watch the recording as quickly as possible so that you do not fall behind.

Assessments

Your overall course grade is composed of these weighted elements:

- 10% Participation

- 65% Assignments

- 25% Midterm Project

Assessment List

| Assignment | Due Date (by 11pm GMT) | Grade Weight | Late Submission Possible |

|---|---|---|---|

| Participation | Live Class Sessions | 10% | No |

| Assignment 1 | Monday Jan 15, 2024 | 9% | Yes |

| Assignment 2 | Monday Jan 22, 2024 | 9% | Yes |

| Assignment 3 | Monday Jan 29, 2024 | 9% | Yes |

| Assignment 4 | Monday February 5, 2024 | 9.5% | Yes |

| Midterm Project | Monday, February 19, 2024 | 25% | Yes |

| Assignment 5 | 9.5% | Yes | |

| Assignment 6 | 9.5% | Yes | |

| Assignment 7 | 9.5% | Yes |

Participation

Active engagement in the Live Class sessions is critical for this course. Students should think of these sessions as interactive engagements rather than lectures. As such, you are expected to participate by answering questions, completing the exercises, etc.

Assignments

Most weeks you will be given an Assignment, where you'll practice the concepts covered in the readings and lessons. The assignments let you practice with the topics you covered that week, explore applications and connections, and check your own understanding of the material.

Assignments will be distributed via GitHub classroom, and you will be asked to write significant code. Upon completion of the assignments, they will be submitted to Gradescope for grading.

Projects

You will have a midterm project and a final project in this course. Projects are larger in scale and will give you an opportunity to work with larger amounts of code to solve more complex problems. You will be given two weeks to complete each project. They represent a significant portion of your grade, and so it is important that you start these projects as early as possible.

Late Policy

You are expected to submit your work by the deadline. Each assignment page will include instructions and a link to submit.

The table above specifies the assignments for which late submission is possible. Any work submitted late will incur penalties in accordance with Kibo's Late Work Policy.

Getting Help

If you have any trouble understanding the concepts or stuck on a problem, we expect you to reach out for help!

Below are the different ways to get help in this class.

Discord Channel

The first place to go is always the course's help channel on Discord. Share your question there so that your Instructor and your peers can help as soon as we can. Peers should jump in and help answer questions (see the Getting and Giving Help sections for some guidelines).

Message your Instructor on Discord

If your question doesn't get resolved within 24 hours on Discord, you can reach out to your instructor directly via Discord DM or Email.

Office Hours

There will be weekly office hours with your Instructor and your TA. Please make use of them!

Tips on Asking Good Questions

Asking effective questions is a crucial skill for any computer science student. Here are some guidelines to help structure questions effectively:

-

Be Specific:

- Clearly state the problem or concept you're struggling with.

- Avoid vague or broad questions. The more specific you are, the easier it is for others to help.

-

Provide Context:

- Include relevant details about your environment, programming language, tools, and any error messages you're encountering.

- Explain what you're trying to achieve and any steps you've already taken to solve the problem.

-

Show Your Work:

- If your question involves code, provide a minimal, complete, verifiable, and reproducible example (a "MCVE") that demonstrates the issue.

- Highlight the specific lines or sections where you believe the problem lies.

-

Highlight Error Messages:

- If you're getting error messages, include them in your question. Understanding the error is often crucial to finding a solution.

-

Research First:

- Demonstrate that you've made an effort to solve the problem on your own. Share what you've found in your research and explain why it didn't fully solve your issue.

-

Use Clear Language:

- Clearly articulate your question. Avoid jargon or overly technical terms if you're unsure of their meaning.

- Proofread your question to ensure it's grammatically correct and easy to understand.

-

Be Patient and Respectful:

- Be patient while waiting for a response.

- Show gratitude when someone helps you, and be open to feedback.

-

Ask for Understanding, Not Just Solutions:

- Instead of just asking for the solution, try to understand the underlying concepts. This will help you learn and become more self-sufficient in problem-solving.

-

Provide Updates:

- If you make progress or find a solution on your own, share it with those who are helping you. It not only shows gratitude but also helps others who might have a similar issue.

Remember, effective communication is key to getting the help you need both in school and professionally. Following these guidelines will not only help you in receiving quality assistance but will also contribute to a positive and collaborative community experience.

Screenshots

It’s often helpful to include a screenshot with your question. Here’s how:

- Windows: press the Windows key + Print Screen key

- the screenshot will be saved to the Pictures > Screenshots folder

- alternatively: press the Windows key + Shift + S to open the snipping tool

- Mac: press the Command key + Shift key + 4

- it will save to your desktop, and show as a thumbnail

Giving Help

Providing help to peers in a way that fosters learning and collaboration while maintaining academic integrity is crucial. Here are some guidelines that a computer science university student can follow:

-

Understand University Policies:

- Familiarize yourself with Kibo's Academic Honesty and Integrity Policy. This policy is designed to protect the value of your degree, which is ultimately determined by the ability of our graduates to apply their knowledge and skills to develop high quality solutions to challenging problems--not their grades!

-

Encourage Independent Learning:

- Rather than giving direct answers, guide your peers to resources, references, or methodologies that can help them solve the problem on their own. Encourage them to understand the concepts rather than just finding the correct solution. Work through examples that are different from the assignments or practice problems provide in the course to demonstrate the concepts.

-

Collaborate, Don't Complete:

- Collaborate on ideas and concepts, but avoid completing assignments or projects for others. Provide suggestions, share insights, and discuss approaches without doing the work for them or showing your work to them.

-

Set Boundaries:

- Make it clear that you're willing to help with understanding concepts and problem-solving, but you won't assist in any activity that violates academic integrity policies.

-

Use Group Study Sessions:

- Participate in group study sessions where everyone can contribute and learn together. This way, ideas are shared, but each individual is responsible for their own understanding and work.

-

Be Mindful of Collaboration Tools:

- If using collaboration tools like version control systems or shared documents, make sure that contributions are clear and well-documented. Clearly delineate individual contributions to avoid confusion.

-

Refer to Resources:

- Direct your peers to relevant textbooks, online resources, or documentation. Learning to find and use resources is an essential skill, and guiding them toward these materials can be immensely helpful both in the moment and your career.

-

Ask Probing Questions:

- Instead of providing direct answers, ask questions that guide your peers to think critically about the problem. This helps them develop problem-solving skills.

-

Be Transparent:

- If you're unsure about the appropriateness of your assistance, it's better to seek guidance from professors or teaching assistants. Be transparent about the level of help you're providing.

-

Promote Honesty:

- Encourage your peers to take pride in their work and to be honest about the level of help they received. Acknowledging assistance is a key aspect of academic integrity.

Remember, the goal is to create an environment where students can learn from each other (after all, we are better together) while we develop our individual skills and understanding of the subject matter.

Academic Integrity

When you turn in any work that is graded, you are representing that the work is your own. Copying work from another student or from an online resource and submitting it is plagiarism. Using generative AI tools such as ChatGPT to help you understand concepts (i.e., as though it is your own personal tutor) is valuable. However, you should not submit work generated by these tools as though it is your own work. Remember, the activities we assign are your opportunity to prove to yourself (and to us) that you understand the concepts. Using these tools to generate answers to assignments may help you in the short-term, but not in the long-term.

As a reminder of Kibo's academic honesty and integrity policy: Any student found to be committing academic misconduct will be subject to disciplinary action including dismissal.

Disciplinary action may include:

- Failing the assignment

- Failing the course

- Dismissal from Kibo

For more information about what counts as plagiarism and tips for working with integrity, review the "What is Plagiarism?" Video and Slides.

The full Kibo policy on Academic Honesty and Integrity Policy is available here.

Course Tools

In this course, we are using these tools to work on code. If you haven't set up your laptop and installed the software yet, follow the guide in https://github.com/kiboschool/setup-guides.

- GitHub is a website that hosts code. We'll use it as a place to keep our project and assignment code.

- GitHub Classroom is a tool for assigning individual and team projects on Github.

- Visual Studio Code is an Integrated Development Environment (IDE) that has many plugins which can extend the features and capabilities of the application. Take time to learn how ot use VS Code (and other key tools) because you will ultimately save enormous amounts of time.

- Anchor is Kibo's Learning Management System (LMS). You will access your course content through this website, track your progress, and see your grades through this site.

- Gradescope is a grading platform. We'll use it to track assignment submissions and give you feedback on your work.

- Woolf is our accreditation partner. We'll track work there too, so that you get credit towards your degree.

Introduction to Computer Systems

Welcome to the first week of Computer Systems! This week, we will begin our exploration of the essential principles of computer systems, covering how we represent data using bits and bytes, exploring the architecture that makes up a computer, diving into the mechanics of how computers perform integer arithmetic, understanding the role of operating systems, acquainting ourselves with the C language (common programming language used for lower-level programming), and unraveling the art of debugging.

Topics Covered

- Bits, bytes, and data representation

- Hardware organization of a system

- Integer representation and arithmetic

- Operating System Responsibilities

- Introduction to C (gcc)

- Using a debugger (gdb)

Learning Outcomes

After this week, you will be able to:

- Understand the basic components of a computer system and their roles in data processing.

- Describe the von Neumann architecture and the fetch-execute cycle.

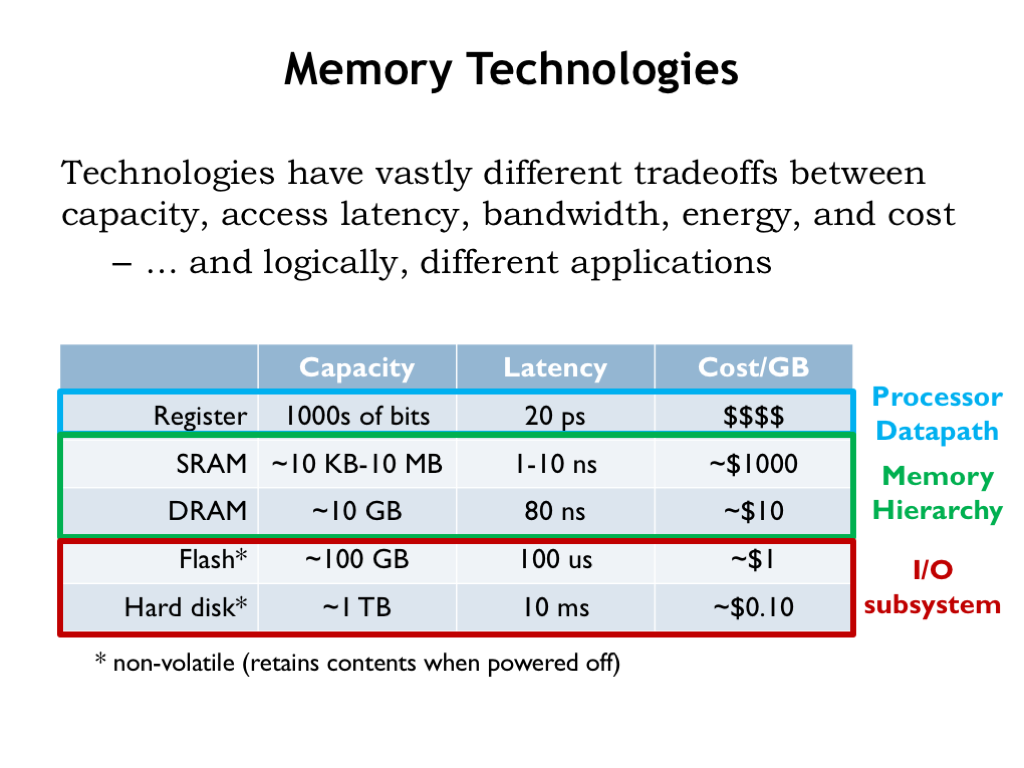

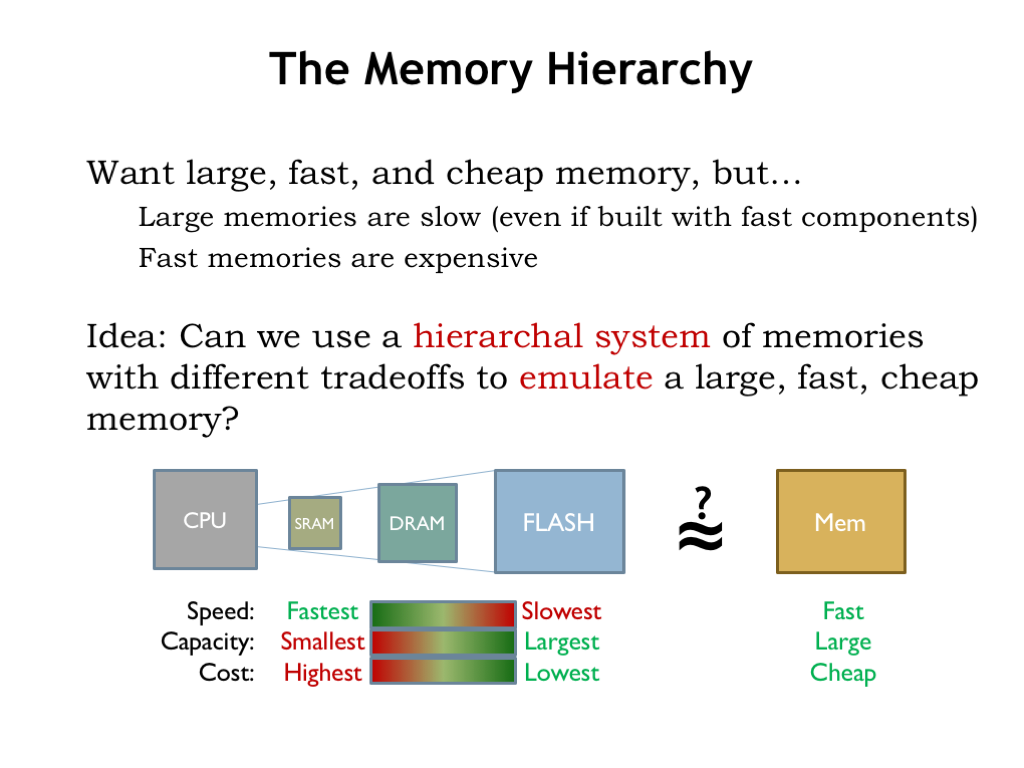

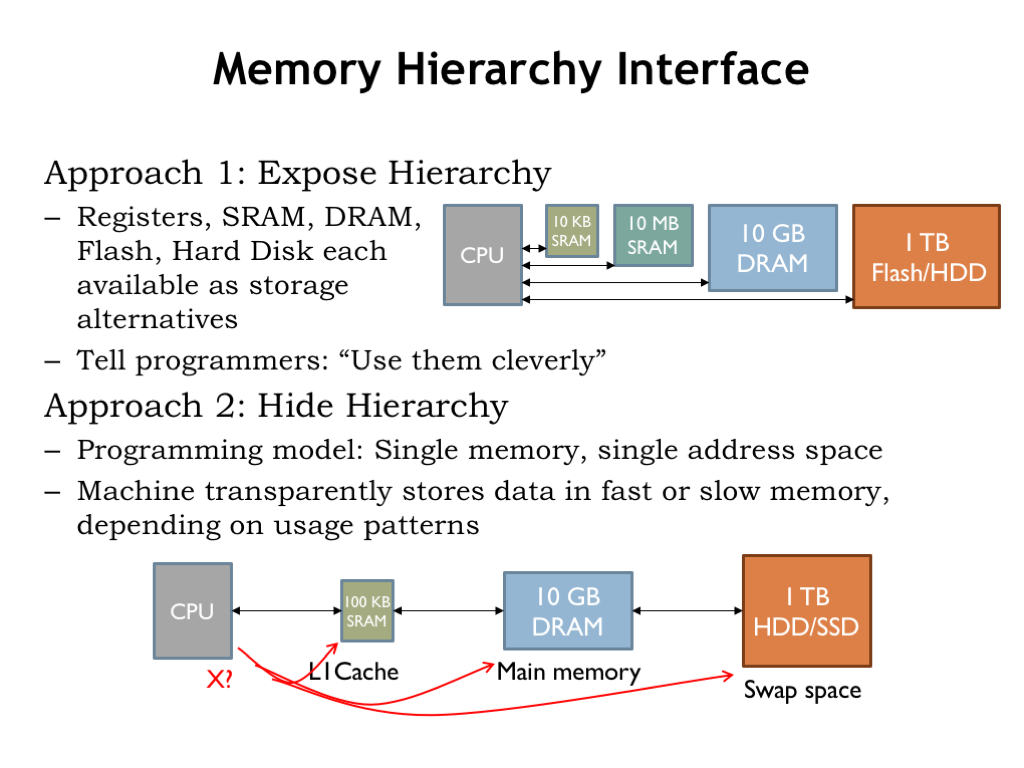

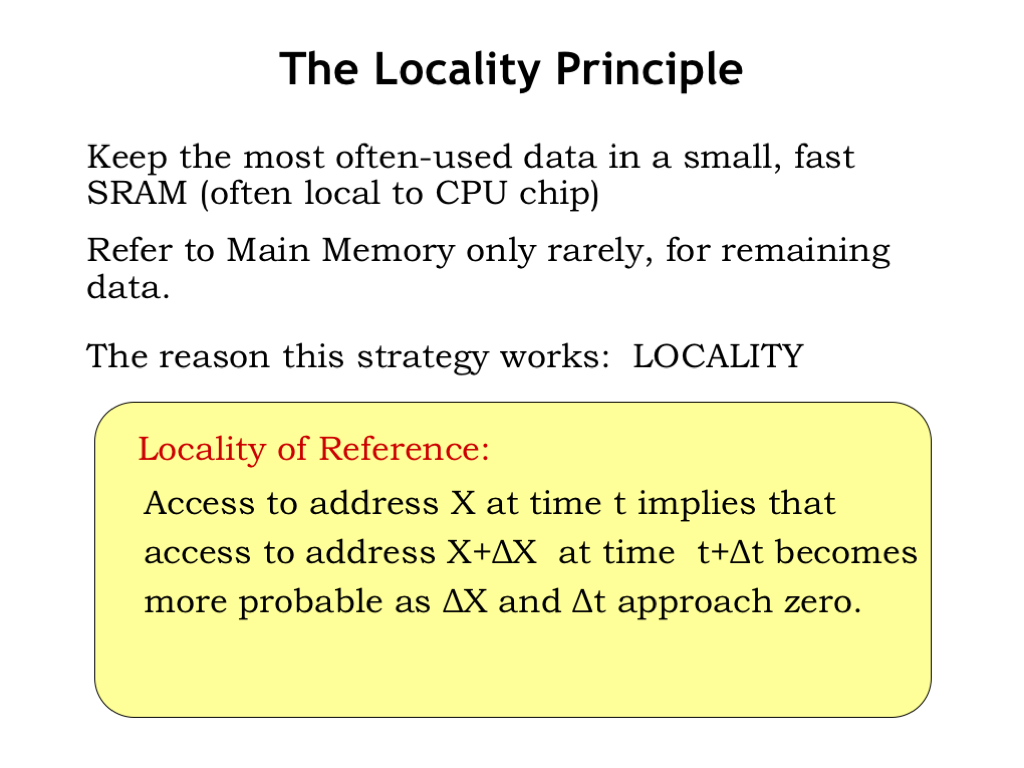

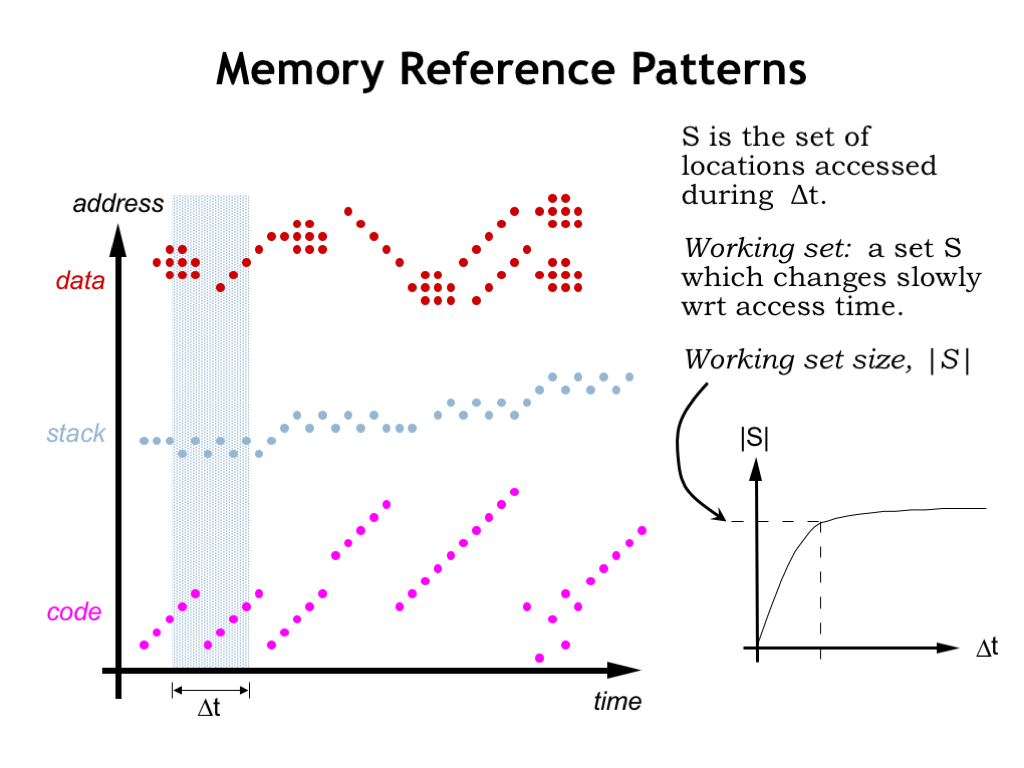

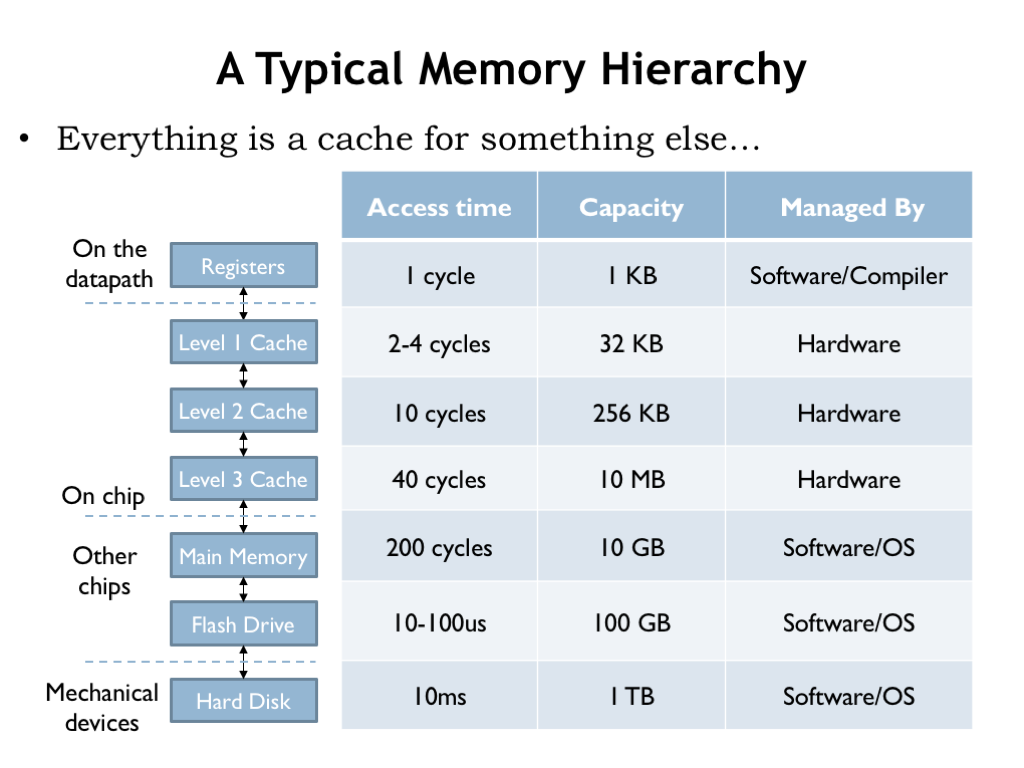

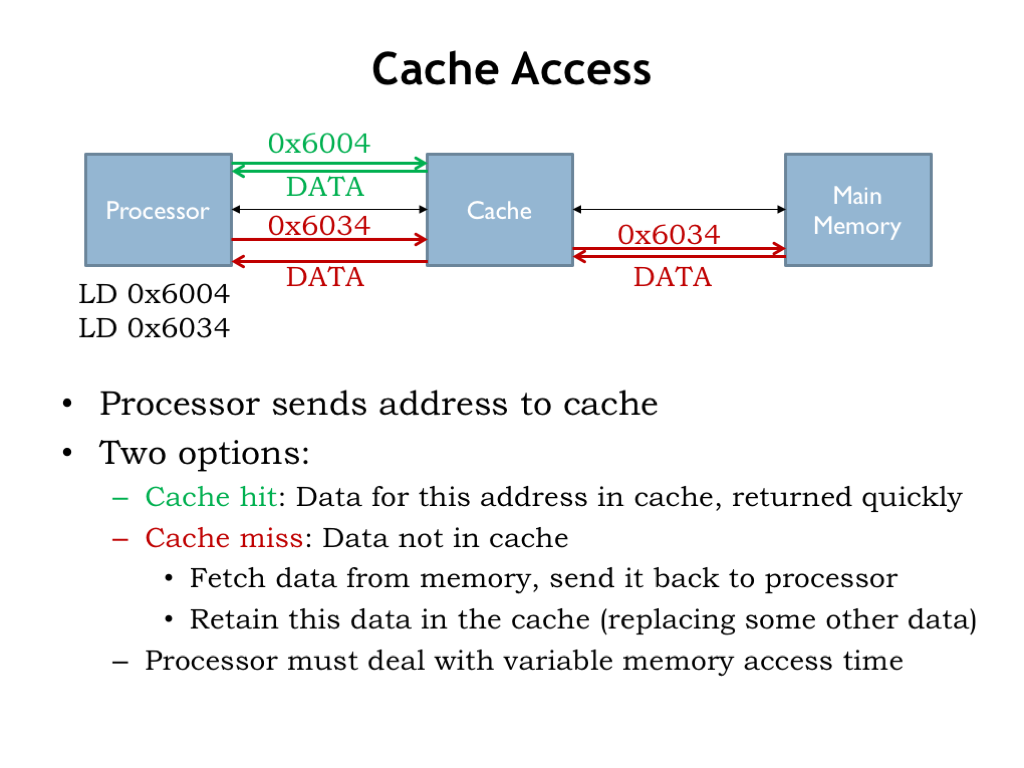

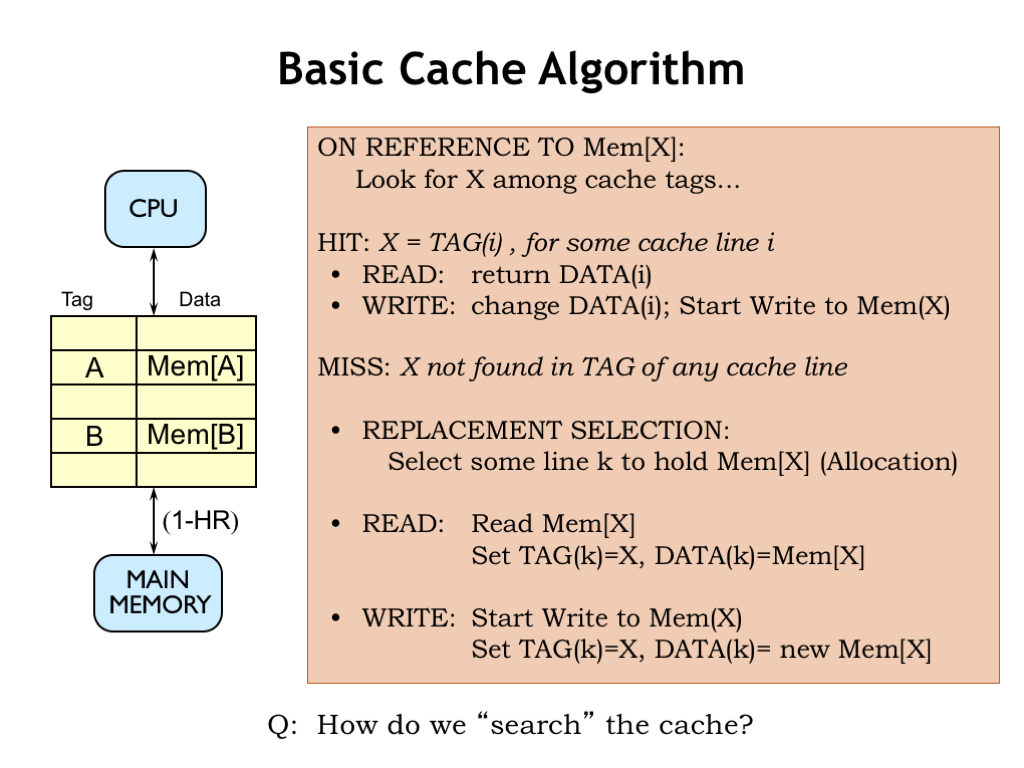

- Explain the hierarchical structure of memory and its impact on program performance.

- Analyze the various levels of programming languages and their relationships to computer hardware.

- Demonstrate proficiency in binary, hexadecimal, and decimal number systems.

- Convert between different number representations.

- Explain how characters and strings are represented in computer systems (ASCII, Unicode, etc.).

- Analyze bitwise operations and their applications in programming.

Bits, Bytes, and Data Representation

The Binary World

Every digital device speaks the language of bits, the tiniest units of data in computing. These binary digits, 0 and 1, are the foundation of all modern computer systems. A single bit is like a light switch—it can either be in the off position, represented by a '0', or in the on position, represented by a '1'. Despite their simplicity, when combined, bits have the power to represent any form of data—be it text, images, or sound.

❓ Test Your Knowledge

Consider a series of 8 light switches, each corresponding to a bit. How many unique patterns can you create with the switches being in either the on or off positions.

Bytes: Grouping Bits

While a bit represents a binary state, a byte, composed of 8 bits, is the standard unit for organizing and processing data. The 8 bits in a byte are enough to represent 256 different values, which can be anything from numbers to to floating point values to characters. Larger data measurements build upon bytes, with kibibytes (KiB), mebibytes (MiB), gibibytes (GiB), and so on. A KiB is 1024 bytes, a MiB is 1024 KiB (or 1024*1024 bytes), and so on.

You may be thinking, "I've always heard them referred to as kilobytes, megabytes, gigabytes, etc. What's with this kibi, mebi, gibi stuff?" Or you may be thinking, "why 1024 instead of 1000?". Well, you're correct to ask these questions? Historically, since computers are based on binary systems, we generally count in powers of 2, and 1024 is equal to 2^10. It also used to be the case that we used the prefixes kilo, mega, giga, etc. referring to these. But for marketing purposes, hard drive manufacturers stuck to counting bytes using a base ten system, claiming they just follow International System of Units, which created growing confusion among customers. To resolve the concerns, the idea of new prefixes for the base 2 counting was born (kibi, mibi, tebi, etc.) and standardized as IEEE 1541-2002. Many people haven't adopted the updated terminology and so you will hear both.

| Size in Bytes (Binary) | Binary Term (IEC Standard) | Size in Bytes (Decimal) | Decimal Term |

|---|---|---|---|

| 1 (2^0) | 1 Byte | 1 (10^0) | 1 Byte |

| 1,024 (2^10) | 1 Kibibyte (KiB) | 1,000 (10^3) | 1 Kilobyte (KB) |

| 1,048,576 (2^20) | 1 Mebibyte (MiB) | 1,000,000 (10^6) | 1 Megabyte (MB) |

| 1,073,741,824 (2^30) | 1 Gibibyte (GiB) | 1,000,000,000 (10^9) | 1 Gigabyte (GB) |

| 1,099,511,627,776 (2^40) | 1 Tebibyte (TiB) | 1,000,000,000,000 (10^12) | 1 Terabyte (TB) |

| 1,125,899,906,842,624 (2^50) | 1 Pebibyte (PiB) | 1,000,000,000,000,000 (10^15) | 1 Petabyte (PB) |

| 1,152,921,504,606,846,976 (2^60) | 1 Exbibyte (EiB) | 1,000,000,000,000,000,000 (10^18) | 1 Exabyte (EB) |

❓ Test Your Knowledge

If one book page contains approximately 2,000 characters, estimate the number of pages that can fit into 1MB of storage.

Encoding Characters: ASCII and Unicode

Characters on a computer are represented using character encoding standards. ASCII (American Standard Code for Information Interchange) was one of the first coding schemes, using 7 bits to represent 128 unique characters. However, with the rise of global communication, the need for more characters became evident. Unicode was introduced to resolve this, providing a comprehensive character set that supports most of the world’s writing systems.

ASCII Characters Unicode Characters

❓ Test Your Knowledge

Encode your name in both ASCII and Unicode, noting any differences in the process or results.

Integer Representation and Arithmetic

Computers use the binary number system, which consists of only two digits: 0 and 1. This system, i.e. "Base 2", is the foundation for storing and manipulating data in computing devices. This section covers the binary representation of numbers, including both unsigned and signed integers, and explains binary arithmetic operations such as addition and subtraction. Even though you have previously covered this material in Mathematical Thinking, and will do so again in Discrete Mathematics, we will review it here.

Binary Representation of Integers

Unsigned integers are binary numbers that represent only positive values or zero. For example, in an 8-bit unsigned

integer, the binary number 00000001 represents the decimal value 1, while 11111111 represents 255.

The value of an unsigned binary number is calculated by summing the powers of 2 for each bit position that contains a 1.

For instance, 1010 in binary (assuming a 4-bit system) is equal to (2^3 + 2^1 = 8 + 2 = 10) in decimal.

Sometimes it helps to visualize this as a table. Where each column, starting from the right is a power of 2. The presence of a 0 or 1 in the binary representation indicates if we should include that column in the calculation.

| Powers of 2 | 2^3 | 2^2 | 2^1 | 2^0 |

|---|---|---|---|---|

| Place | Eights | Fours | Twos | Ones |

| * | * | * | * | |

| Binary Value | 1 | 0 | 1 | 0 |

| = | = | = | = | |

| Product | 8 | 0 | 2 | 0 |

And finally, 8 + 2 = 10.

For comparison, the decimal system behaves the same way, as in the example below.

| Powers of 10 | 10^3 | 10^2 | 10^1 | 10^0 |

|---|---|---|---|---|

| Place | Thousands | Hundreds | Tens | Ones |

| * | * | * | * | |

| Decimal Value | 4 | 0 | 3 | 2 |

| = | = | = | = | |

| Product | 4000 | 0 | 30 | 2 |

❓ Test Your Knowledge

Base 2 (Binary) and base 10 (Decimal) systems aren't special. In fact, we could have base 3 or base 4 systems, or even Base 8349 systems. In fact, in computer science, we commonly use base 8 (Octal) or base 16 (hexadecimal) systems also. Given the octal value 2631, what is this in decimal?

Representing Negative Values

So how do we represent negative numbers in binary? When humans do math, we usually just throw a negative sign in front

of the value to show that it is negative. However, computers don't have negative signs! Instead, we use a

representation known as Two's Complement. In two's complement, negative numbers are represented differently from

their positive counterparts. The highest bit (most significant bit) is used as the sign bit: 0 for positive numbers and

1 for negative numbers. To find the negative of a number, invert all the bits (changing 0s to 1s and vice versa) and

then add 1. For example, to represent -3 in an 4-bit system, start with the binary of +3 (0011), invert the bits

(1100), and add 1 (1101).

This seems a bit funky. Why does this work? To build some intuition, let's start with a smaller version that's easier

to think about; say 3 bits. If we use those three bits to represent only unsigned values, the minimum value we can

represent is 0 (000) and the maximum value we can represent is 7 (111). Now, let's look at what all the possible

3-bit values are in twos complement (remember, flip the bits and add 1):

| Signed Decimal | -4 | -3 | -2 | -1 | 0 | 1 | 2 | 3 |

|---|---|---|---|---|---|---|---|---|

| Twos Complement | 100 | 101 | 110 | 111 | 000 | 001 | 010 | 011 |

| Unsigned Value | 4 | 5 | 6 | 7 | 0 | 1 | 2 | 3 |

From this table, you can see that now the minimum number we can represent is -4 (100) and the maximum number that we

can represent is 3 (011). So we are basically mapping all the binary values that begin with a 1 to a negative value.

Twos complement makes doing binary arithmetic with signed number very convenient as well. We will see some examples in

a bit.

❓ Test Your Knowledge

Convert the decimal number -14 to an 8-bit two's complement binary number. If your answer was interpreted as an unsigned binary value instead of a twos complement value, what would its decimal value be?

Binary Arithmetic

Binary addition and subtraction are fundamental operations that are performed by the Arithmetic Logic Unit (ALU) of a computer's CPU. Understanding how these operations work in binary gives insight into the basic functioning of computers.

Addition

- Process: Binary addition works similarly to decimal addition but with two digits. You add bit by bit, starting from the least significant bit (rightmost bit).

- Carry Over: If the sum of two bits is 2 (binary 10), the 0 is written in the sum, and a 1 is carried over to the next higher bit.

Example:

1101 (13 in decimal)

+ 0111 (7 in decimal)

-------

10100 (20 in decimal)

Subtraction

This is where the beauty of twos complement comes in! Subtraction is simply the addition of a positive number and the negation of another number. In other words, to subtract B from A, all we need to do is add A to the two's complement of B.

Example:

A = 1010 (10 in decimal)

B = 0101 (5 in decimal)

Two's complement of B = 1011

Now add A and two's complement of B:

1010

+ 1011

-------

10101 (The leftmost bit is the carry, which is discarded in an 4-bit system, resulting in 0101 or 5 in decimal)

In summary, computers use binary representation to handle all sorts of data. Understanding binary arithmetic, including the representation of unsigned and signed integers, is fundamental to grasping how computers perform basic arithmetic operations.

❓ Test Your Knowledge

Perform the binary addition of

1101and1011. What happens if there is an overflow (when the number of bits that we have is insufficient to represent the sum)?

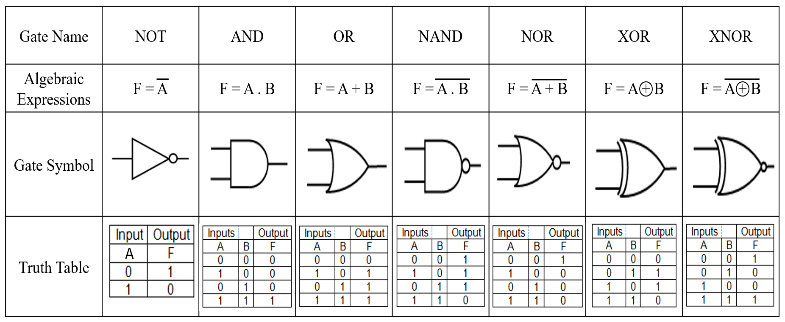

Digital Systems and Numbers

Now that we have introduced some of the basics of binary and integer representation, you should be well-prepared to dive a bit deeper into why this is relevant in computer architecture.

Please read Chapter 1 of the following material, which covers Digital Systems and Numbers.

Carpinelli, John D. (2023). An Animated Introduction to Digital Logic Design. NJIT Library.

After you have read this material, work through the exercises at the end of the chapter. We will revisit some of these during the live class session.

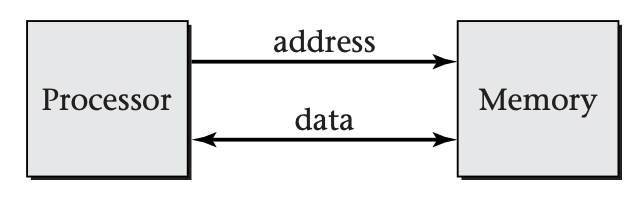

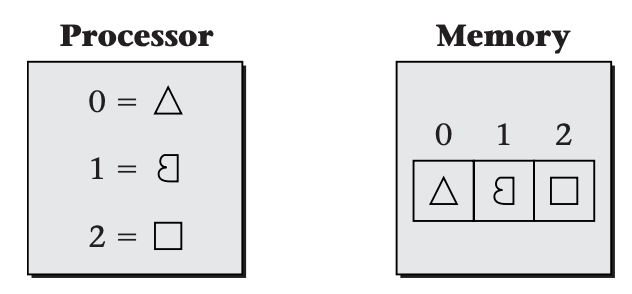

Hardware Organization of a System

You may be wondering why we care about binary and number representation. It turns out that understanding the relationship between bits, bytes, data representation, and integer calculations is crucial because all modern computer hardware is fundamentally built on the binary system, where bits (binary digits) are the smallest unit of data. Whether it's representing simple integers or complex data structures, these bytes are processed and manipulated by the CPU, specifically within its Arithmetic Logic Unit (ALU) for calculations and its Control Unit (CU) for orchestrating the sequence of operations. Let's dive into the architecture of these systems!

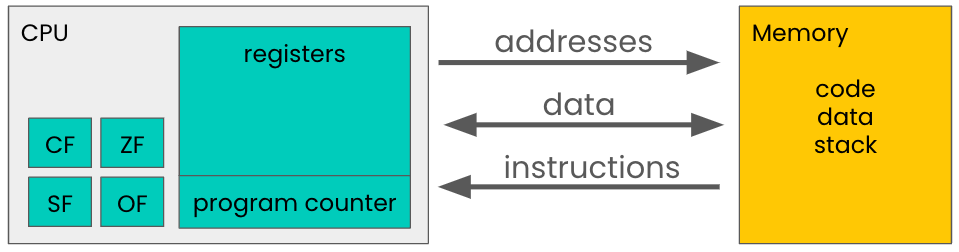

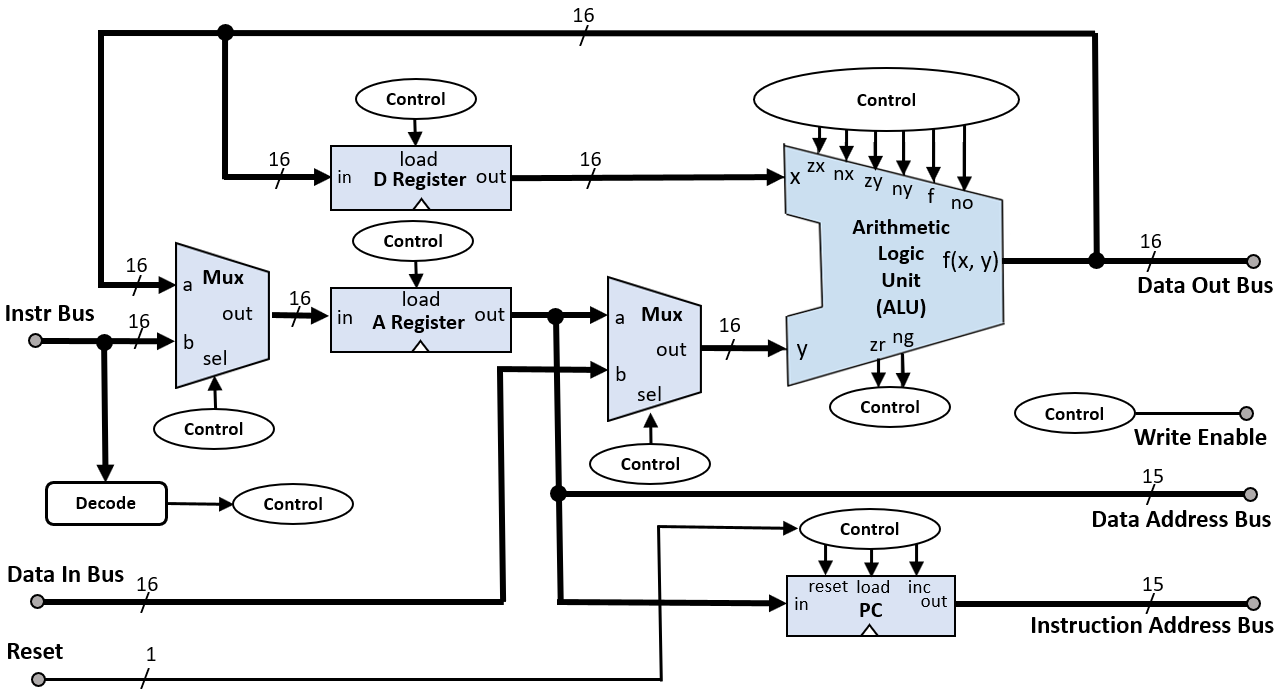

The von Neumann Architecture

The von Neumann architecture lays out the basic blueprint for constructing a functional computer system. It consists of four main subsystems: the arithmetic logic unit (ALU), the control unit (CU), memory, and input/output (I/O) interfaces. All these are interconnected by a system bus and operate cohesively to execute programs.

Here's a description of each component:

Central Processing Unit (CPU):

- Arithmetic Logic Unit (ALU): Think of the ALU as a chef in a kitchen. Just as a chef precisely follows recipes to prepare a variety of dishes (arithmetic and logical operations), the ALU follows instructions to perform various calculations and logical decisions. Arithmetic operations include basic computations like addition, subtraction, multiplication, and division. Logical operations involve comparisons, such as determining if one value is equal to, greater than, or less than another.

- Control Unit (CU): The CU can be likened to an orchestra conductor. An orchestra conductor directs the musicians, ensuring each plays their part at the right time. Similarly, the CU directs the operations of the computer, ensuring each process happens in the correct sequence and at the right time. The CU fetches instructions from memory, decodes them to understand the required action, and then executes them by coordinating the work of the ALU, memory, and I/O systems.

- Registers: These are small, fast storage locations within the CPU used to hold temporary data and instructions. Registers play a key role in instruction execution, as they store operands, intermediate results, and the like. Registers are like the small workbenches in a workshop, where tools and materials are temporarily kept for immediate use. These workbenches are limited in size but allow for quick and easy access to the tools (data and instructions) that are needed right away.

Memory:

- Primary Memory: This includes Random Access Memory (RAM) that stores data and instructions that are immediately needed for execution. RAM is volatile, meaning its contents are lost when the power is turned off. Think of RAM as an office desk. Items on the desk (data and programs) are those you are currently working with. They are easy to reach but can only hold so much; when the work is done, or if you need more space, items are moved back to the storage cabinets (secondary storage).

- Cache Memory: Located close to the CPU, cache memory stores frequently accessed data and instructions to speed up processing. It is faster than RAM but has a smaller capacity. Cache memory is like having a small notepad or sticky notes on the desk. You jot down things you need frequently or immediately so you can access them quickly without having to search through the drawers (RAM) or cabinets (secondary storage).

Input/Output (I/O) Mechanisms:

- Input Devices: These are peripherals used to input data into the system, such as keyboards, mice, scanners, and microphones. These are like the various ways information can be conveyed into a discussion or meeting – speaking (microphone), writing (keyboard), or showing diagrams (scanner).

- Output Devices: These include components like monitors, printers, and speakers that the computer uses to output data and information to the user.These are akin to how information is presented out of a meeting – through a presentation (monitor), printed report (printer), or announcement (speaker).

- I/O Controller: This component manages the communication between the computer's system and its external environment, including handling user inputs and system outputs.

Storage: Storage can be thought of as a special case of input/output devices.

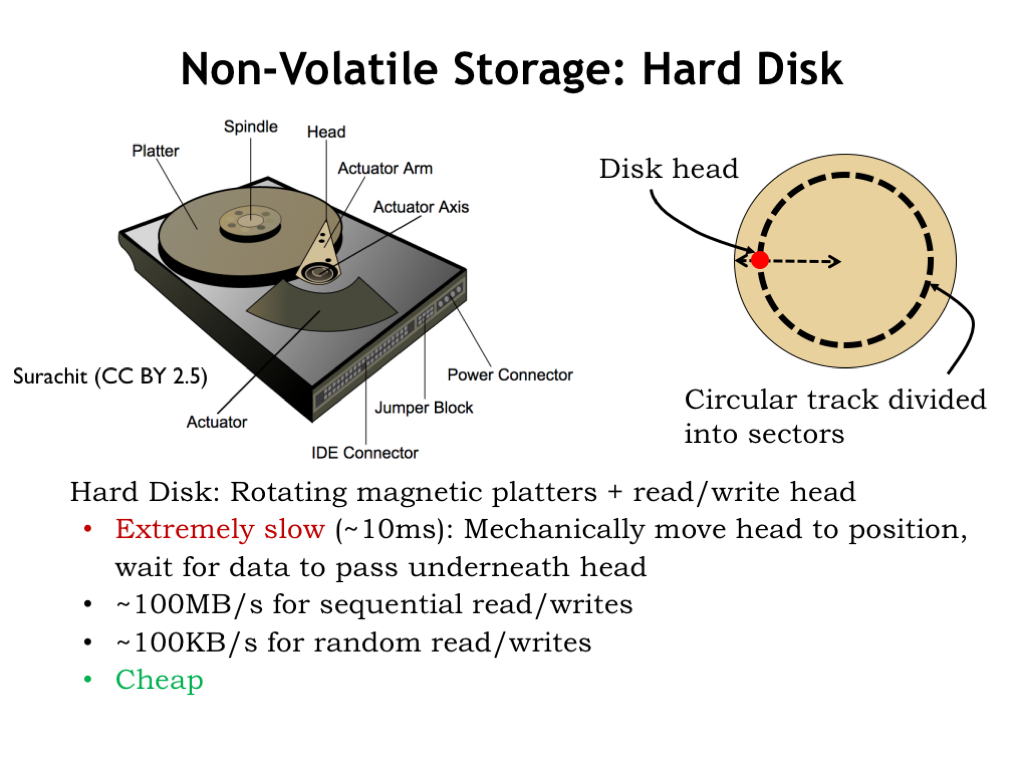

- Secondary Storage: Unlike primary memory, secondary storage is non-volatile and retains data even when the computer is turned off. Examples include hard disk drives (HDDs), solid-state drives (SSDs), and optical drives (like CD/DVD drives). This is like the filing cabinets in an office where documents are stored long-term. Unlike the items on the desk, these files remain safe even if the office closes for the day (computer is turned off).

- Storage Controllers: These are akin to the office clerks who manage the filing cabinets, responsible for filing away documents (writing data) and retrieving them when needed (reading data).

❓ Test Your Knowledge

Consider a simple computer task such as opening a music file from a hard drive and listening to it it. Explain how each of the following components of a computer contributes to this task: ALU, Control Unit, Registers, Primary Memory, Cache Memory, Input/Output Mechanisms, and Secondary Storage. Include the roles they play and how they interact with each other during the process.

The Fetch-Execute Cycle

The fetch-execute cycle is the process by which a computer carries out instructions from a program. It involves fetching an instruction from memory, decoding it, executing it, and then repeating the process. This cycle is the heartbeat of a computer, underlying every operation it performs. This video walks you through the fetch-execute cycle:

Here's a summarized overview of the steps involved in this cycle:

-

Fetch:

- The CPU fetches the instruction from the computer's memory. This is done by the control unit (CU) which uses the program counter (PC) to keep track of which instruction is next.

- The instruction, stored as a binary code, is retrieved from the memory address indicated by the PC and placed into the Instruction Register (IR).

- The PC is then incremented to point to the next instruction in the sequence, preparing it for the next fetch cycle.

-

Decode:

- The fetched instruction in the IR is decoded by the CU. Decoding involves interpreting what the instruction is and what actions are required to execute it.

- The CU translates the binary code into a set of signals that can be used to carry out the instruction. This often involves determining which parts of the instruction represent the operation code (opcode) and which represent the operand (the data to be processed).

-

Execute:

- The execution of the instruction is carried out by the appropriate component of the CPU. This can involve various parts of the CPU, including the ALU, registers, and I/O controllers.

- If the instruction involves arithmetic or logical operations, the ALU performs these operations on the operands.

- If the instruction involves data transfer (e.g., loading data from memory), the necessary data paths within the CPU are enabled to move data to or from memory or I/O devices.

- Some instructions may require interaction with input/output devices, changes to control registers, or alterations to the program counter (e.g., in the case of a jump instruction).

-

Repeat:

- Once the execution of the instruction is complete, the CPU returns to the fetch step, and the cycle begins again with the next instruction as indicated by the program counter.

This cycle is fundamental to the operation of a CPU, enabling it to process instructions, perform calculations, manipulate data, and interact with other components of the computer system. The speed at which this cycle operates is a key factor in the overall performance of a computer.

❓ Test Your Knowledge

In a typical fetch-execute cycle of a CPU, describe the specific role of the Program Counter (PC) and the Instruction Register (IR). How would the cycle be affected if the PC does not increment correctly after fetching an instruction?

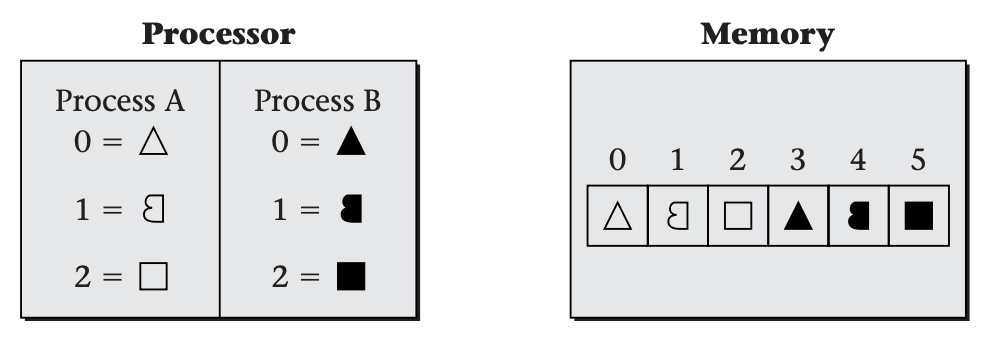

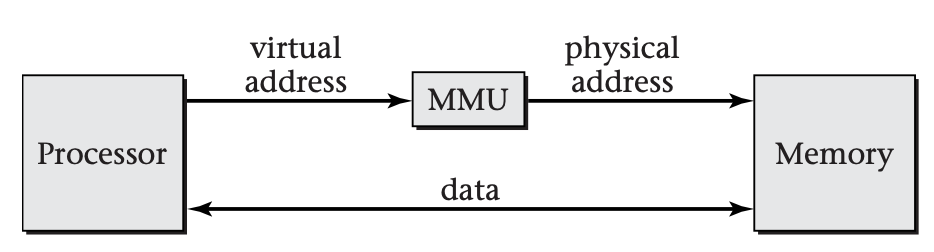

Operating System Responsibilities

Since we just learned about the hardware architecture of computers, we should also think about the software systems that are managing the hardware. These systems are known as Operating Systems (OS) (clever name, eh?).

Let's explore what happens when we run a simple "Hello, World!" program. This example will show how the OS manages the resources and acts as the conductor as the program is executed.

When you execute the "Hello, World!" program, the first thing the OS does is allocate the necessary resources, such as assigning memory for the program to reside in and determining the CPU time required for execution. This allocation is crucial for the program to run smoothly, and the OS handles it efficiently, ensuring your program has a space in the RAM and adequate processor time among the many tasks the computer is handling.

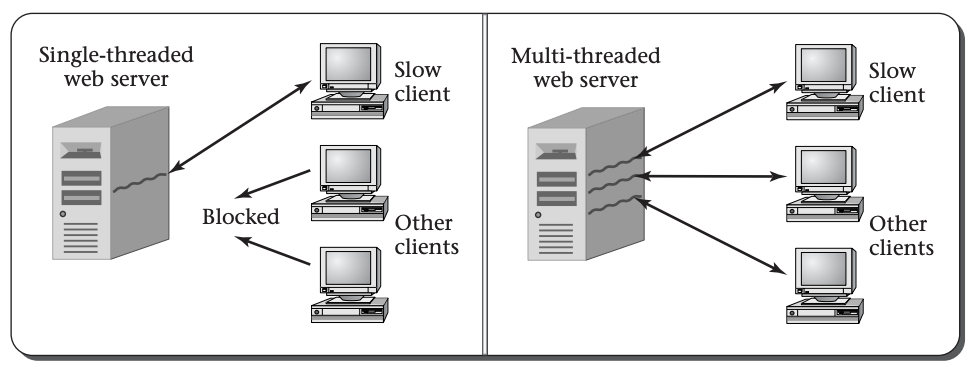

As the program starts running, the OS's task as a scheduler comes into play. Each processing core of the processor can really only do one thing so this scheduling is particularly important in a multitasking environment where numerous applications are vying for processor time. The OS balances these needs, allocating time slices to your program so it can execute its instructions without hogging the CPU.

In parallel, the OS manages the memory allocated to your program. It ensures that the "Hello, World!" program utilizes memory allocated only to it and that there's no overlap with other programs. This management includes allocating memory when the program starts and reclaiming it once the program ends, thus preventing any memory leaks or clashes with other applications.

As your program reaches the point of outputting "Hello, World!" to the console, the OS's role in input/output management becomes evident. It acts as a mediator between your program and the hardware of your computer, especially the display. The OS translates the program's output command into a form understandable by the display hardware, allowing the text "Hello, World!" to appear on your screen.

Throughout this process, the OS also upholds its responsibility for security and access control. It ensures that your program operates within its allocated resources and permissions.

Finally, if an error were to occur during the execution of the "Hello, World!" program, the OS's error detection and handling mechanisms kick in. These mechanisms are designed to gracefully manage errors, such as terminating the program if it becomes unresponsive, and then cleaning up to maintain system stability.

Through the lifecycle of running a "Hello, World!" program, the OS demonstrates its critical role in resource management, task scheduling, memory management, input/output handling, security enforcement, and error management. Each responsibility is interconnected, forming a cohesive and efficient system that underpins the functionality of our computers. As we continue in this course, we'll delve deeper into each of these areas.

❓ Test Your Knowledge

List and describe the major responsibilities of an operating system. Provide an example of each responsibility in action.

Diving Deeper

We want to understand the real details of how computers operate, so a common theme in this course will be "diving deeper". Above was a general description of the major components of an operating system, but only in abstract terms. We will reference the open textbook "Operating Systems: Three Easy Pieces" by Remzi Arpaci-Dusseau and Andrea Arpaci-Dusseau throughout the term to get more deeply into the details.

So, before moving to the next section, please click on this link and read Chapter 2: Introduction to Operating Systems.

Introduction to C (gcc)

Overview

Let's take a break and get to writing some code! Throughout this term, we are going to spend our time working with the C programming language. C is highly valued in systems programming due to its close-to-hardware level of abstraction, allowing programmers to interact directly with memory through pointers and memory management functions. This proximity to hardware makes C ideal for developing low-level system components such as operating systems, device drivers, and embedded systems, where efficiency and control over system resources are paramount. Additionally, C's widespread use and performance efficiency, combined with a rich set of operators and standard libraries, make it a foundational language for building complex, high-performance system architectures.

Using gcc

gcc stands for GNU C Compiler (and g++ is the GNU C++ Compiler). We will give a quick introduction to get you

started with the compiler. Instructions for installing gcc on your platform are provided in the homework assignment.

Let's say that you have a simple "Hello, World" program as follows:

#include <stdio.h>

void main ()

{

printf("hello");

}

You can compile and run this from your prompt as follows:

$ gcc hello.c

This will create an executable file called "a.out", which you can run using the following command:

$ ./a.out

Alternatively, you can specify the name of the executable that gets generates by using the "-o" flag. Here's an example:

$ gcc -o hello hello.c

If there are any syntax errors in your program, the compilation will fail and you'll get an error message letting you know what the error is (thought it may be a bit cryptic).

This should get you started with the very basics of using gcc.

C Language Tutorial

This video will provide a good introduction to much of the syntax of the language. You'll probably notice that the video is four hours long! Don't worry, though, you only need to watch the first hour for now. I encourage you to revisit this video over the coming weeks to learn about some of other syntax that may come in handy in the following weeks.

Also, as you are approached learning C, remember that you already know how to program and that you can apply that knowledge to C. In other words, at this point, you don't need to learn what an if statement is or how to use if statements to construct more complex logic, you just need to learn how to write an if statement in C. Don't be overwhelmed!

As always, it's best to try to follow along on your own with the tutorial as you go. Try entering the programs on your computer and running them yourself, then experiment with how you can make the sample programs perform other operations!

References

There are a ton of really good references available to you as you learn C. The one that I highly recommend is the book

that is commonly referred to as "K & R" for its authors, Brian Kernighan and Dennis Ritchie, the creator of the C

language. The book is very well written, thorough in its coverage, provides lots of good practice problems.

You can view the full text of the book linked below:

Kernighan, B.W.; Ritchie, D.M. The C Programming Language. Prentice Hall Software Series.

Exploration

In the introduction of this section, I mentioned that C was often used in systems programming due to its

"close-to-hardware" level of abstraction. Let's explore a very simple example, which will demonstrate some of these

operations. Try walking through the below example and writing down on paper, the bits that correspond to the value of

x at each step of executing the program and what the corresponding integer value is. After you have stepped through

it manually, enter the program and see if your predictions match the output.

#include <stdio.h>

// Function to set the nth bit of x

unsigned int setBit(unsigned int x, int n) {

return x | (1 << n);

}

// Function to clear the nth bit of x

unsigned int clearBit(unsigned int x, int n) {

return x & ~(1 << n);

}

// Function to toggle the nth bit of x

unsigned int toggleBit(unsigned int x, int n) {

return x ^ (1 << n);

}

// Function to check if the nth bit of x is set (1)

int isBitSet(unsigned int x, int n) {

return (x & (1 << n)) != 0;

}

int main() {

unsigned int x = 0; // Initial value

printf("Initial value: %u\n", x);

// Set the 3rd bit

x = setBit(x, 3);

printf("After setting 3rd bit: %u\n", x);

// Clear the 3rd bit

x = clearBit(x, 3);

printf("After clearing 3rd bit: %u\n", x);

// Toggle the 2nd bit

x = toggleBit(x, 2);

printf("After toggling 2nd bit: %u\n", x);

// Check if 1st bit is set

printf("Is 1st bit set? %s\n", isBitSet(x, 1) ? "Yes" : "No");

// Check if 2nd bit is set

printf("Is 2nd bit set? %s\n", isBitSet(x, 2) ? "Yes" : "No");

return 0;

}

❓ Test Your Knowledge

Did your answers match the actual output? If not, try experimenting with the Memory Spy tool to see how the computer sees the data, though you'll have to the program into smaller pieces. For example, this example shows the result of performing a basic shift operation and "anding" it to another value.

Week 1 Participation

During the live class session this week, you will be asked to complete some of the problems that we went through in this material.

Your completion of these questions and answers will make up your Weekly Participation Score.

Assignment 1: Introduction to C

Click this link to clone the repository: Assignment1-Intro-to-C

Overview

In this first assignment, you will get your system set up to be able to compile and run C programs, begin to familiarize yourself with the C programming language (we will use C throughout this term), and apply some of what you have learned about how your computer represents numbers.

Set Up Your Environment

Some general instructions for getting up and running with C on your computer are provided below. However, at this point in the program, we expect that you will be able to figure out some of the details yourself. If you get stuck, please reach out on Discord!

Windows 10 or 11

Mingw is a Windows runtime environment for GCC, GDB, make and related binutils. To

install mingw, open up a Windows Terminal and run the following scoop command. This assumes that you already have

scoop installed. If you don't, following the instructions at scoop.sh to install it.

> scoop install mingw

OS X

Install gcc and gdb using brew. If you don't have brew installed, first following the instructions at

brew.sh to install it.

> brew install gcc

> brew install gdb

Alternatives: Linux Virtual Machine, Dual Boot, or WSL

The GNU tools work particularly well in Linux, Unix, and FreeBSD -based environments. If you are running Windows, you can get up and running with a full Linux environments a number of different ways. Refer to the following article for information about those options How to Install Ubuntu on Windows.

Test Your Setup

-

Next, let's test your setup to make sure that everything is installed properly. Create a file that contains the following basic C program. You may use whatever editor you chose (e.g., notepad, emacs, vi, VSCode, etc.). Name your file

hello.c.#include <stdio.h> int main() { printf("Hello, World!\n"); return 0; } -

From within your shell, make sure that you have navigated to the directory containing the file you just created and run the following commands:

> gcc hello.c -o helloThis command uses the

gcccompiler to compile your C program into an executable calledhello. You can now run your compiled program as follows:> ./helloIf all goes well, your program should print out the message "Hello, World!" to the prompt. If all didn't go well, please post your errors or questions to the course's Discord Channel.

Assignment

Objective:

Develop a C program to parse command-line arguments and determine if they match specific patterns. This assignment is designed to introduce you to C while applying some of what you have learned about number representations and challenge your problem-solving skills.

Task:

Implement a program in ANSI C that takes command-line arguments and checks if each argument matches a specific

pattern based on a command-line flag: -x, -y, or -z, with -x being the default. The program should handle a -v

flag for a variant output.

Output:

By default, the program prints “match” for each argument that matches the pattern and “nomatch” for those

that do not, each on a separate line. If the -v flag is provided, the program instead performs a transformation on

matching arguments (specified below) and prints nothing for non-matching arguments.

Flags:

- At most one of

-x,-y, or-zcan be provided. - The

-vflag can appear before or after the pattern flag. - All flags precede other command-line arguments.

- Non-flag arguments do not start with

-. - All arguments are in ASCII.

Patterns and Conversions:

-

-x mode:

- Match a sequence of alternating digits and letters (e.g.,

1a2b3), ending with a digit. - For matches with

-v, convert each character to its ASCII value. - Example:

1a2b→1-97-2-98-3

- Match a sequence of alternating digits and letters (e.g.,

-

-y mode:

- Match any string where the sum of ASCII values of all characters is a prime number.

- For matches with

-v, convert the string into its hexadecimal ASCII representation. - Example:

abb(sum = 293, not a prime number) →61-62-62

-

-z mode:

- Match strings that form a valid arithmetic expression using digits and

+,-,*,/(e.g.,12+3-4). - For matches with

-v, print the number of+operations, followed by the number of-operations, followed by the number of*operations, followed by the number of\operations. - Example:

12+3-4→1100 - Note that depending on your shell, you may have to surround the expression with quotes when passing it through the command line. In Unix-like shells,

*is interpreted as a wildcard character.

- Match strings that form a valid arithmetic expression using digits and

Constraints:

- Compile without errors using

gccwith no extra flags. - Do not depend on libraries other than the standard C library.

- Do not use

regex.h, multiplication, division, or modulo operators. Bitwise operations are allowed. - Hand in a single

assignment1.cfile.

Examples:

$ ./assignment1 -x 1a2b3

match

$ ./assignment1 -x -v 1a2b3

1-97-2-98-3

$ ./assignment1 -y abb

match

$ ./assignment1 -z 12+3-4

match

$ ./assignment1 -z "1+2*3*2"

1020

Grading

Total Points: 70

-

Correctness and Functionality (55 Points)

- Pattern Matching (-x, -y, -z) (45 points)

- Correctly identifies matching and non-matching patterns for each mode. (15 points per mode)

- Transformation with -v Flag (10 points)

- Correctly performs the specified transformations for matching patterns.

- Pattern Matching (-x, -y, -z) (45 points)

-

Code Quality and Style (10 Points)

- Readability and Comments (5 points)

- Code is well-organized and readable with clear, concise comments explaining complex logic.

- Conventions and Syntax (5 points)

- Consistent naming conventions and adherence to standard C syntax and idiomatic practices.

- Readability and Comments (5 points)

-

Efficiency (5 Points)

- Algorithmic Efficiency (5 points)

- Uses efficient solutions and avoids unnecessary computations.

- Algorithmic Efficiency (5 points)

Submitting Your Work

Your work must be submitted Anchor for degree credit and to Gradescope for grading.

- Ensure that you

commitandpushyour local code changes to your remote repository. (Note: In general, you should commit and push frequently, so that you have a backup of your work, so that there is evidence that you did your own work, and so that you can return to a previous state easily.) - Upload your submission to Gradescope via the appropriate submission link by selecting the correct GitHub repository from the drop-down list.

- Export a zip archive of your GitHub repository by visiting your repo on

GitHub, clicking on the green

Codebutton, and selecting "Download Zip". - Upload the zip file of your repository to Anchor using the form below.

Machine-Level Representations Part 1

Welcome to week 2 of Computer Systems. This week, we will continue learning about the lowest levels of computer architecture, focusing primarily on Instruction Set Architectures and x86-64 assembly.

Topics Covered

- Introduction to assembly language (x86-64 as an example)

- Data movement instructions

- Arithmetic and logical operations

Learning Outcomes

After this week, you will be able to:

- Understand the instruction set architecture (ISA) and its role in computer organization.

- Interpret and decode machine instructions.

- Explain the use of registers and memory addressing modes in machine-level programming.

- Perform simple arithmetic and logical operations using machine instructions.

A Couple of Notes

Before we fully explore Instruction Set Architectures, we want to introduce a couple of concepts: data sizes and endianness. These both impact the binary representation of the data that the CPU will work with.

Basic Concepts of Data Sizes

Individual bits are rarely used on their own; instead, they are grouped together to form larger units of data. The most common of these is the byte, which typically consists of 8 bits. Bytes are the basic building blocks for representing more complex data types like integers, characters, and floating-point numbers.

The CPU architecture, particularly whether it is 32-bit or 64-bit, plays a significant role in determining the size of data it can naturally handle. A 32-bit CPU typically processes data in 32 bits (or 4 bytes) chunks, while a 64-bit CPU handles data in 64 bits (or 8 bytes) chunks. This distinction affects various aspects of computing:

- Memory Addressing: A 32-bit system can address up to (2^{32}) memory locations, equating to 4 GB of RAM, whereas a 64-bit system can address (2^{64}) locations, allowing for a theoretical maximum of 16 exabytes of RAM, though practical limits are much lower.

- Integer Size: On most systems, the size of the 'int' data type in C/C++ is tied to the architecture's word size. Therefore, on a 32-bit system, an integer is typically 4 bytes, while on a 64-bit system, it's often 8 bytes.

- Performance: Processing larger data chunks can mean faster processing of large datasets, as more data can be handled in a single CPU cycle. This advantage is particularly noticeable in applications that require large amounts of numerical computations, such as scientific simulations or large database operations.

Data Sizes in Programming

When programming, it's important to be aware of the sizes of different data types. For example, in C/C++, aside from the basic int type, you have types like short, long, and long long, each with different sizes and ranges. The size of these types can vary depending on the compiler and the architecture. There are also data types for floating-point numbers, like float and double, which have different precision and size characteristics.

Impact on Memory and Storage

Data sizes also have implications for memory usage and data storage. Larger data types consume more memory, which can be a critical consideration in memory-constrained environments like embedded systems. Similarly, when data is stored in files or transmitted over networks, the size of the data impacts the amount of storage needed and the bandwidth required for transmission.

Endianness

Endianness determines the order of byte sequence in which a multi-byte data type (like an integer or a floating point number) is stored in memory. The terms "little endian" and "big endian" describe the two main ways of organizing these bytes.

-

Little Endian: In little endian systems, the least significant byte (LSB) of a word is stored in the smallest address, and the most significant byte (MSB) is stored in the largest address. For example, the 4-byte hexadecimal number

0x12345678would be stored as78 56 34 12in memory. Little endian is the byte order used by x86 processors, making it a common standard in personal computers. -

Big Endian: Conversely, in big endian systems, the MSB is stored in the smallest address, and the LSB in the largest. So,

0x12345678would be stored as12 34 56 78. Big endian is often used in network protocols (hence the term "network order") and was common in older microprocessors and many RISC (Reduced Instruction Set Computer) architectures.

Why Endianness Matters

The importance of understanding endianness arises primarily in the context of data transfer. When data is moved between different systems (e.g., during network communication or file exchange between systems with different endianness), it's crucial to correctly interpret the byte order. Failing to handle endianness properly can lead to data corruption, misinterpretation, and bugs that can be difficult to diagnose.

Practical Implications

- Network Communications: Many network protocols, including TCP/IP, are defined in big endian. Hence, systems using little endian (like most PCs) must convert these values to their native byte order before processing and vice versa for sending data.

- File Formats: Some file formats specify a particular byte order. Software reading these files must account for the system's endianness to correctly read the data.

- Cross-platform Development: Developers working on applications intended for multiple platforms must consider the endianness of each platform. This is especially important for applications dealing with low-level data processing, like file systems, data serialization, and network applications.

Programming and Endianness

In high-level programming, endianness is often abstracted away, and developers don't need to handle it directly. However, in systems programming, embedded development, or when interfacing with hardware directly, developers may need to write code that explicitly deals with byte order. Functions like ntohl() and htonl() in C are used to convert network byte order to host byte order and vice versa.

Introduction to ISA

[Instruction Set Architecture (ISA)] (https://en.wikipedia.org/wiki/Instruction_set_architecture), is the interface that stands between the hardware and low-level software. In this module, we will delve into various types of ISAs and their usage.

Let’s first refresh our memories about basic CPU operation:

Definition of ISA

The Instruction Set Architecture (ISA) is essentially the interface between the hardware and the software of a computer system. The ISA encompasses everything the software needs to know to correctly communicate with the hardware, including but not limited to, the specifics of the necessary data types, machine language, input and output operations, and memory addressing modes.

Role of ISA in Computer Organization

The ISA is what defines a computer's capabilities as perceived by a programmer. The microarchitecture, or the computer's physical design and layout, is built to implement this set of functions. Any changes in the ISA would necessitate a change in the underlying microarchitecture, and conversely, changes in microarchitecture often foster enhancements in the ISA.

The ISA--via its role as an intermediary between software and hardware--enables standardized software development since software developers can write software for the ISA, not for a specific microarchitecture. As long as the microarchitecture correctly implements the ISA, the software will function as expected.

Programmers using high-level languages such as C or Python are often insulated from these lower-level details. However, understanding the connection can aid programmers in creating more efficient and effective code.

Check Your Understanding: What is the role of Instruction Set Architecture (ISA) in a computer system?

Click Here for the Answer

ISA is essentially the interface between the hardware and the software of a computer system. It outlines the specifics needed for software to correctly communicate with the hardware.

ISA Types

ISAs are classified into three essential types - RISC, CISC, and Hybrid.

RISC

Reduced Instruction Set Computer (RISC) has simple instructions that execute within a single clock cycle. Notable examples include ARM, MIPS, and PowerPC. Generally speaking, since the instructions are simpler in RISC architectures, each individual instruction teds to complete more quickly. The downside is that it takes more instructions to complete a task. In addition, it allows room for other enhancements, such as advanced techniques for pipelining and superscalar execution (to be discussed later this term), contributing to a higher performing processor.

CISC

Complex Instruction Set Computer (CISC) has complex instructions that may require multiple clock cycles for execution. A notable example is the x86 architecture. CISC operates on a principle that the CPU should have direct support for high-level language constructs.

Hybrid

Hybrid or Mixed ISAs combine elements of both RISC and CISC, aiming to reap the benefits of both architectures. In such cases, a Hybrid ISA might contain a core set of simple instructions alongside more complex, multi-clock ones for certain use-cases. An example of a hybrid ISA is the x86-64, used in most desktop and laptop computers today.

Usage of ISAs

RISC ISAs are traditionally used in applications where power efficiency is essential, such as in ARM processors often found in smartphones and tablets. They enable these devices to have long battery life while providing satisfactory performance.

On the other hand, CISC ISAs are commonly employed in devices needing high computational power. Your desktop or laptop computer likely employs a CISC ISA, such as the x86 or x86-64 architecture.

To illustrate this, consider a MOV command in an x86 assembly language, a form of a CISC ISA:

MOV DEST, SRC

This single instruction moves a value from the SRC (source) to DEST (destination). It's a complex operation wrapped in one instruction, embodying the CISC philosophy to reduce the program size and increase code efficiency.

In comparison, an equivalent operation in a RISC ISA like ARM might require several instructions:

LDR R1, SRC

STR R1, DEST

In this example, data is loaded from the memory address SRC into a storage location within the microprocessor, and then separately stored to memory address DEST.

Check Your Understanding: How does ISA influence software development?

Click Here for the Answer

The ISA standardizes software development. Developers write software for the ISA, not for a specific microarchitecture. This allows the same software to operate on different hardware as long as the hardware implements the ISA correctly.

Overview of x86 ISA

Perhaps the most dominant ISA in the computing world is the x86 instruction set. It is a broad term referring to a family of microprocessors that share a common heritage from Intel. The ISA began with the 8086 microprocessor in the late 1970s and was later extended by the 80286, 80386, and 80486 processors in the 1980s and early 1990s. The naming convention for this series of processors is where the 'x86' terminology comes from.

The x86 ISA has been repeatedly expanded to incorporate capabilities needed for contemporary computing, such as 64-bit data bus width and multi-core functionality. Today, the majority of personal computers and many servers utilize the x86 ISA or its extensions.

For a more detailed journey into the world of the x86 ISA, check out this video:

For a more practical understanding, let's examine a simple scenario using C and x86 assembly language. Suppose we have a C program with the following line of code:

int a = 10 + 20;

The corresponding x86 assembly code could look like:

mov eax, 10

add eax, 20

Here, the C language line involving addition of two integers translate to two assembly instructions - mov and add. mov loads our first integer into the EAX register and add performs the operation of adding 20 to the value in EAX.

This direct correspondence doesn't always exist. Real-world code is much more complex and doesn't always translate directly since compilers apply optimizations, high-level languages have abstractions that don't map directly onto assembly, and real-world assembly code takes advantage of hardware features such as pipelining and cache memory.

Check Your Understanding: What is the significance of the x86 ISA?

Click Here for the Answer

x86 ISA is a dominant ISA in computer systems, commonly used in personal computers and many servers due to its continual expansion to address contemporary computing needs.

Reading and Decoding Machine Instructions

Basics of Machine Instructions in x86 Instruction Set Architecture (ISA)

To begin, we must first understand what a machine instruction is. In essence, they are the lowest-level instructions that a computer’s hardware can execute directly. Now, among many ISAs available, we are going to focus on x86 ISA due to its widespread adoption and its complex yet powerful nature.

In x86 ISA, an instruction is broken into several parts: the opcode, which determines the operation the processor will perform; potentially one or two operand specifiers, which determine the sources and destination of the data; and sometimes an immediate, which is a fixed constant data value. This design of the x86 ISA is known as CISC (Complex Instruction Set Computing) architecture, where individual instructions can perform complex operations.

For an illustrative example, consider:

movl $0x80, %eax

In this x86 instruction, movl is the opcode representing a move operation, $0x80 is an immediate value operand, and %eax is a register operand.

Interpreting Machine Instructions

So, how does the machine interpret these instructions? Each opcode corresponds to a binary value, and the processor interprets these binary values to perform operations. The operands are also represented as binary values and can be either a constant value (immediate), a reference to a memory location, or a reference to a registry.

Continuing with the aforementioned movl instruction, it's converted into machine understandable format as follows:

b8 80 00 00 00

The first byte b8 corresponds to the mov opcode. The four bytes that follow represent the constant value $0x80 in little-endian format.

There are numerous resources like the x86 Opcode and Instruction Reference where you can find detailed mappings of opcodes and instructions.

Decoding Techniques and Importance

The process of converting these machine language instructions back into human-readable assembly language is known as decoding. There are several high-level methods including manual calculation and the use of tools like objdump, a tool that can disassemble and display machine instructions.

Let's continue with the movl instruction example. If we use objdump -d on the binary file containing our instruction, it would output:

00000000 <.text>:

0: b8 80 00 00 00 mov $0x80,%eax

While manual calculation can be informative for learning purposes, tools like objdump can simplify and expedite the process, especially with larger, more complex programs.

Decoding is not only a crucial aspect when it comes to understanding pre-existing assembly code, but also in computer security, debugging, and optimization. By examining the binary, one can identify bottlenecks, study potential security vulnerabilities, and understand at a deeper level how high-level language structures get translated into machine code.

Check Your Understanding: What are the main components of an x86 instruction and what do they represent?

Click Here for the Answer

An x86 instruction mainly consists of an opcode, which represents the operation to be performed and the operands, which represent the sources and destinations of the data. It may also include an immediate value which is a fixed constant data value.

Whether you're a software engineer looking to optimize an algorithm, a hacker aiming to exploit a system vulnerability, or a student keen to understand how your code manifests in the machine world, understanding and being able to decode machine instructions can open up a whole new perspective on programming.

Registers and their use in Programming

Definition and Purpose of Registers

On the most elementary level, registers are small storage areas in the CPU where data is temporarily held for immediate processing. At any moment, these small storage areas contain the most relevant and significant information for a current instruction. The purpose of registers is to provide a high-speed cache for the arithmetic and logical units, the parts of the CPU that perform operations on data.

Check Your Understanding: What is the purpose of registers in a CPU?

Click Here for the Answer

Registers provide a high-speed cache for the arithmetic and logical units of the CPU to perform operations on data. They temporarily store the most relevant information for the current instruction being executed.

Types of Registers

There are several types of registers depending on the CPU, serving different purposes.

For the x86 architecture, these include (https://en.wikibooks.org/wiki/X86_Assembly/X86_Architecture):

-

Accumulator Registers (AX): These are used for arithmetic calculations. For instance, in x86 assembly language, the

acccommand utilizes the accumulator register for performing operations. The accumulator register stores the results of arithmetic and logical operations (such as addition or subtraction). -

Data Registers (DX): Data registers are utilized for temporary storage of data. They are typically involved in data manipulation and holding intermediate results.

-

Counter Register (CX): It is used in loop instruction to store the loop count.

-

Base Register (BX): It behaves as a pointer to data in data segment.

-

Stack Pointer (SP): It points to a location in the stack segment.

-

Stack Base Pointer (BP): Used to point at the base of the stack.

-

Source Index (SI) and Destination Index (DI): SI is used as a source data address in string manipulation and DI as a destination data address.

-

Instruction Pointer (IP): It keeps track of where the CPU is in its sequence of tasks.

In the realm of x86 architecture, these registers have evolved from 8-bit to 16-bit (AX, BX, CX, DX), later to 32-bit (EAX, EBX, ECX, EDX), and currently to 64-bit versions (RAX, RBX, RCX, RDX).

General-purpose registers are key to operations like arithmetic, data manipulation, and address calculation. The x86 architecture presents eight general-purpose registers denoted by EAX, EBX, ECX, EDX, ESI, EDI, EBP, and ESP.

Special-purpose registers oversee specific computer operations—examples include the Program Counter (PC), Stack Pointer (SP), and Status Register (SR).

Refer to this wiki on CPU Registers to explore more on the topic

Registers in x86 Instruction Set Architecture (ISA)

Here's an illustrative example of how registers are used in x86 assembly language:

section .text

global _start ;must be declared for using gcc

_start:

mov eax, 5

add eax, 3

In the above example, the EAX register is utilized to store and perform arithmetic operation on values. Initially, the value 5 is moved into EAX register. Then, 3 is added to the value currently in EAX register, making the final value in EAX as 8.

Watch this video for a deeper understanding of the use of registers in x86.

Check Your Understanding: Why do you think the EAX register is often used in programming?

Click Here for the Answer

EAX is often referred to as an "accumulator register," as it is frequently used for arithmetic operations. Its value can easily be modified by addition, subtraction, or other operations, making it a versatile choice for programmers.

Memory Addressing Modes

As we get deeper into computer systems, we need to understand how data is transferred and how it gets into and out of the computer's memory. This is where memory addressing modes come into focus.

Purpose and Importance of Memory Addressing

At the heart of every computation is data, and data resides in memory. Memory addressing modes are the methods used in machine code instructions to specify the location of this data. Understanding memory addressing modes provides insight into optimizing code, debugging, and understanding the subtleties of system behavior (as we have seen with some Python examples in previous terms).

The instruction set architecture (ISA) of a computer defines the set of addressing modes available. In this section, we'll explore the intricacies of addressing modes using x86 assembly language.

Principle of Memory Addressing Modes

There are various modes such as immediate addressing, direct addressing, indirect addressing, register addressing, base-plus-index and relative addressing. Each of these modes is designed to efficiently support specific programming constructs, such as arrays, records and procedure calls.

Before continuing, read https://en.wikipedia.org/wiki/Addressing_mode for a full list and detailed explanation of memory addressing modes.

Check Your Understanding: Name at least three memory addressing modes.

Click Here for the Answer

Three memory addressing modes include immediate addressing, direct addressing, and indirect addressing.

Various Modes and Their Uses

Let's touch on a few modes for context.

- Immediate Addressing: The operand used during the operation is a part of the instruction itself. Useful when a constant value needs to be encoded into the instruction.

- Direct Addressing: The instruction directly states the memory address of the operand. This mode is beneficial while accessing global variables.

- Indirect Addressing: Here, the instruction specifies a memory address that contains another memory address, which is the location of the operand. Indirect addressing is crucial for implementing pointers or references.

It's equally important to contextualize these modes into real-world programming paradigms. For instance, when writing a for loop, the iterator variable often uses immediate addressing since the start, end, and step variables are usually predefined values.

Note: Each ISA has different addressing modes. The C programming language provides several of these, primarily via its pointer constructs.

Check out this video for more explanation about various Memory Modes:

Check Your Understanding: In which addressing mode is the operand part of the instruction itself?

Click Here for the Answer

Immediate Addressing.

Examples of x86 Addressing Modes

x86 assembly provides a rich set of addressing modes for accessing data. We'll dig into two of these - Immediate and Register Indirect.

-

Immediate Mode: This mode uses a constant value within the instruction. For instance,

mov eax, 1. Here1is an immediate value. -

Register Indirect Mode: A register contains the memory address of the data. For example,

mov eax, [ebx]implies that the data is at the memory address stored inebx.

Importantly, x86 supports complex addressing modes including base, index and displacement addressing methods combined, such as mov eax, [ebx+ecx*4+4].

In this mode, ebx is the base register, ecx is the index register, 4 is the scale factor, and the final 4 is the displacement. This mode is handy when dealing with arrays and structures.

Check Your Understanding: What is the addressing mode used when a register contains the memory address of the data?

Click Here for the Answer

Register Indirect Mode.

Arithmetic Operations

Arithmetic operations form the building blocks of most programmatic tasks. They are behind the computing of coordinates for graphics, calculating sum totals, adjusting sound volumes, and a myriad of other tasks. Broadly speaking, arithmetic operations allow manipulation and processing of data. In this section, we will delve into the mechanics of performing basic arithmetic operations using Machine Instructions and working with arithmetic operations in x86.

Addition and Subtraction

In x86 assembly language, the ADD and SUB instructions are used to perform addition and subtraction respectively. C provides the common symbols + and - for these operations.

Here is an example:

int sum = 5 + 3;

int diff = 5 - 3;

MOV EAX, 5

ADD EAX, 3 ; sum stored in EAX

MOV EBX, 5

SUB EBX, 3 ; difference stored in EBX

Multiplication and Division

Multiplication is accomplished using the MUL instruction and division uses DIV. In C, these operations are represented by * and / respectively. Here's a simple example:

float product = 6 * 2;

float quotient = 6 / 2;

MOV EAX, 6

IMUL EAX, 2 ; product stored in EAX

MOV EBX, 6

IDIV EBX, 2 ; quotient stored in EBX

Check Your Understanding: If a program requires the multiplication of two numbers, which instruction can you use in x86 assembly code?

Click Here for the Answer

The `MUL` instruction is used to multiply two numbers in x86 assembly.

Modulo

Modulo operation, also referred to as modulus, is closely tied with division. It finds the remainder or signed remainder of a division, of one number by another (called the modulus of the operation).

In programming realms, especially in conditions like looping over a circular buffer or implementing some hash functions, the modulo operation plays a vital role.

Unlike other processors, x86 doesn't have a specific instruction for modulo. It is usually calculated from the remainder part after executing the DIV instruction.

Here's an example in C:

int dividend = 15;

int divisor = 10;

int quotient = dividend / divisor; // Division

int remainder = dividend % divisor; // Modulo

In this case, the quotient would be 1, and the remainder is 5 which is the result of the modulo operation.

Overflow

In the realm of computer arithmetic operations, particularly those that deal with signed and unsigned integers, special situations arise when implementing addition, subtraction, multiplication, and division operations. These situations, often referred to as "overflows", can potentially cause unexpected and undesired behavior in a system.

When we perform arithmetic operations, the state of the result determines the flag settings. Overflow is a condition that occurs when the result of an operation exceeds the number range that can be represented by the permitted number of bits.

Overflow Flags

When it comes to handling overflows, the processor leverages a dedicated bit, known as the Overflow Flag (OF), to indicate the occurrence of an overflow during an arithmetic operation (Michael, 2016).

For instance, consider a signed 8-bit integer variable (with the range of -128 to 127) and the operation 100 + 50. The result, being 150, exceeds the maximum positive value 127 and thus we say an overflow has occurred. The overflow flag would be set in this case.

Flag Usage in Instructions

You can use instructions including JO (Jump if Overflow) and JNO (Jump if Not Overflow) for manipulating program control flow based on the state of the overflow flag. These instructions are useful for implementing effective error handling mechanisms in your code.

Let's consider this example, using x86 assembly language:

mov eax, 0x7FFFFFFF ; Move maximum 32-bit signed integer to EAX

add eax, 1 ; Add 1 to EAX

jo overflow_occurred ; Jump to overflow_occurred if Overflow Flag is set

jmp operation_success ; Jump to operation_success if Overflow Flag is not set

overflow_occurred: