Neural Networks

Neural networks, or more specifically artificial neural networks (ANNs), are a computational approach loosely inspired by the structure and function of the human brain. They learn from data, which makes them powerful tools for tasks such as classification and prediction.

Neurons: The Building Blocks

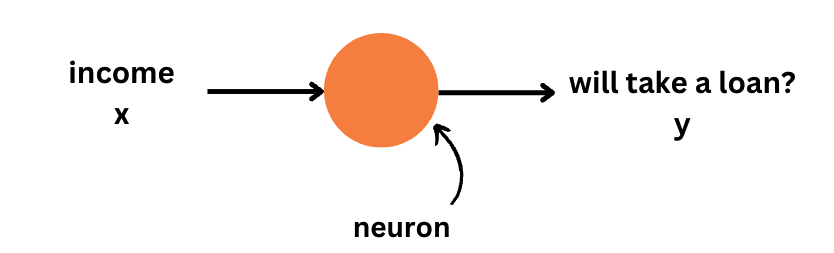

At the heart of a neural network is the neuron. A neuron receives input, processes it through computation, and then outputs a result. It is the fundamental unit that makes up a neural network.

Consider a neuron that takes an individual's income as input and predicts whether they are likely to take out a loan.

You already know how to do similar tasks using linear regression. A neuron is like a linear regression model with a few additional components. As in linear regression, a neuron takes an input (income), multiplies it by a weight, adds a bias, and generates an output. However, the neuron also includes an activation function, which introduces non-linearity to the output.

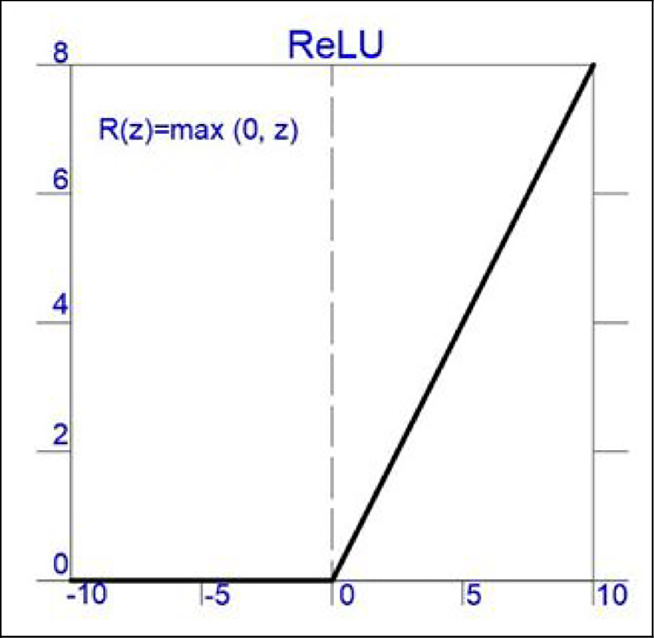
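To make the comparison with linear regression concrete, here is a minimal sketch of a single neuron. The function names, the weight and bias values, and the choice to measure income in thousands are all assumptions made for illustration; a trained neuron would learn its own weight and bias.

```python
def identity(z):
    """No activation: the neuron reduces to plain linear regression."""
    return z


def relu(z):
    """Rectified Linear Unit: keep positive values, replace the rest with zero."""
    return max(0.0, z)


def neuron(x, weight, bias, activation):
    """Linear step (weight * x + bias) followed by an activation function."""
    z = weight * x + bias      # same form as a linear regression
    return activation(z)       # the activation is the extra component


# Hypothetical, hand-picked parameters (income measured in thousands).
weight, bias = 0.5, -20.0

print(neuron(30.0, weight, bias, identity))  # 0.5 * 30 - 20 = -5.0
print(neuron(30.0, weight, bias, relu))      # -5.0 is replaced with 0.0
print(neuron(60.0, weight, bias, relu))      # 0.5 * 60 - 20 = 10.0
```

With `identity` the neuron behaves exactly like a one-variable linear regression; swapping in a different activation is the only change needed to make its output non-linear.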

Activation Functions: Introducing Non-linearity

Activation functions introduce non-linearity into the neuron's output. This is crucial because it allows the model to handle complex, non-linear data. A commonly used activation function is the Rectified Linear Unit (ReLU), which outputs the input value if it is positive and zero otherwise.

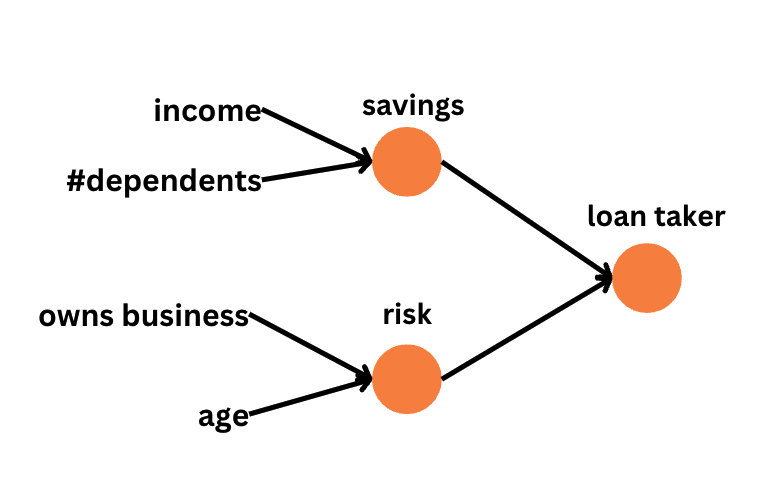
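The ReLU rule described above fits in one line of Python. The sketch below also includes a quick check of why ReLU counts as non-linear: a linear function `f` would satisfy `f(a + b) == f(a) + f(b)`, and ReLU does not. The sample values are arbitrary.

```python
def relu(z):
    """Return z when it is positive, and zero otherwise."""
    return z if z > 0 else 0.0


values = [-2.0, -0.5, 0.0, 1.5, 3.0]
print([relu(v) for v in values])  # [0.0, 0.0, 0.0, 1.5, 3.0]

# Non-linearity check with two arbitrary numbers.
a, b = 2.0, -3.0
print(relu(a + b))        # relu(-1.0) = 0.0
print(relu(a) + relu(b))  # 2.0 + 0.0 = 2.0, which differs from the line above
```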

Constructing a Simple Neural Network

A neural network is essentially a series of neurons connected together. The output of one neuron feeds into the input of another. For example, in a simple network:

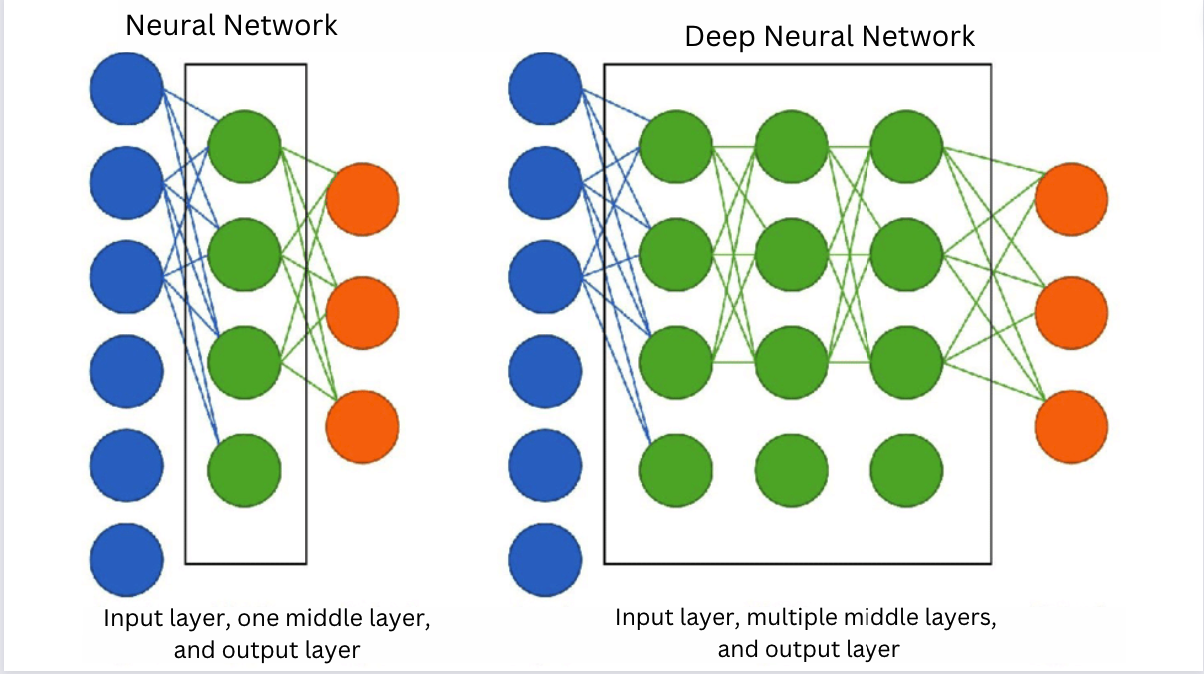
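As a rough sketch of neurons feeding into one another, the snippet below chains two neurons so that the second takes the first one's output as its input. It is not meant to reproduce the exact network in the figure; the two-neuron layout and all weights and biases are hypothetical values chosen so the arithmetic is easy to follow.

```python
def relu(z):
    return max(0.0, z)


def neuron(x, weight, bias):
    """One neuron: weighted input plus bias, passed through ReLU."""
    return relu(weight * x + bias)


def tiny_network(x):
    """Two neurons in sequence; parameters are hand-picked for illustration."""
    hidden = neuron(x, weight=0.5, bias=-20.0)    # first neuron sees the raw input
    return neuron(hidden, weight=2.0, bias=1.0)   # second neuron sees the first's output


print(tiny_network(60.0))  # hidden = 10.0, output = 2 * 10 + 1 = 21.0
print(tiny_network(30.0))  # hidden = 0.0,  output = 2 * 0 + 1 = 1.0
```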

Deep Neural Networks: Beyond the Basics

When a neural network includes multiple layers of neurons, it becomes a deep neural network. These layers allow for the modeling of complex relationships and patterns in the data.
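One way to picture "multiple layers" is as a loop that passes the data through each layer in turn. The sketch below assumes NumPy, random untrained parameters, ReLU after every layer (including the last, purely for simplicity), and layer sizes of 3, 4, 4, and 1 that were picked arbitrarily.

```python
import numpy as np


def relu(z):
    # Element-wise ReLU for arrays
    return np.maximum(0.0, z)


def forward(x, layers):
    """Pass x through each (weights, bias) pair in order, applying ReLU each time."""
    for weights, bias in layers:
        x = relu(np.dot(x, weights) + bias)
    return x


rng = np.random.default_rng(0)  # fixed seed so repeated runs give the same numbers
sizes = [3, 4, 4, 1]            # 3 inputs, two hidden layers of 4 neurons, 1 output

# One (weights, bias) pair per pair of adjacent layer sizes
layers = [(rng.random((n_in, n_out)), rng.random(n_out))
          for n_in, n_out in zip(sizes[:-1], sizes[1:])]

x = np.array([[0.2, 0.5, 0.1]])   # one sample with 3 features
print(forward(x, layers).shape)   # (1, 1): one prediction for the one sample
```

Adding depth here only means adding entries to `sizes`; the forward pass itself does not change.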

Watch the following video for a reiteration of the concepts we discussed above using a different example.

Coding a Neural Network Layer

To grasp the inner workings of neural networks, you can implement a layer in Python with just a few lines of code:

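    import numpy as np

    class Layer:
        def __init__(self, input_size, output_size):
            # One weight per (input, output) pair, drawn uniformly from [0, 1)
            self.weights = np.random.rand(input_size, output_size)
            # One bias per output neuron
            self.bias = np.random.rand(output_size)

        def output(self, inputs):
            # Weighted sum of the inputs plus the bias (no activation applied)
            return np.dot(inputs, self.weights) + self.bias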

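This simple example initializes a layer with random weights and biases and shows how an input is transformed as it passes through the layer. Because no activation function is applied, the output is a purely linear function of the input.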
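To check the layer's arithmetic by hand, it can help to replace the random parameters with known values. The sketch below restates the layer so it runs on its own, then overwrites the weights and bias with hand-picked numbers; those numbers and the two-sample batch are assumptions for illustration only.

```python
import numpy as np


class Layer:
    def __init__(self, input_size, output_size):
        self.weights = np.random.rand(input_size, output_size)
        self.bias = np.random.rand(output_size)

    def output(self, inputs):
        return np.dot(inputs, self.weights) + self.bias


layer = Layer(input_size=2, output_size=3)

# Overwrite the random parameters so the result can be verified by hand.
layer.weights = np.array([[1.0, 0.0, 2.0],
                          [0.0, 1.0, 3.0]])
layer.bias = np.array([0.5, 0.5, 0.5])

# Two samples, two features each: shape (2, 2)
batch = np.array([[1.0, 2.0],
                  [3.0, 4.0]])

result = layer.output(batch)
print(result.shape)  # (2, 3): one row per sample, one column per output neuron
print(result)        # first row: 1.5, 2.5, 8.5; second row: 3.5, 4.5, 18.5
```

For the first sample, the third output is 1.0 * 2.0 + 2.0 * 3.0 + 0.5 = 8.5, which matches the last value in the first row.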

[Optional] Here's an interesting video that demonstrates how to code a neural network layer from scratch using Python.

Note that the previous two examples don't involve learning. Learning, as we know, involves adjusting the weights and biases in a neural network to improve its performance. We will learn more about this in the next sections.