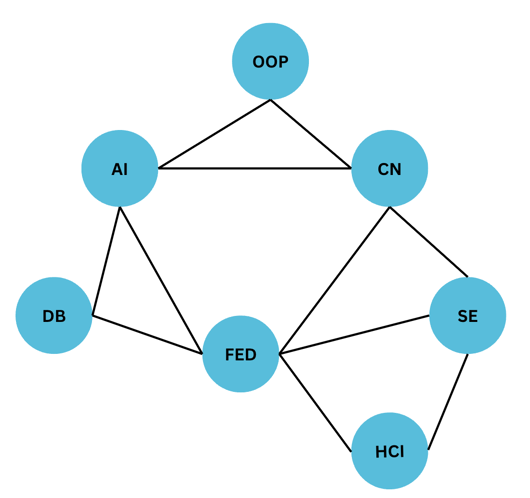

Course Overview

Welcome to CSE005 , Artificial Intelligence (AI). You are joining a global learning community dedicated to helping you learn and thrive in the AI era.

📺 Watch this welcome video from your instructor.

Course Description

Artificial Intelligence (AI) aims to teach students the techniques for building computer systems that exhibit intelligent behavior. AI is one of the most consequential applications of computer science, and is helping to solve complex real-world problems, from self-driving cars to facial recognition. This course will teach students the theory and techniques of AI, so that they understand how AI methods work under a variety of conditions.

The course begins with an exploration of the historical development of AI, and helps students understand the key problems that are studied and the key ideas that have emerged from research. Then, students learn a set of methods that cover: problem solving, search algorithms, knowledge representation and reasoning, natural language understanding, and computer vision. Throughout the course, as they apply technical methods, students will also examine pressing ethical concerns that are resulting from AI, including privacy and surveillance, transparency, bias, and more.

Course assignments will consist of short programming exercises and discussion-oriented readings. The course culminates in a final group project and accompanying paper that allows students to apply concepts to a problem of personal interest.

Topics

- Intelligent Agents

- Search strategies

- Game Playing

- Knowledge and Reasoning

- Natural Language Processing

- Computer Vision

- Ethics and safety

How the course works

There are multiple ways you'll learn in this course:

- Read and engage with the materials on this site

- Attend live class and complete the activities in class

- Answer practice exercises to try out the concepts

- Complete assignments and projects to demonstrate what you have learned

Active engagement is necessary for success in the course! You should try to write lots of programs, so that you can explore the concepts in a variety of ways.

You are encouraged to seek out additional practice problems outside of the practice problems included in the course.

Learning Outcomes

By the end of the course, students will be able to:

- Demonstrate understanding of the foundation and history of AI

- Explain basic knowledge representation, problem solving, inference, and learning methods of AI

- Develop intelligent systems to solve real-world problems by selecting and applying appropriate algorithms

- Explain the capabilities and limitations of various AI algorithms and techniques

- Participate meaningfully in discussions of AI, including its current scope and limitations, and societal implications

Instructor

- Mohammed Saudi

- mohammed.saudi@kibo.school

Please contact on Discord first with questions about the course.

Live Class Time

Note: all times are shown in GMT.

- Wednesday, 15:00 - 16:30 GMT

Office Hours

- Tuesday, 15:00 - 16:30 GMT

Core Reading List

- Norvig P., Russell S. (2020). Artificial Intelligence: A Modern Approach. Pearson, 4th e. (Chapters 1-12, 23, 24, 25,27)

Supplemental Reading List

- Zhang A, Lipton Zn, Li M, Smola A. (2021) Dive into Deep Learning

Live Classes

Each week, you will have a live class (see course overview for time). You are required to attend the live class sessions.

Video recordings and resources for the class will be posted after the classes each week. If you have technical difficulties or are occasionally unable to attend the live class, please be sure to watch the recording as quickly as possible so that you do not fall behind.

Live Classes Feedback Form: Feedback Form

| Week | Topic | Live Class | Slides | Code |

|---|---|---|---|---|

| 1 | Intelligence Via Search | video | slides | code |

| 2 | Game Playing | video | slides | |

| 3 | CSPs | video | slides | code |

| 4 | Reinforcement Learning | video | slides | code |

| 5 | Supervised Learning | video | slides | code |

| 6 | CNN | video | slides | code 1 code 2 |

| 7 | Logic | video | slides | code 1 code 2 |

| 8 | NLP | video | slides | code |

Assessments & Grading

In this course, you will be assessed on your ability to apply the concepts and techniques covered in class to solve problems. You will be assessed through a combination of programming assignments, quizzes, and a final project.

You overall course grade is composed of these weighted elements:

- Programming Assignments & Oral Exams: 40%

- Quizzes: 40%

- Final Project: 20%

Programming Assignments

Each week, you will be given a programming assignment to complete. These assignments will be graded based on the correctness of your solution, as well as the quality of your code. You will be expected to submit your code on GitHub, and your code will be reviewed by the instructor.

Late Policy

Please review the late submission policy for each assignment on the assignment details page.

Quizzes

There will be weekly quizzes to test your understanding of the material. These quizzes will be a combination of multiple choice, short answer, and programming questions. You will be expected to complete these quizzes on your own, without any external help.

Oral Exams

Based on your coursework and assignment submissions, you may be required to participate in an oral exam. This is designed to ensure that you have a solid understanding of the course material and have independently completed your work.

Final Project/Exam

A final project will be submitted by the end of the term. You will have the last two weeks to complete it. More details will be provided later.

Getting Help

If you have any trouble understanding the concepts or stuck on a problem, we expect you to reach out for help!

Below are the different ways to get help in this class.

Discord Channel

The first place to go is always the course's help channel on Discord. Share your question there so that your Instructor and your peers can help as soon as we can. Peers should jump in and help answer questions (see the Getting and Giving Help sections for some guidelines).

Message your Instructor on Discord

If your question doesn't get resolved within 24 hours on Discord, you can reach out to your instructor directly via Discord DM or Email.

Office Hours

There will be weekly office hours with your Instructor and your TA. Please make use of them!

Tips on Asking Good Questions

Asking effective questions is a crucial skill for any computer science student. Here are some guidelines to help structure questions effectively:

-

Be Specific:

- Clearly state the problem or concept you're struggling with.

- Avoid vague or broad questions. The more specific you are, the easier it is for others to help.

-

Provide Context:

- Include relevant details about your environment, programming language, tools, and any error messages you're encountering.

- Explain what you're trying to achieve and any steps you've already taken to solve the problem.

-

Show Your Work:

- If your question involves code, provide a minimal, complete, verifiable, and reproducible example (a "MCVE") that demonstrates the issue.

- Highlight the specific lines or sections where you believe the problem lies.

-

Highlight Error Messages:

- If you're getting error messages, include them in your question. Understanding the error is often crucial to finding a solution.

-

Research First:

- Demonstrate that you've made an effort to solve the problem on your own. Share what you've found in your research and explain why it didn't fully solve your issue.

-

Use Clear Language:

- Clearly articulate your question. Avoid jargon or overly technical terms if you're unsure of their meaning.

- Proofread your question to ensure it's grammatically correct and easy to understand.

-

Be Patient and Respectful:

- Be patient while waiting for a response.

- Show gratitude when someone helps you, and be open to feedback.

-

Ask for Understanding, Not Just Solutions:

- Instead of just asking for the solution, try to understand the underlying concepts. This will help you learn and become more self-sufficient in problem-solving.

-

Provide Updates:

- If you make progress or find a solution on your own, share it with those who are helping you. It not only shows gratitude but also helps others who might have a similar issue.

Remember, effective communication is key to getting the help you need both in school and professionally. Following these guidelines will not only help you in receiving quality assistance but will also contribute to a positive and collaborative community experience.

Screenshots

It’s often helpful to include a screenshot with your question. Here’s how:

- Windows: press the Windows key + Print Screen key

- the screenshot will be saved to the Pictures > Screenshots folder

- alternatively: press the Windows key + Shift + S to open the snipping tool

- Mac: press the Command key + Shift key + 4

- it will save to your desktop, and show as a thumbnail

Giving Help

Providing help to peers in a way that fosters learning and collaboration while maintaining academic integrity is crucial. Here are some guidelines that a computer science university student can follow:

-

Understand University Policies:

- Familiarize yourself with Kibo's Academic Honesty and Integrity Policy. This policy is designed to protect the value of your degree, which is ultimately determined by the ability of our graduates to apply their knowledge and skills to develop high quality solutions to challenging problems--not their grades!

-

Encourage Independent Learning:

- Rather than giving direct answers, guide your peers to resources, references, or methodologies that can help them solve the problem on their own. Encourage them to understand the concepts rather than just finding the correct solution. Work through examples that are different from the assignments or practice problems provide in the course to demonstrate the concepts.

-

Collaborate, Don't Complete:

- Collaborate on ideas and concepts, but avoid completing assignments or projects for others. Provide suggestions, share insights, and discuss approaches without doing the work for them or showing your work to them.

-

Set Boundaries:

- Make it clear that you're willing to help with understanding concepts and problem-solving, but you won't assist in any activity that violates academic integrity policies.

-

Use Group Study Sessions:

- Participate in group study sessions where everyone can contribute and learn together. This way, ideas are shared, but each individual is responsible for their own understanding and work.

-

Be Mindful of Collaboration Tools:

- If using collaboration tools like version control systems or shared documents, make sure that contributions are clear and well-documented. Clearly delineate individual contributions to avoid confusion.

-

Refer to Resources:

- Direct your peers to relevant textbooks, online resources, or documentation. Learning to find and use resources is an essential skill, and guiding them toward these materials can be immensely helpful both in the moment and your career.

-

Ask Probing Questions:

- Instead of providing direct answers, ask questions that guide your peers to think critically about the problem. This helps them develop problem-solving skills.

-

Be Transparent:

- If you're unsure about the appropriateness of your assistance, it's better to seek guidance from professors or teaching assistants. Be transparent about the level of help you're providing.

-

Promote Honesty:

- Encourage your peers to take pride in their work and to be honest about the level of help they received. Acknowledging assistance is a key aspect of academic integrity.

Remember, the goal is to create an environment where students can learn from each other (after all, we are better together) while we develop our individual skills and understanding of the subject matter.

Academic Integrity

When you turn in any work that is graded, you are representing that the work is your own. Copying work from another student or from an online resource and submitting it is plagiarism. Using generative AI tools such as ChatGPT to help you understand concepts (i.e., as though it is your own personal tutor) is valuable. However, you should not submit work generated by these tools as though it is your own work. Remember, the activities we assign are your opportunity to prove to yourself (and to us) that you understand the concepts. Using these tools to generate answers to assignments may help you in the short-term, but not in the long-term.

As a reminder of Kibo's academic honesty and integrity policy: Any student found to be committing academic misconduct will be subject to disciplinary action including dismissal.

Disciplinary action may include:

- Failing the assignment

- Failing the course

- Dismissal from Kibo

For more information about what counts as plagiarism and tips for working with integrity, review the "What is Plagiarism?" Video and Slides.

The full Kibo policy on Academic Honesty and Integrity Policy is available here.

Course Tools

In this course, we are using these tools to work on code. If you haven't set up your laptop and installed the software yet, follow the guide in https://github.com/kiboschool/setup-guides.

- GitHub is a website that hosts code. We'll use it as a place to keep our project and assignment code.

- GitHub Classroom is a tool for assigning individual and team projects on Github.

- Visual Studio Code is an Integrated Development Environment (IDE) that has many plugins which can extend the features and capabilities of the application. Take time to learn how ot use VS Code (and other key tools) because you will ultimately save enormous amounts of time.

- Anchor is Kibo's Learning Management System (LMS). You will access your course content through this website, track your progress, and see your grades through this site.

- Gradescope is a grading platform. We'll use it to track assignment submissions and give you feedback on your work.

- Woolf is our accreditation partner. We'll track work there too, so that you get credit towards your degree.

Intelligence via Search

You will acquire practical skills in modeling and constructing programs to achieve intelligence by addressing search problems. We will learn how to solve problems such as maze navigation and pathfinding using various search algorithms like depth-first search, breadth-first search, and A* search.

This Week's Work:

- Study the material and complete the practical exercises.

- Complete the quiz and coding assignment for this week. The coding assignment will involve building a shipping route planner using the A* search algorithm.

Upon completing this week's work, you will be able to:

- Develop intelligent software agents capable of solving diverse problems, such as pathfinding and playing games like the 15-puzzle.

- Explain the field of AI and its fundamental concepts.

AI: What and Why

Intelligence

If a robot navigates city streets without colliding with anything, would you consider this robot intelligent? Many would agree, myself included. However, if a human performs the same task, you might hesitate to describe them as intelligent. On the other hand, when a person tackles a challenging math problem or quickly learns a new language, we are more inclined to label them as intelligent.

Some have seen intelligence as the ability to use language, form abstractions and concepts, solve problems, and acquire knowledge.Turing Test

In the 1950s, Alan Turing, a British computer scientist, proposed a test to determine whether a machine can think. The test, known as the Turing test, involves a human evaluator who engages in a natural language conversation with two other parties: one human and one machine. If the evaluator cannot distinguish between the human and the machine, the machine is considered intelligent. This test is still used today to assess the intelligence of machines.

(Optional) Watch the video below to learn more about the Turing test:

Defining Intelligence

A widely accepted definition of intelligence is the ability to make the correct decisions. But what does it mean to make the correct decision? To answer this question, we need to delve into the concept of rationality.

Rationality

From a scientific standpoint, rationality is the quality of being grounded in reason and logic. Thus, a person or a machine is considered rational if their decisions are based on reasoning and logic, and are guided by specific goals.

Example of Rationality:

Suppose we have an agent (software, a person, or a machine) playing chess. In this context, making the move expected to maximize the chances of winning the game is the right decision. This exemplifies rationality because the agent is making a decision based on reasoning and logic, aimed at achieving a specific goal: winning the game.

Another example of rationality:

Imagine an agent operating a vehicle. Here, making choices aimed at maximizing the likelihood of reaching the destination safely, and perhaps quickly, represents the right course of action. This too embodies rationality, with decisions derived from reasoning and logic in pursuit of the specific goal of arriving safely and efficiently.

Human agents rely on sensory organs like eyes and ears, along with limbs such as hands and legs, to perceive and interact with their surroundings. In contrast, robotic agents are equipped with specialized sensors like cameras and infrared range finders, enabling them to sense their environment, while actuators such as motors enable them to move and manipulate objects.

Software agents, on the other hand, interpret inputs such as keystrokes, file contents, and network packets, using them to execute tasks like displaying information on a screen, storing data in files, or transmitting data over networks.

Artificial Intelligence (AI)

Artificial Intelligence (AI) is the field of building systems that can think and act rationally.

AI is about building computer systems that can replicate various human activities, such as walking, seeing, understanding, speaking, learning, responding, and many others.

The AI dream is to someday build machines as intelligent as humans (capable of doing multiple tasks intelligently). This is sometimes called Artificial General Intelligence or AGI.

Machine Learning

Machine learning, a subfield of AI, focuses on building systems that can learn from data or, in other words, make rational decisions based on data.

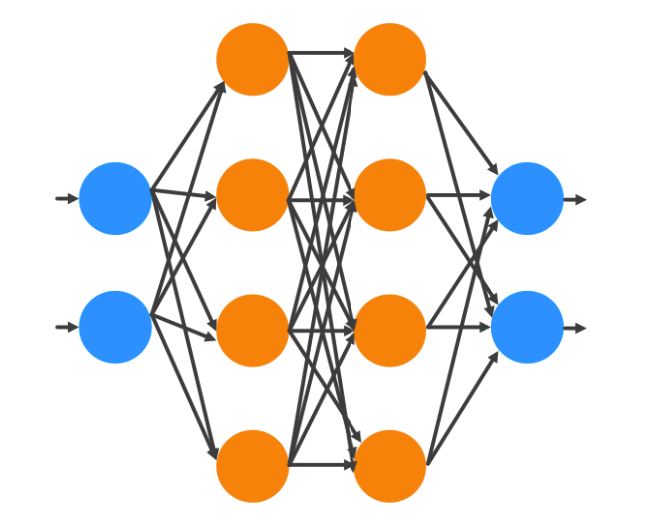

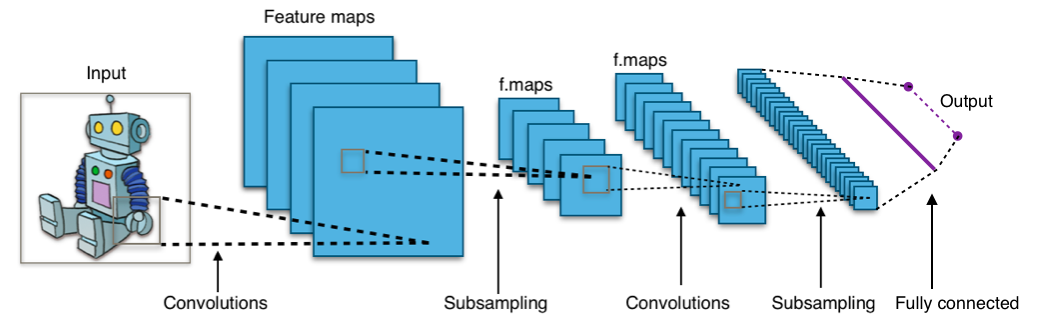

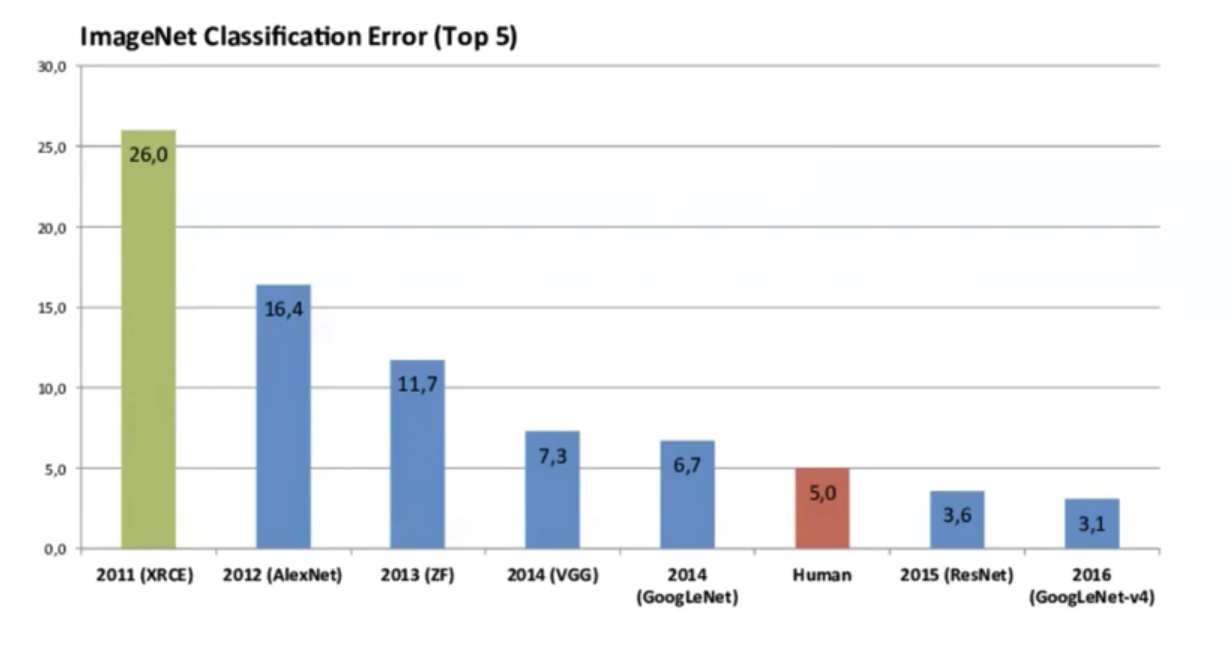

Deep Learning

Deep learning is a subset of machine learning that centers on developing machines capable of learning from data using deep neural networks.

In future lessons, we will discuss machine learning and deep learning in more detail.

Why AI Now?

The history of AI dates back to the 1950s. If you'd like a quick three-minute recap of AI's history, you can watch this video:

The question we want to address here is why AI is currently experiencing a surge in attention. There are several significant factors contributing to this phenomenon; I will highlight the two most important ones:

Data

The availability of extensive public datasets has profoundly transformed the AI landscape. These datasets, often curated and made accessible by organizations, government agencies, or academic collaborations, provide copious amounts of diverse, well-structured, and labeled data. Prominent examples include ImageNet for image recognition, the Common Crawl for natural language processing, and the Human Genome Project for genomics. Access to such extensive public datasets has enabled AI researchers to train their models on data that represents a broader spectrum of real-world scenarios, leading to substantial improvements in AI system performance and accuracy.

Hardware

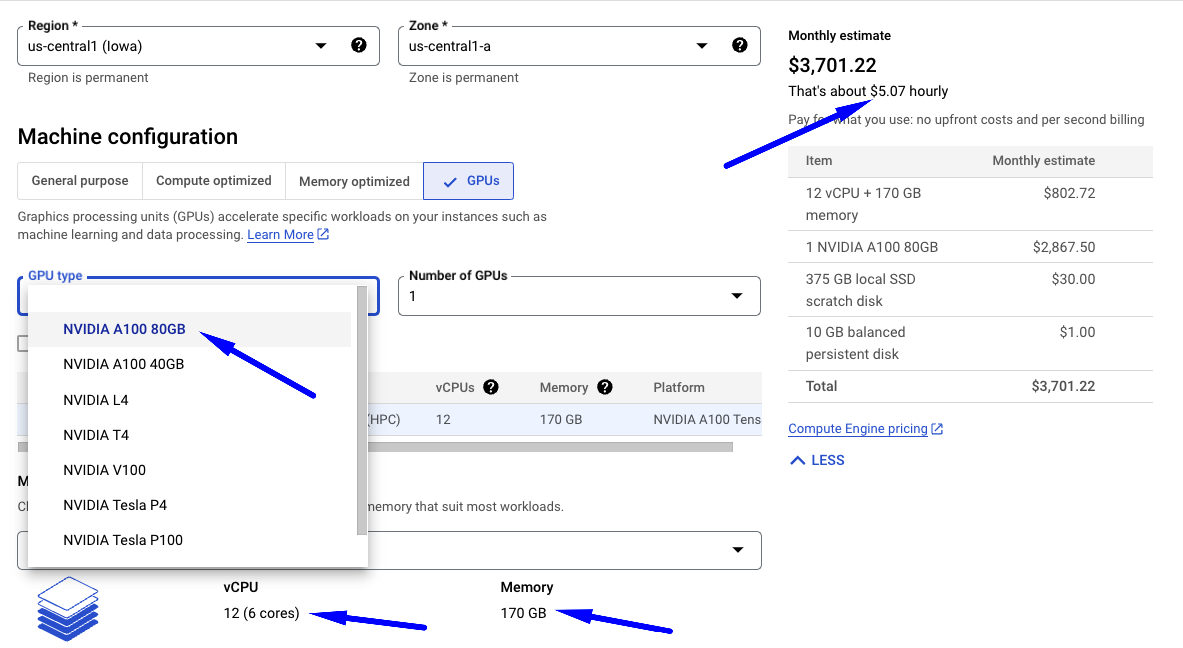

Advancements in hardware technology, especially the development of high-performance graphics processing units (GPUs) and specialized AI accelerators like TPUs (Tensor Processing Units), have significantly increased the computational power available for AI tasks. AI models, particularly deep learning neural networks, demand substantial computational resources for training and inference. The enhancement in computational capabilities has empowered researchers to work with more extensive and complex models, resulting in improved AI performance.

Although GPUs and TPUs can be costly to purchase and operate, they are now widely accessible in the cloud, making them affordable for a broader audience. This accessibility has facilitated AI researchers and practitioners in experimenting with more intricate models and larger datasets without requiring substantial investments in expensive hardware.

Here is a screenshot of the Google Cloud Platform which provides access to GPUs and TPUs:

Summary:

-

AI is the field of building systems that can think and act rationally (guided by reasoning, logic, knowledge, and specific goals).

-

The term "agent" is used to describe an entity striving to achieve specific objectives. An agent can be a person, a machine, software, or a combination of these.

-

AI is getting so much attention now due to many reasons including the availability of large public datasets and the advancement of hardware technologies.

Check your understanding:

- Explain AI to a 10-year-old.

- List two reasons for the current surge of interest in AI.

Explore, Share, and Discuss:

Search the web and find some of the latest hardware devices that empower AI applications.

💬 Share your findings with us on Discord here.

Applications of AI in the Real World

Artificial Intelligence (AI) has become an integral part of our daily lives, revolutionizing various industries and solving complex problems. Below are just a few examples of AI applications in the real world.

Healthcare:

-

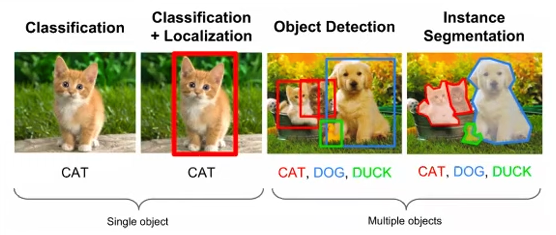

Medical Imaging: AI is used to analyze medical images such as X-rays, MRIs, and CT scans, helping detect diseases, tumors, and anomalies. For example, Google's DeepMind developed an AI system capable of diagnosing eye diseases like diabetic retinopathy from retinal scans.

-

Drug Discovery: AI accelerates drug discovery by predicting potential drug candidates and simulating their effects. Companies like Atomwise use AI to discover new medicines for various diseases, reducing research time.

Finance:

-

Algorithmic Trading: AI is employed to make high-frequency trades, analyze market data, and make split-second decisions. Companies like Renaissance Technologies use AI for hedge fund management.

-

Fraud Detection: Banks and financial institutions use AI to detect fraudulent activities. For instance, PayPal employs AI to spot suspicious transactions and prevent fraud.

Transportation:

-

Self-Driving Cars: Companies like Tesla, Waymo, and Uber are developing self-driving cars that use AI for real-time navigation and collision avoidance.

-

Traffic Management: AI optimizes traffic flow, reduces congestion, and enhances public transportation. Cities like Singapore use AI for traffic light control, reducing travel times.

Education:

-

Personalized Learning: AI-powered educational platforms like Duolingo adapt to individual learning styles and provide personalized learning paths.

-

Plagiarism Detection: AI systems, such as Turnitin, scan student papers and detect instances of plagiarism.

Retail:

-

Recommendation Systems: E-commerce giants like Amazon use AI to recommend products to customers based on their browsing and purchase history.

-

Inventory Management: AI optimizes inventory levels, reducing costs and minimizing waste. Walmart uses AI for demand forecasting and stock replenishment.

Entertainment:

-

Content Recommendation: Streaming services like Netflix and Spotify use AI to suggest movies, shows, and music based on user preferences.

-

Content Creation: AI is used to generate content, such as deepfake videos and AI-written articles, but it also has potential for creative content generation in the future.

Agriculture:

-

Precision Agriculture: AI-driven drones and sensors are used to monitor crops, soil quality, and weather conditions. This helps farmers make informed decisions about irrigation, fertilization, and pest control.

-

Crop Disease Detection: AI systems can identify crop diseases from images, enabling early intervention to save crops. AgShift, for example, uses AI to identify defects in harvested fruits.

Security:

-

Facial Recognition: AI is employed in security systems to identify individuals at airports, in public spaces, and on smartphones.

-

Cybersecurity: AI is used to detect and prevent cyber threats by analyzing patterns of malicious activities. Companies like Darktrace employ AI for real-time threat detection.

Think, Share and Discuss:

Think of a use case that you haven't seen in this lesson and try to explain how AI can be used to solve it. The highest voted answer will be featured in the next lesson.

💬 Share it with us on Discord here.

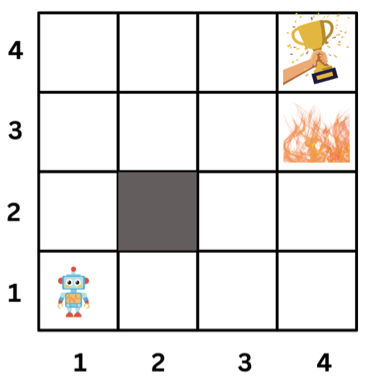

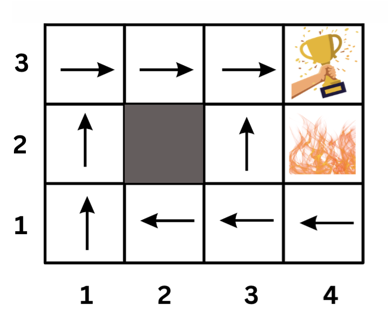

Search Problem Modeling

Ready for some excitement?

Would you be interested in learning how to program a computer to solve mazes, puzzles, find paths, or optimize item arrangements? What if you could also use this knowledge to minimize your time spent in traffic or create a system that plays chess or solves a Rubik's cube? This is precisely the essence of our lesson on "Search."

Search Problems

A search problem is a specific type of computational problem that involves exploring a set of possible states or configurations (known as the state space) and finding a sequence of actions to achieve a goal within this state space.

This is a broad definition, so let's clarify it with an example.

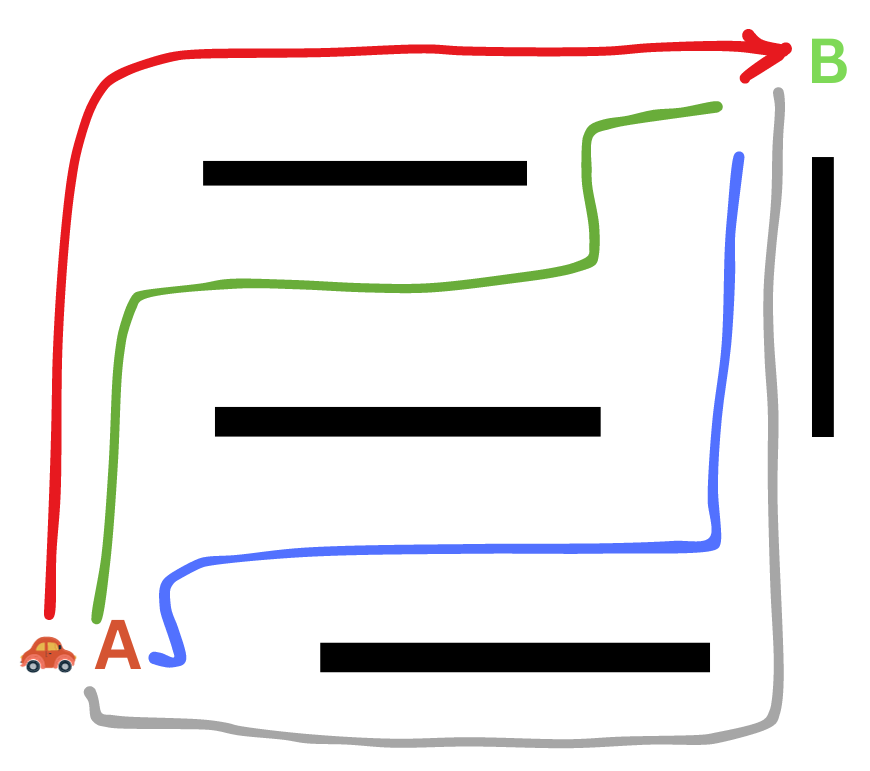

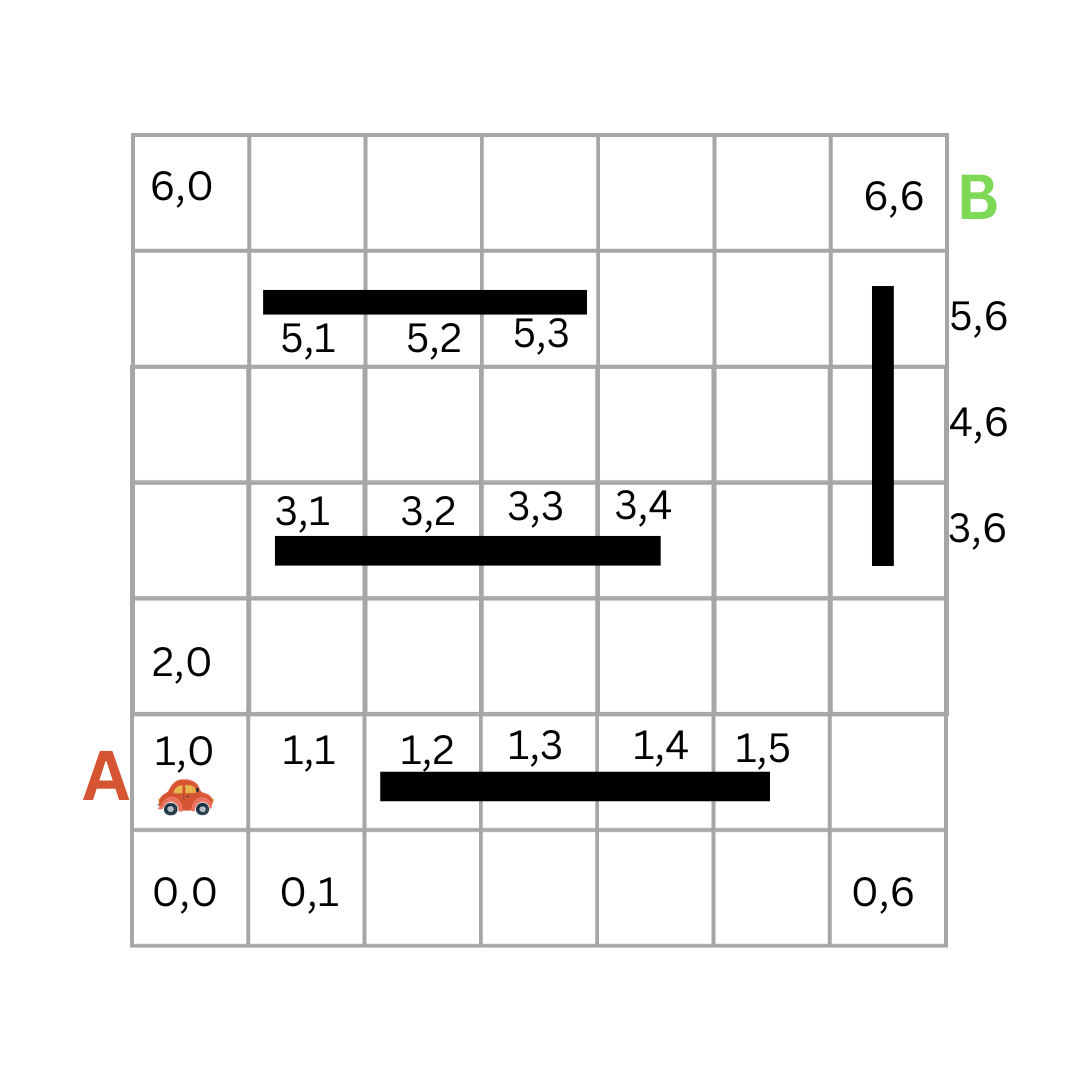

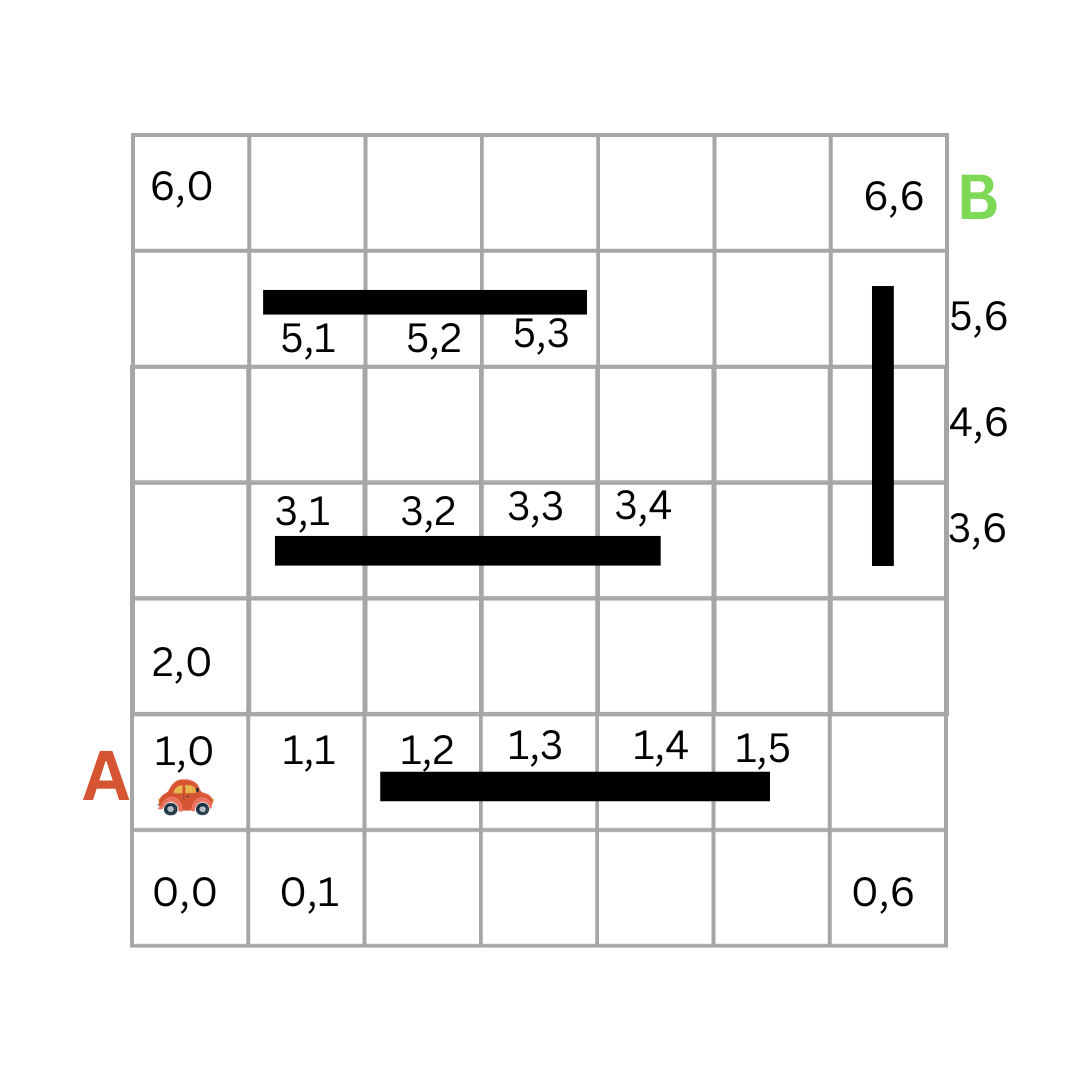

Consider the scenario of a car moving from point A to point B, as illustrated below:

To reach point B, the car can follow various paths, as shown in the image below:

This is a search problem identified by the following:

- We have an agent (the car) that is trying to find a solution (the path) within a defined problem space (the road).

- The agent in this case is called a problem-solving agent.

- The problem space, or state space, consists of various states or configurations (the different positions of the car).

- The goal is to find a sequence of actions that will lead from an initial state (the starting position of the car) to a desired goal state (the destination).

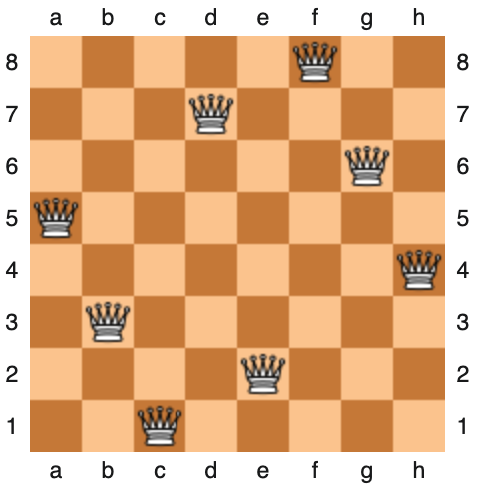

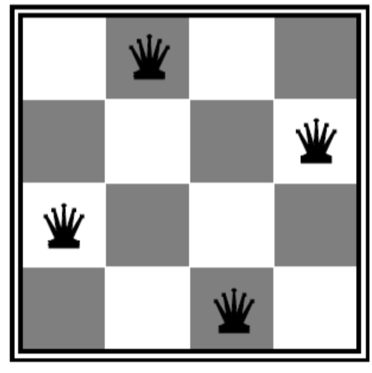

The 8 Queens Problem

Let's consider another example. If we have a chessboard and eight queens, how can we place the queens on the chessboard so that no queen can attack another queen? This is known as the 8 queens problem.

This is also a search problem identified by the following:

- There are various configurations (states) of the queens on the chessboard.

- The goal is to find a sequence of actions that will lead from an initial state (empty board) to a desired goal state (the configuration of the queens on the chessboard so that no queen can attack another queen).

More Examples

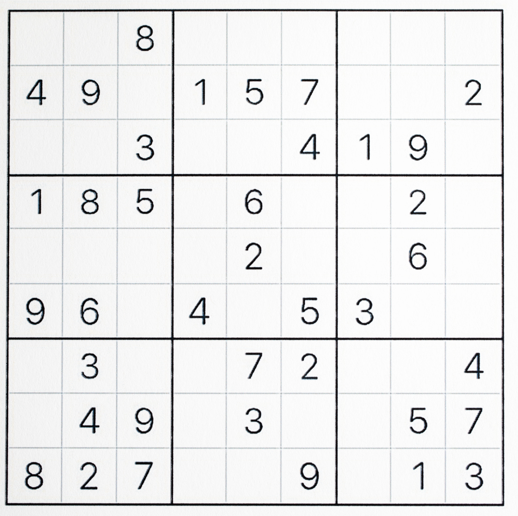

Here are a few more examples of search problems:

- Finding the optimal path for data packets to travel from a source to a destination

- Solving puzzles like Sudoku or Rubik's Cube

- Determining the optimal route between two locations on a map

You will learn how to solve such problems in this course 🚀.

🎯 Understanding Our Objective

One might question whether we are genuinely searching for something in this context. Well, you can think of the solution itself as the object of our search.

The solution is the sequence of actions that will lead us from the initial state to the goal state.

In the car example, our mission is to discover the path leading us to point B (by searching possible states). In the 8-queens problem, our mission is to find the configuration of the queens on the chessboard (by searching possible states starting with an initial one).

Modeling Search Problems

To tackle these problems with a computer, we aim to create a computer-friendly representation, a computational model. A typical model of a search problem consists of the following:

State Space:

This represents the different configurations of the problem space. For instance, the different positions of the car, the different arrangements of tiles in a puzzle, or the different configurations of a robot.

Initial State:

The starting state of the problem. For example, the starting position of the car, the initial arrangement of tiles in a puzzle, the initial position of a robot, etc.

Goal State:

The desired state of the problem. For example, it could be the car's destination, the desired puzzle tile arrangement, or the robot's target position. A goal test is a function that determines whether a given state is a goal state. For instance, checking if the car has reached its destination, if the puzzle tiles are in the correct order, or if the robot has arrived at its destination.

Actions:

Actions are the possible moves or steps that can transition the system from one state to another. These can include actions like moving up, down, left, or right.

Transition Model:

The transition model (or Successor Function) is a function that accepts a state and an action as input and yields an allowable new state as output. For example, the transition model for the car problem would take the car's current position and the desired direction, Up for example, as input and return the new position as output.

Solution:

The solution is a series of actions that lead from the initial state to the goal state. This could be the path the car takes from its starting point to its destination, the sequence of moves needed to solve the puzzle, or the route the robot follows to reach its goal.

Modeling the Car's Journey

Let's explore our previous example of a car journeying from point A to point B and frame it as a search problem. Our focus in this lesson is on modeling the problem's state space, initial state, goal state, goal test, actions, and transition model. The solution will be discussed in an upcoming lesson.

State Space:

To model the state space, we need to consider all the possible configurations of the car. To do that, we need to decide how we will represent the car's position and the environment in which it is moving.

To simplify things, we can represent the car's position as a coordinate on a grid. For example, the car's position at point A can be represented as (1,0), and its position at point B can be represented as (6,6).

So, the state space is all the possible positions of the car on the grid. This is a finite set of states, and we can represent it as a list in Python.

state_space = []

GRID_SIZE = 6

for i in range(GRID_SIZE):

for j in range(GRID_SIZE):

state_space.append(1)

Important Note: The state space in this example is small enough to be represented as a list. However, in most cases, the state space is too large to be represented as a list, as in this example, and we don't need to store the entire state space upfront. More information on this will be provided later.

Initial State:

After modeling the state space, it is clear now that our initial state is the position of the car at point A, which is (1, 0). We can represent the initial state as a tuple in Python.

initial_state = (1, 0)

Goal State and Goal Test:

The goal state is the position of the car at point B, which is (6, 6). Similarly, We can represent the goal state as a tuple in Python.

goal_state = (6, 6)

The goal test will be a function that checks if the car's current position is the same as the goal position. If so, it returns True; otherwise, it returns False.

def goal_test(state):

return state == goal_state

Actions:

Actions are the possible moves or steps that can transition the system from one state to another. In our example, the car can move up, down, left, or right. We can represent the actions as a list in Python.

actions = ["up", "down", "left", "right"]

Transition Model (Successor Function)

At a specific state, the agent can take one of the possible actions: up, down, left, or right. For each specific action, the agent will end up in a new state.

Try it!

Take 10 minutes and try to write a transition model function for our car example. It's a function that should take a state and an action as input and return a new state as output.

def transition_model(state, action):

# Your code here

Recall that the current state is the position of the car within the grid. The action is the direction the car is moving in. For example, if the car is at position (1, 1) and the action is up, the newly returned position will be (2, 1).

Don't rush through it. This is a crucial step in your learning process. If you can't do it, you can check the solution below. But, please, try to do it first.

Unfold the sample code below for an idea of how a transition model for this environment can be implemented.

Transition Model Function

def transition_model(state, action):

x, y = state

if action == 'up':

return (x, y + 1)

elif action == 'down':

return (x, y - 1)

elif action == 'left':

return (x - 1, y)

elif action == 'right':

return (x + 1, y)

else:

raise ValueError(f"Unknown action: {action}")

- Note: The sample code above does not check if the new state is valid or not.

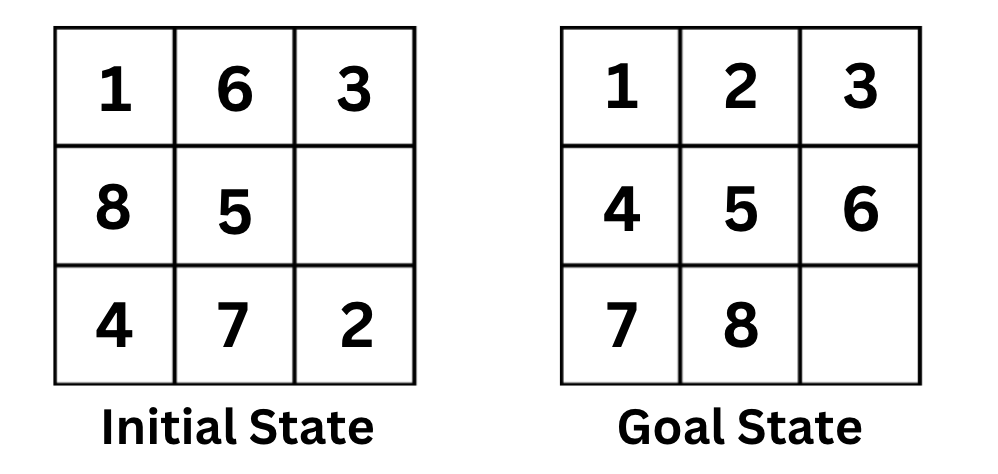

Exercise: The 8-Puzzle Problem

Now, it's your turn to model a search problem.

The 8-puzzle was invented and popularized by Noyes Palmer Chapman in the 1870s. It is played on a 3-by-3 grid with 8 square blocks labeled 1 through 8 and a blank square. Your goal is to rearrange the blocks so that they are in order. You are permitted to slide blocks horizontally or vertically into the blank square.

Take 10-15 minutes and try to model this problem. Describe the state space, initial state, goal test, actions, and transition model.

This is a crucial step in your learning process. Don't rush through it.

🧩 Unfold the solution below and match it with your solution.

Solution

State Space: The state space is all the possible configurations of the puzzle tiles. [[1, 3, 2], [6, 4, 7], [7, 8, None]] and [3, 1, 2], [6, 4, 7], [8, 7, None]] are two examples of states in the state space.

Initial State: The initial state is the starting configuration of the puzzle, which may initially be a scrambled arrangement of the tiles. For example, [[1, 3, 2], [6, 4, 7], [7, 8, None]].

Goal State: The goal state is the desired configuration where the tiles are arranged in ascending order [[1, 2, 3], [4, 5, 6], [7, 8, None]]. The Goal Test is a function that checks if the current state is the goal state.

Actions: Moving the blank tile up, down, left, or right.

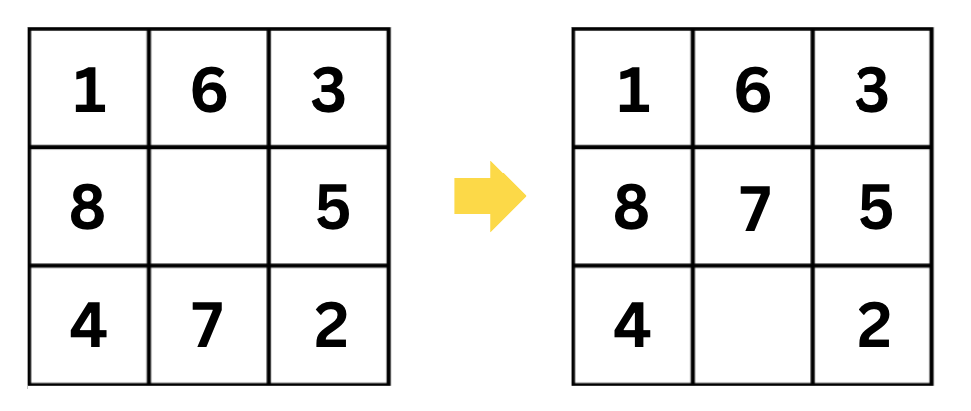

Transition Model: This function defines the outcome of applying an action to a given state, resulting in a new state. For example, if the current state is [[1, 6, 3], [8, None, 5], [4, 7, 2]] and the action is up, the new state will be [[1, 6, 3], [8, 7, 5], [4, None, 2]]

🎉 Congratulations!🎉

You have just completed modeling your first search problem!

State and State Space Representation

In any search problem, the method of representing states and state space significantly influences the efficiency of our search algorithms.

For example, in the car navigation problem, rather than using a tuple representation, we can opt for a graph representation. In this scenario, nodes symbolize specific car configurations, while edges illustrate permissible movements between them.

Likewise, in the 8-puzzle problem, a graph representation is applicable, where each node denotes a specific puzzle configuration, and the edges represent the feasible transitions between configurations.

Choosing a graph representation may provide the flexibility to utilize various graph algorithms, potentially optimizing the search process and improving problem-solving efficiency.

This concept can be expanded further by incorporating additional information into the representation, such as the estimated cost of reaching the goal from a particular state, to guide the search process and enhance the search algorithms' efficiency.

💡 Exploring different methods for representing states and state spaces is an interesting area of research. You could be the next person to devise an innovative representation of states and state spaces for a specific problem, thereby making your mark as a computer scientist in the field.

Can you think of other ways to represent states and state spaces for any of the problems discussed in this lesson?

💬 Discuss and share your thoughts with us here.

Self Assessment!

-

What is a search problem?

-

What are the components of a search problem?

-

Give a complete problem formulation for each of the following problems. Choose a formulation that is precise enough to be implemented.

-

There is an n×n grid of squares, each square initially being either an unpainted floor or a bottomless pit. You start standing on an unpainted floor square and can either paint the square under you or move onto an adjacent unpainted floor square. You want the whole floor painted.

-

Your goal is to navigate a robot out of a maze. The robot starts in the center of the maze facing north. You can turn the robot to face north, east, south, or west. You can direct the robot to move forward a certain distance, although it will stop before hitting a wall.

-

Unfold the sample solutions below to check your answers.

Sample Solutions

1. n×n grid of squares

State space: all possible configurations of the floor tiles and your position.

Initial state: all floor squares unpainted, you start standing on one square unpainted floor square. Actions: paint square, move to adjacent floor square.

Goal test: all floor squares painted.

Successor function: paint current tile, move to adjacent unpainted floor tile.

2. navigate a robot out of a maze

We’ll define the coordinate system so that the center of the maze is at (0, 0), and the maze itself is a square from (−1, −1) to (1, 1).

State space: all possible locations and directions of the robot.

Initial state: robot at coordinate (0, 0), facing North.

Actions: turn north, turn south, turn east, turn west, move forward.

Goal test: either |x| > 1 or |y| > 1 where (x, y) is the current location.

Successor function: move forwards any distance d; change direction robot it facing. Cost function: total distance moved.

The output of the program is:

A* Solution:

# some steps omitted

.....

Move up:

[1, 2, 3, 4]

[5, 6, 11, 7]

[9, 10, 0, 8]

[13, 14, 15, 12]

Move up:

[1, 2, 3, 4]

[5, 6, 0, 7]

[9, 10, 11, 8]

[13, 14, 15, 12]

Move right:

[1, 2, 3, 4]

[5, 6, 7, 0]

[9, 10, 11, 8]

[13, 14, 15, 12]

Move down:

[1, 2, 3, 4]

[5, 6, 7, 8]

[9, 10, 11, 0]

[13, 14, 15, 12]

Move down:

[1, 2, 3, 4]

[5, 6, 7, 8]

[9, 10, 11, 12]

[13, 14, 15, 0]

24

Comparing that with the number of steps taken by the greedy best-first search algorithm, we can see that the A* search algorithm found the optimal solution with 24 steps, while the greedy best-first search algorithm found a solution with 187 steps.

Variants of A* Search

A* (A-star) search is a widely used algorithm for finding the shortest path in a graph or solving optimization problems. It uses a heuristic to guide the search, making it more efficient than traditional uninformed search algorithms. There are different variations of A* search, each tailored to specific types of problems or computational constraints. Here are some notable variations:

Basic A* Search:

The standard A algorithm uses a combination of the cost to reach a node (g) and a heuristic estimate of the cost to reach the goal from that node (h). The evaluation function is f(n) = g(n) + h(n). A expands nodes with the lowest f(n) value.

Weighted A* Search:

Weighted A* introduces a weight factor to the heuristic function, influencing the balance between the cost to reach the node and the estimated cost to the goal. This can be useful for adjusting the algorithm's behavior, favoring either optimality or speed.

Bidirectional A* Search:

In bidirectional A* search, the search is performed simultaneously from both the start and goal states. The algorithm continues until the two searches meet in the middle. This approach can be more efficient for certain types of graphs, especially when the branching factor is high.

Real-Time A* Search:

Real-Time A* is an extension of A* designed for environments where computation time is limited. It uses an incremental approach, exploring nodes in the order of their estimated cost until the available time runs out, returning the best solution found.

These variations address different challenges and requirements, making A* adaptable to a wide range of problem domains and computational constraints. The choice of a specific variation depends on the characteristics of the problem, available resources, and the desired trade-offs between optimality and efficiency.

Summary

The A* search algorithm is an informed search algorithm that uses both the cost of the path and the heuristic function to guide its search. The A* search algorithm is guaranteed to find the optimal solution if the heuristic function is admissible and consistent.

More Heuristics

As discussed in our previous lessons, various heuristic functions can guide the search. We used the number of misplaced tiles as our heuristic function in the 15-puzzle example and the Manhattan distance in our car example. Here are a few more common heuristics with sample implementations for you to explore.

Euclidean Distance

The Euclidean distance is the straight-line distance between two points in Euclidean space. It is named after the ancient Greek mathematician Euclid, who introduced the concept of Euclidean space.

def euclidean_distance(board, goal_board):

distance = 0

for i in range(N * N):

if board[i] != 0: # Skip the empty tile

correct_pos = goal_board.index(board[i])

current_row, current_col = divmod(i, N)

correct_row, correct_col = divmod(correct_pos, N)

distance += math.sqrt((current_row - correct_row)**2 +

(current_col - correct_col)**2)

return distance

Hamming Distance

The Hamming distance is the number of positions at which two strings of equal length differ. It is named after the American mathematician Richard Hamming.

def hamming_distance(board, goal_board):

distance = 0

for i in range(N * N):

if board[i] != 0 and board[i] != goal_board[i]:

distance += 1

return distance

Manhattan Distance

The Manhattan distance is the sum of the horizontal and vertical distances between two points on a grid. It is named after the grid-like layout of the streets in Manhattan.

def manhattan_distance(board, goal_board):

distance = 0

for i in range(N * N):

if board[i] != 0: # Skip the empty tile

correct_pos = goal_board.index(board[i])

current_row, current_col = divmod(i, N)

correct_row, correct_col = divmod(correct_pos, N)

distance += abs(current_row - correct_row) + abs(current_col -

correct_col)

return distance

Linear Conflict

The linear conflict heuristic is a modification of the Manhattan distance heuristic. It is calculated by adding the number of moves required to resolve each linear conflict to the Manhattan distance.

A linear conflict occurs when two tiles are in their goal row or column, but are reversed relative to their goal positions. For example, if the tile 1 is in the second row and the tile 2 is in the first row, then they are in a linear conflict. To resolve this conflict, we need to move one of the tiles out of the way, so that the other tile can move into its correct position. This requires two moves, one for each tile.

def linear_conflict(board, goal_board):

conflict_count = 0

for i in range(N * N):

if board[i] != 0: # Skip the empty tile

correct_pos = goal_board.index(board[i])

current_row, current_col = divmod(i, N)

correct_row, correct_col = divmod(correct_pos, N)

if current_row == correct_row and current_col == correct_col:

continue

if current_row == correct_row:

for j in range(i + 1, N * N):

if board[j] != 0 and goal_board.index(board[j]) < correct_pos:

conflict_count += 1

if current_col == correct_col:

for j in range(i + N, N * N, N):

if board[j] != 0 and goal_board.index(board[j]) < correct_pos:

conflict_count += 1

return conflict_count * 2

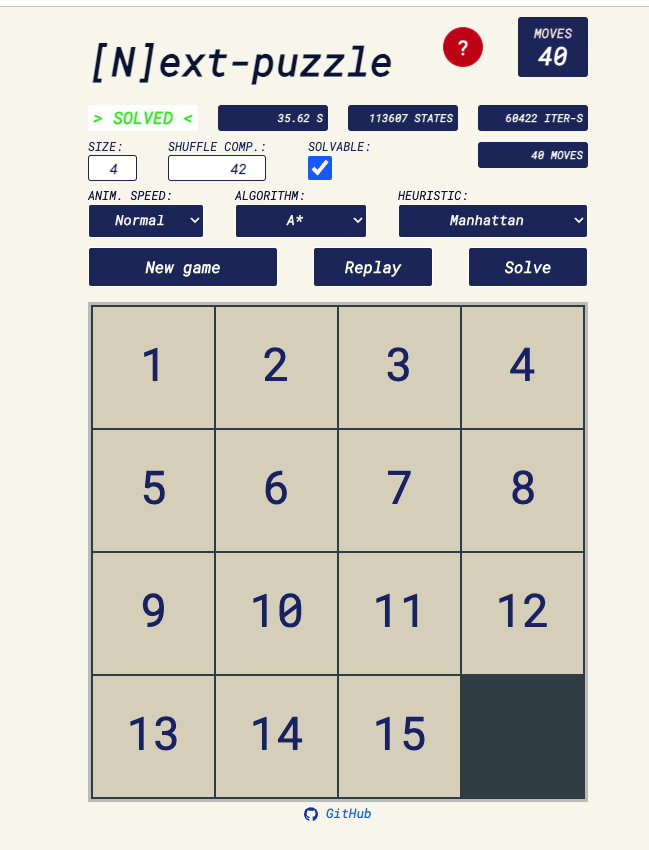

Online N-Puzzle Solver

Here is a cool online repository that solves the 15-puzzle problem using various algorithms and heuristics.

Summary

Heuristic functions are used to guide the search algorithm to find the optimal solution faster. A heuristic function is admissible if it never overestimates the cost of reaching the goal, and it is consistent if the estimated cost of reaching the goal from node n is less than or equal to the cost of reaching node n' from node n plus the estimated cost of reaching the goal from node n'.

Different problems require different heuristic functions. The design of an effective heuristic function is a challenging task and requires a good understanding of the problem. It significantly affects the performance of the search algorithm.

Quiz 1: Intelligence via Search

Before attempting the quiz, read the following carefully.

- This is a timed quiz. You will have only one attempt.

- You will have 40 minutes to complete multiple-choice questions.

- You should first study the lesson materials thoroughly. More specifically, you should learn about search problems, search problem formulation, tracing and running search algorithms, and heuristic functions.

➡️ Access the quiz on Gradescope here.

Week 1 Assignment: Shipping Route Finder

In this assignment, you will implement a shipping route finder program that utilizes the A* search algorithm to determine the shortest path between two shipping points.

➡️ Access the assignment and all details here.

Intelligence via Search II

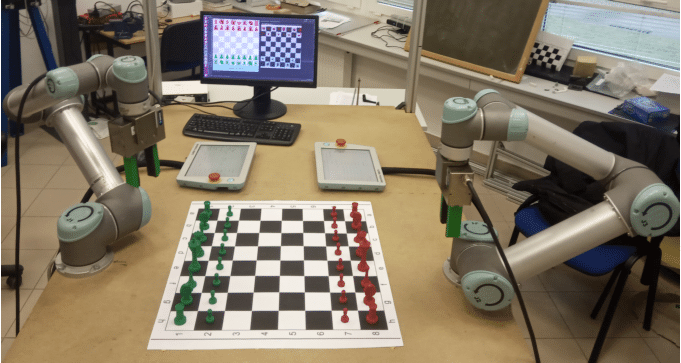

https://www.researchgate.net/figure/Collaborative-robot-system-for-playing-chess_fig4_345377838

Hello, and welcome to the second part of the "Intelligence via Search" lesson. In this lesson, we will continue to explore search algorithms and their applications in the field of artificial intelligence. More specifically, we will discuss game playing and how search algorithms can be used.

This Week's Work:

- Study the material and solve the practical exercises.

- Complete the quiz and coding assignment for this week.

- The coding assignment will involve building a tic-tac-toe game-playing agent using the minimax with alpha-beta pruning algorithm.

Upon completing this week's work, you will be able to:

- Explain the concept of game playing and its relevance to artificial intelligence.

- Describe the minimax algorithm and its application in game playing.

- Develop a game-playing agent capable of playing tic-tac-toe using the minimax algorithm.

Adversarial Search

So far, the problems we have examined have been single-agent problems. In other words, there is only one agent (such as a car or a player of a 15-puzzle game) attempting to solve the problem, such as finding a path to a goal point or rearranging the tiles to the correct order. However, in many problems, especially games, there are two agents trying to solve the problem, each one acting against the other. In this lesson, we will explore how to address such problems.

Adversarial Scenarios

In a pacman game, the pacman agent is trying to eat all the food pellets while avoiding the ghosts. The ghosts are trying to eat the pacman.

In a tic-tac-toe game, the two players are trying to get three of their pieces in a row before the other player does.

In trading, one trader is trying to buy low and sell high, while the other trader is trying to sell high and buy low.

These are all examples of adversarial scenarios. In each case, there are two agents trying to solve the same problem, but each one is acting against the other.

Solving Adversarial Search Problems

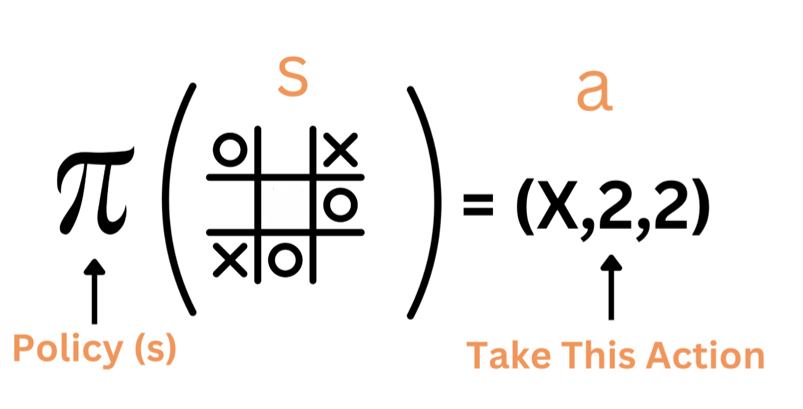

Let's dig deeper into how to solve adversarial search problems. Let's start with the tic-tac-toe game.

In a tic-tac-toe game like the one shown below, if you are the X player, what would be your next move and why?

I bet you chose to place X at position 5. Why? Because it is the move to prevent the O player from winning.

We know this because we are humans and we have played this game many times. But how can we teach a computer to play this game? Fortunately, scientists have come up with many strategies to solve it. One of these strategies is called the minimax algorithm. Let's explore it next.

Minimax Algorithm

The minimax algorithm is a strategy used in decision making, particularly in game theory and artificial intelligence, for minimizing the possible loss while maximizing the potential gain. It's often applied in two-player games like chess or tic-tac-toe. Here's how it works:

In a game, there are two players. One is the maximizer, who tries to get the highest score possible, while the other is the minimizer, who tries to do the opposite and minimize the score.

In a tic-tac-toe game, the maximizer for example is the player who has the X symbol and the minimizer is the player who has the O symbol. When X makes a move, it tries to maximize the score, while O tries to minimize it.

Considering the 3 possible outcomes of the game, X can either win, lose, or draw. If X wins, it gets a score of 1. If X loses, it gets a score of -1. If the game ends in a draw, both players get a score of 0.

To illustrate this, let's consider the game shown below.

It is player O's turn to make a move. O has two possible moves, either place O at position 1 or position 8. The algorithm will look ahead and examine the consequences of each possible move.

If O places its symbol at position 1, the player X will put its symbol at position 8, leading to a game score of 1 (X wins).

If O places its symbol at position 8, the player X will put its symbol at position 1 leading to a game score of 0 (tie).

Because O is a minimizer, it will choose the move that minimizes the score so the minimizer O will choose to place O at position 8.

Utility Values

The scores 1, 0, and -1 are called utility values. They are used to represent the outcome of a particular game state. A score of 1 typically indicates a win for the player whose turn it is to move. A score of 0 often represents a draw or a neutral outcome. A score of -1 usually denotes a loss for the player whose turn it is to move. You can use other numbers to represent the outcome of a game state. For example, you can use 10 to represent a win, 0 to represent a draw, and -10 to represent a loss but the convention is to use 1, 0, and -1.

Many Possible States

In an early stage of the game, there are many possible moves. Each move leads to a different game state. Before each move, the algorithm will look ahead and examine the consequences of each possible move. It will assume that the other player is also playing optimally. (trying to maximize its score). The algorithm will compute the score of each possible move and return the move with the highest score.

Recursive Algorithm

You might have guessed that the algorithm is recursive. It will keep looking ahead until it reaches a terminal state (a state where one of the players wins or the game is a tie).

Watch this video to learn more about the minimax algorithm.

Minimax Tree Traversal Exercise

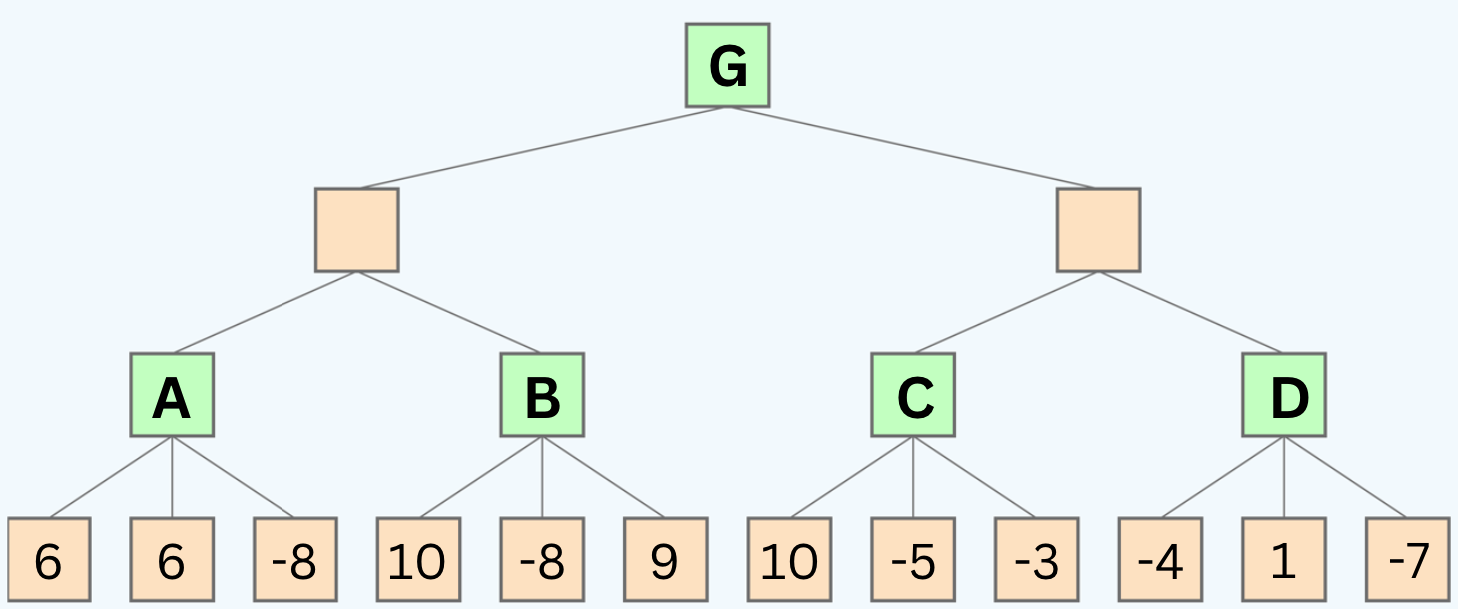

In the below two-ply game tree:

-

Which move should the maximizer choose to maximize its score? 1 or 2?

-

What is the minimizer's best move if the maximizer chooses move 1? 3, 4, 5, or 6

-

What is the minimizer's best move if the maximizer chooses move 2? 7, 8, 9, or 10

Answer

-

We will first examine move #1. If the maximizer chooses move #1, the minimizer, in the following move, will choose between move #3,#4, #5, and #6 terminal states. Because the states are terminal, the algorithm will return the score of each one as given in the picture. Because the minimizer is trying to minimize the score, it will choose move #3 because it has a lower score of 99. Therfore, the score the maximizer will get if it chooses move #1 is 99.

-

Then we examine move #2. If the maximizer chooses move #2, the minimizer will choose between move #7, #8, #9, and #10. Because the minimizer is trying to minimize the score, it will choose move #7 or move #10 because they have the lowest score. Let's go with move #7. So the resulting score of move #2 is 100.

-

Between move #1 and move #2, the maximizer will choose move #2 because it has a higher score. Therefore, the score of move #1 is 100.

Minimax Pseudocode

function mini_max(state, is_maximizer_turn):

if state is terminal

return utility(state)

if state is maximizer's turn

max_value = -infinity

for each action of available_actions(state)

eval = mini_max(result(state,action)) # recursive call

max_value = max(max_value, eval)

return max_value

if state is minimizer's turn

min_value = +infinity

for each action of available_actions(state)

eval = mini_max(result(state,action)) # recursive call

min_value = min(min_value, eval)

return min_value

Here is a sample implementation in Python for the above minimax algorithm.

def minimax(board, is_maximizer_turn):

# recursive terminal condition

is_terminal = is_terminal_state(board)

if is_terminal:

return evaluate_utility(board)

# else, keep expanding

if is_maximizer_turn:

best = float('-inf')

for i in range(BOARD_SIZE):

for j in range(BOARD_SIZE):

if board[i][j] == EMPTY_CELL: # the empty cell is a potential move

board[i][j] = 'O' # next player is 'O'

score = minimax(board, not is_maximizer_turn) # it's the minimizer's turn

best = max(best, score ) # get the max score of all possible moves

board[i][j] = EMPTY_CELL # Undo the move to keep the board unchanged

return best

else:

best = float('inf')

for i in range(BOARD_SIZE):

for j in range(BOARD_SIZE):

if board[i][j] == EMPTY_CELL: #the empty cell is a potential move

board[i][j] = 'X' #next player is 'X'

score = minimax(board, not is_maximizer_turn)

best = min(best, score) # get the minimum score of all possible moves

board[i][j] = EMPTY_CELL

return best

Tick-Tac-Toe AI

You may recall the below example of a tic-tac-toe game from your Programming 1 class.

print("Welcome to Tic-Tac-Toe")

print("Here is our playing board:")

# Constants

EMPTY_CELL = ' '

BOARD_SIZE = 3

# The play board

play_board = [[EMPTY_CELL for _ in range(BOARD_SIZE)]

for _ in range(BOARD_SIZE)]

def evaluate_utility(board):

# Checking for Rows for X or O victory.

for row in range(BOARD_SIZE):

if board[row][0] == board[row][1] == board[row][2]:

if board[row][0] == 'O':

return 1

elif board[row][0] == 'X':

return -1

# Checking for Columns for X or O victory.

for col in range(BOARD_SIZE):

if board[0][col] == board[1][col] == board[2][col]:

if board[0][col] == 'O':

return 1

elif board[0][col] == 'X':

return -1

# Checking for Diagonals for X or O victory.

if board[0][0] == board[1][1] == board[2][2]:

if board[0][0] == 'O':

return 1

elif board[0][0] == 'X':

return -1

if board[0][2] == board[1][1] == board[2][0]:

if board[0][2] == 'O':

return 1

elif board[0][2] == 'X':

return -1

# Else if none of them have won then return 0

return 0

def is_terminal_state(board):

for i in range(BOARD_SIZE):

for j in range(BOARD_SIZE):

if board[i][j] == EMPTY_CELL:

return False

return True

# Prints the board

def print_board():

print(" 1 2 3")

for i in range(BOARD_SIZE):

print(i + 1, end=" ")

for j in range(BOARD_SIZE):

print("[" + play_board[i][j] + "]",

end="") # print elements without new line

print() # print empty line after each row

print('--------------')

# Check for a win

def check_win():

for i in range(BOARD_SIZE):

# Check all rows and columns

if play_board[i][0] == play_board[i][1] == play_board[i][2] != EMPTY_CELL:

return play_board[i][0]

if play_board[0][i] == play_board[1][i] == play_board[2][i] != EMPTY_CELL:

return play_board[0][i]

# Check diagonals

if play_board[0][0] == play_board[1][1] == play_board[2][2] != EMPTY_CELL:

return play_board[1][1]

if play_board[2][0] == play_board[1][1] == play_board[0][2] != EMPTY_CELL:

return play_board[1][1]

return None

# Check for a full board

def is_board_full():

return all(EMPTY_CELL not in row for row in play_board)

print_board()

def get_player_input(player):

while True:

try:

position = input(f'{player}, Enter play position (i.e. 1,1): ')

x, y = map(int, position.split(','))

if x in range(1, BOARD_SIZE + 1) and y in range(1, BOARD_SIZE + 1):

return x, y

else:

print(

"Invalid input. Please enter row and column numbers between 1 and 3."

)

except ValueError:

print(

"Invalid input. Please enter row and column numbers between 1 and 3.")

def play(player, row, col):

if play_board[row-1][col-1] == EMPTY_CELL:

play_board[row-1][col-1] = player

print_board()

return True

else:

print("Position is not empty. Please try again.")

return False

while True:

# Player X's turn

while True:

pos_x, pos_y = get_player_input('X')

if play('X', pos_x, pos_y): # False for not zero_based

break

winner = check_win()

if winner:

print(f"{winner} wins")

break

if is_board_full():

print("The game is a tie!")

break

# AI (Player O's) turn

pos_O_x, pos_O_y = get_player_input('O')

play('O', pos_O_x, pos_O_y) # AI uses zero-based indexing

winner = check_win()

if winner:

print(f"{winner} wins")

break

if is_board_full():

print("The game is a tie!")

break

Let's add the AI logic to the game

Tic-Tac-Toe AI

print("Welcome to Tic-Tac-Toe")

print("Here is our playing board:")

# Constants

EMPTY_CELL = ' '

BOARD_SIZE = 3

# The play board

play_board = [[EMPTY_CELL for _ in range(BOARD_SIZE)]

for _ in range(BOARD_SIZE)]

def evaluate(board):

# Checking for Rows for X or O victory.

for row in range(BOARD_SIZE):

if board[row][0] == board[row][1] == board[row][2]:

if board[row][0] == 'O':

return 1

elif board[row][0] == 'X':

return -1

# Checking for Columns for X or O victory.

for col in range(BOARD_SIZE):

if board[0][col] == board[1][col] == board[2][col]:

if board[0][col] == 'O':

return 1

elif board[0][col] == 'X':

return -1

# Checking for Diagonals for X or O victory.

if board[0][0] == board[1][1] == board[2][2]:

if board[0][0] == 'O':

return 1

elif board[0][0] == 'X':

return -1

if board[0][2] == board[1][1] == board[2][0]:

if board[0][2] == 'O':

return 1

elif board[0][2] == 'X':

return -1

# Else if none of them have won then return 0

return 0

def minimax(board, depth, is_maximizing):

score = evaluate(board)

if score == 1: # AI wins

return score

if score == -1: # Player wins

return score

if is_board_full(): # Tie

return 0

if is_maximizing:

best = -1000

for i in range(BOARD_SIZE):

for j in range(BOARD_SIZE):

if board[i][j] == EMPTY_CELL:

board[i][j] = 'O' # Assuming AI is 'O'

best = max(best, minimax(board, depth + 1, not is_maximizing))

board[i][j] = EMPTY_CELL

return best

else:

best = 1000

for i in range(BOARD_SIZE):

for j in range(BOARD_SIZE):

if board[i][j] == EMPTY_CELL:

board[i][j] = 'X' # Assuming human is 'X'

best = min(best, minimax(board, depth + 1, not is_maximizing))

board[i][j] = EMPTY_CELL

return best

def find_best_move(board):

best_val = -1000

best_move = (-1, -1)

for i in range(BOARD_SIZE):

for j in range(BOARD_SIZE):

if board[i][j] == EMPTY_CELL:

board[i][j] = 'O' # AI makes a move

move_val = minimax(board, 0, False)

board[i][j] = EMPTY_CELL # Undo the move

if move_val > best_val:

best_move = (i, j)

best_val = move_val

return best_move

# Prints the board

def print_board():

print(" 1 2 3")

for i in range(BOARD_SIZE):

print(i + 1, end=" ")

for j in range(BOARD_SIZE):

print("[" + play_board[i][j] + "]",

end="") # print elements without new line

print() # print empty line after each row

print('--------------')

# Check for a win

def check_win():

for i in range(BOARD_SIZE):

# Check all rows and columns

if play_board[i][0] == play_board[i][1] == play_board[i][2] != EMPTY_CELL:

return play_board[i][0]

if play_board[0][i] == play_board[1][i] == play_board[2][i] != EMPTY_CELL:

return play_board[0][i]

# Check diagonals

if play_board[0][0] == play_board[1][1] == play_board[2][2] != EMPTY_CELL:

return play_board[1][1]

if play_board[2][0] == play_board[1][1] == play_board[0][2] != EMPTY_CELL:

return play_board[1][1]

return None

# Check for a full board

def is_board_full():

return all(EMPTY_CELL not in row for row in play_board)

print_board()

def get_player_input(player):

while True:

try:

position = input(f'{player}, Enter play position (i.e. 1,1): ')

x, y = map(int, position.split(','))

if x in range(1, BOARD_SIZE + 1) and y in range(1, BOARD_SIZE + 1):

return x, y

else:

print(

"Invalid input. Please enter row and column numbers between 1 and 3."

)

except ValueError:

print(

"Invalid input. Please enter row and column numbers between 1 and 3."

)

def play(player, row, col, zero_based=True):

if zero_based:

if play_board[row][col] == EMPTY_CELL:

play_board[row][col] = player

print_board()

return True

else:

print("Position is not empty. Please try again.")

return False

else:

return play(player, row - 1, col - 1, True)

while True:

# Player X's turn

while True:

pos_x, pos_y = get_player_input('X')

if play('X', pos_x, pos_y, False): # False for not zero_based

break

winner = check_win()

if winner:

print(f"{winner} wins")

break

if is_board_full():

print("The game is a tie!")

break

# AI (Player O's) turn

pos_O_x, pos_O_y = find_best_move(play_board)

play('O', pos_O_x, pos_O_y) # AI uses zero-based indexing

winner = check_win()

if winner:

print(f"{winner} wins")

break

if is_board_full():

print("The game is a tie!")

break

Congratulations!🎉

You've taken your first step into the gaming world by learning how to integrate intelligence into games. Though it's a modest beginning, it's an excellent one. We'll expand upon this knowledge and enhance our AI in the upcoming lessons.

Test your understanding & Challenge

- What is an adversarial search problem? How is it different from a single-agent search problem?

- CS50 AI course has a tic-tac-toe project. Try to implement it.

Eager for more?

If you find yourself in need of further explanations or additional examples, here are some additional resources to assist you:

Optimization Techniques

By now, I hope you've developed a strong awareness of the necessity to optimize our algorithms. Optimization isn't just a desirable feature in most AI problems; it's often a must. Without it, your solution may simply be unfeasible – it won't work!

We've witnessed the limitations of our breadth-first search and depth-first search algorithms when faced with the 15-puzzle problem, underscoring the pressing need for optimization.

Too Slow to be Practical

While MiniMax stands out as a great algorithm for adversarial search, it's just too slow to be practical in its basic form for many games. Our tic-tac-toe game is too small for the difference to be noticeable, but if we were to play a game of chess, we'd be waiting a very long time for the computer to make a move. The number of all possible games in tic-tac-toe is 9! = 362,880, which is a very small number compared to chess for example which has a game tree of 10^120 states.

1) Minimax with Alpha-Beta Pruning

Alpha-beta pruning is a way of finding the optimal minimax solution while avoiding searching subtrees of moves which won't be selected.

It is named alpha-beta pruning because it introduces two additional parameters, alpha and beta, into the minimax function. Alpha is the best value that the maximizer currently can guarantee at that level or above. Beta is the best value that the minimizer currently can guarantee at that level or above. These values are used to prune the tree (More examples and explanation below).

Heads up! Your assignment will include implementing the minimax algorithm with alpha-beta pruning to create a game-playing agent for tic-tac-toe.

Watch this video [updated] on alpha-beta pruning with an example:

Quiz Question:

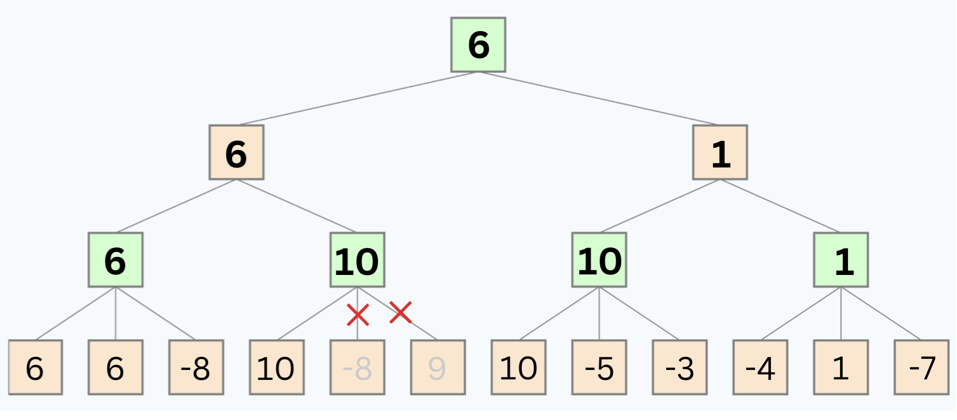

Use the following game tree to answer the following questions:

Questions:

- 1: If we use alpha-beta pruning, what are the values of the nodes labeled A, B, C, and G?

- 2: If we use alpha-beta pruning, which nodes will be pruned?

Note: that the tree is 4 levels deep, not 3. So from top to bottom, the player alternates between MAX and MIN 4 times.

Take your time to trace the algorithm on the above tree before seeing the staff solution below. Remember, this is a crucial step in your learning process. Don't rush through it.

🧩 Unfold the solution below and match it with your solution.

Solution

- 1: After running the whole algorithm, the values of the nodes are:

A = 6 , B = 10, C = 10, D = 1, G = 6

- 2: The nodes that will be pruned are: -8 , 9

2) Depth-Limiting

Another optimization technique is depth-limiting. Depth-limiting is a way of limiting the depth of the search tree. In other words, we can stop searching after a certain number of moves. This is a very simple technique, but it can be very effective.

In fact, it is used in many games, including chess. In chess, the depth limit is usually set to 3 or 4 moves. This is because the number of possible moves in chess is so large that it is not practical to search the entire tree. Applying depth-limiting requires an evaluation function to estimate the utility of a state when the depth limit is reached.

Heuristic Evaluation Functions

A heuristic evaluation function is a function that estimates the utility of a state without doing a complete search (without looking at all of the possible moves). Suppose that, due to limited computational resources, we can only reach a depth of 3 in our search tree. In this case, we need an evaluation function to estimate the utility of the states at that depth level.

Examples of Heuristics:

In chess, a simple heuristic is to count the total value of pieces on the board for each player. Each piece is assigned a value (e.g., pawn = 1, knight = 3, rook = 5, etc.), and the player with a higher total value is generally considered to be in a better position. This heuristic helps to evaluate the board's utility without exploring every possible future move.

In pathfinding problems, such as finding the shortest path in a maze, a common heuristic is the straight-line distance from the current position to the goal. This heuristic helps to prioritize which paths to explore first, even without searching the entire maze.

In games like Connect Four, a heuristic could be the number of three-in-a-row combinations each player has, as these are one step away from a winning four-in-a-row. This helps to estimate the utility of a board state by highlighting positions close to winning, without needing to explore every game continuation.

We can use any of these heuristics to estimate the utility of a state at a given depth without further exploring the tree. The better the heuristic, the better the estimate.

Watch this video to learn more about these optimization techniques:

If you need more explanation, watch these 2 videos:

More Optimization Techniques

Besides the above optimization techniques, there are many other techniques that can be used to improve the performance of our algorithms. Here are some of them:

Dynamic Programming: Breaks the problem down into simpler sub-problems and stores the results of these sub-problems to avoid redundant calculations. This is particularly useful for problems exhibiting overlapping subproblems and optimal substructure.

Iterative Deepening: Combines the space efficiency of depth-first search with the completeness of breadth-first search. It incrementally increases the depth limit until the goal is found.

Simulated Annealing: An optimization technique that tries to avoid getting stuck in local optima by allowing less optimal moves in the early stages of the search.

Beam Search: A heuristic search algorithm that is a combination of BFS and DFS but limits the number of children expanded at each level, keeping only a predetermined number of best nodes at each level.

Branch and Bound: Used in optimization problems, this technique systematically enumerates candidate solutions by splitting them into smaller subsets (branching) and using bounds to eliminate suboptimal solutions.

Bidirectional Search: This method runs two simultaneous searches—one forward from the initial state and the other backward from the goal—hoping that the two searches meet in the middle. This can dramatically reduce the search space.

Parallelization: This technique involves running multiple searches in parallel. This can be done by running multiple searches on different processors or by running multiple searches on the same processor using multithreading.

And many more...

Summary

- Optimization is a crucial part of AI. Without it, our algorithms may be too slow to be practical.

- Alpha-beta pruning is a way of finding the optimal minimax solution while avoiding searching subtrees of moves which won't be selected.

- Depth-limiting is a way of limiting the depth of the search tree. In other words, we can stop searching after a certain number of moves. This is a very simple technique, but it can be very effective.

- A heuristic evaluation function is a function that estimates the utility of a state without doing a complete search ( without looking at all of the possible moves). It works well with depth-limiting.

- Beside the above optimization techniques, there are many other techniques that can be used to improve the performance of our algorithms including: Dynamic Programming, Iterative Deepening, Simulated Annealing, Beam Search, Branch and Bound, Bidirectional Search, Parallelization, and many more...

Practice Quiz

Q1. What is the main purpose of alpha-beta pruning in the minimax algorithm?

- A) To increase the number of nodes in the search tree.

- B) To find the optimal minimax solution while avoiding unnecessary subtree searches.

- C) To evaluate heuristic functions.

- D) To implement depth-first search.

Q2. In the context of chess, why is depth-limiting used as an optimization technique?

- A) Because chess requires a complete search of the game tree.

- B) To limit the depth of the search tree due to the large number of possible moves in chess.

- C) To enhance the efficiency of the minimax algorithm.

- D) To calculate the exact number of moves ahead.

Q3. Which of the following is a better heuristic evaluation function in chess?

- A) Counting the total number of moves made by a player.

- B) Calculating the straight-line distance to the king.

- C) Counting the total value of pieces on the board for each player.

- D) The number of possible checkmates in two moves.

Q4. Which optimization technique combines the space efficiency of DFS with the completeness of BFS?

- A) Dynamic Programming

- B) Iterative Deepening

- C) Simulated Annealing

- D) Beam Search

Q5. What is a unique feature of bidirectional search compared to other search techniques?

- A) It involves breaking the problem down into simpler sub-problems.

- B) It uses a heuristic to estimate the utility of a state.

- C) It runs two simultaneous searches, one forward from the initial state and the other backward from the goal.

- D) It limits the depth of the search tree to a specific number of moves.

Please try the quiz first before jumping into the solution below.

Answer Key

- Q1. B) To find the optimal minimax solution while avoiding unnecessary subtree searches.

- Q2. B) To limit the depth of the search tree due to the large number of possible moves in chess.

- Q3. C) Counting the total value of pieces on the board for each player.

- Q4. B) Iterative Deepening

- Q5. C) It runs two simultaneous searches, one forward from the initial state and the other backward from the goal.

Game Theory

Game theory, a fascinating branch of mathematics with widespread applications in various disciplines, offers a systematic framework for analyzing strategic interactions among rational decision-makers. Originating from economics, game theory has evolved to become a crucial tool in fields such as political science, biology, sociology, and artificial intelligence. At its core, game theory investigates how individuals, referred to as players, make decisions when their outcomes depend not only on their own choices but also on the actions of others. It explores scenarios where conflicting interests or cooperation shape the dynamics, emphasizing the interplay of strategies and the resulting outcomes.

Notable concepts within game theory include Nash equilibrium, where no player has an incentive to change their strategy unilaterally, and the distinction between zero-sum and non-zero-sum games, where the sum of gains and losses is either constant or variable. The pervasive influence of game theory underscores its significance in unraveling the complexities of decision-making, offering valuable insights into human behavior and strategic reasoning across a spectrum of real-world scenarios.

Optional: Watch this video on game theory:

Game Playing in AI

The evolution of game-playing artificial intelligence (AI) has marked significant milestones in the realm of computational achievement.

In 1950, the world witnessed the emergence of the first computer player for checkers.

The year 1994 saw a historic moment when the computer program Chinook became the first-ever computer champion, ending the remarkable 40-year reign of human champion Marion Tinsley. Utilizing a complete 8-piece endgame, Chinook's victory marked a pivotal moment in the intersection of artificial intelligence and board games.

The landmark event continued in 2007 when checkers, as a game, was declared solved by computational methods.

Chess, a game long regarded as the pinnacle of intellectual prowess, witnessed a groundbreaking event in 1997 when Deep Blue defeated human champion Gary Kasparov in a six-game match. Deep Blue's computational capabilities were awe-inspiring, examining 200 million positions per second and employing sophisticated evaluation methods, some undisclosed, to extend search lines up to 40 ply.

Deep Blue computer beats world chess champion – archive, 1996

Deep Blue computer beats world chess champion – archive, 1996

Fast forward to 2016, and the game of Go experienced a revolution with AlphaGo defeating a human opponent. AlphaGo's success was attributed to its utilization of Monte Carlo Tree Search and a learned evaluation function.

These triumphs in game-playing AI underscore the instrumental role of games in tracking the progress of artificial intelligence, offering tangible benchmarks for advancements in computational abilities and strategic decision-making.

Different Types of Games

Deterministic games are those in which the outcome is fully determined by the moves of the players. Games like chess, checkers, and Go are deterministic.

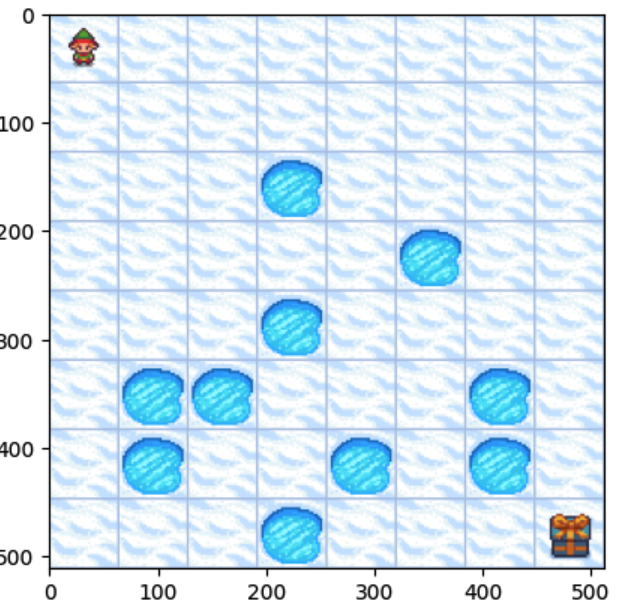

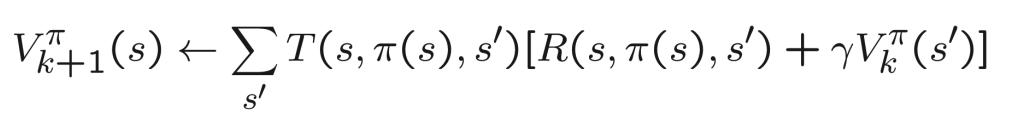

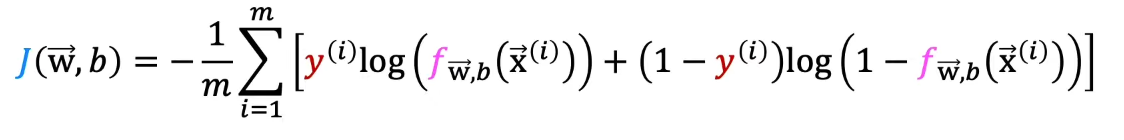

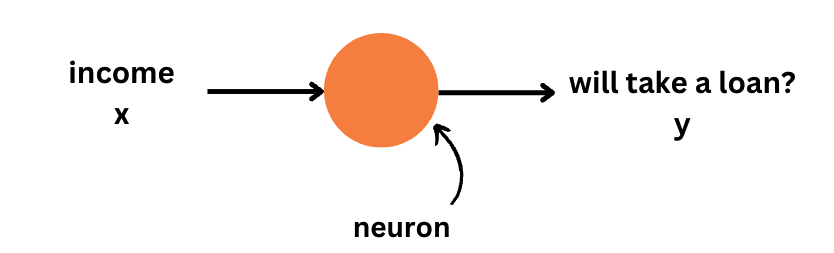

Stochastic games are those in which chance or randomness is involved in the outcome of the game. Games like backgammon, snakes and ladders, and Monopoly are stochastic.