Introduction to Data Science

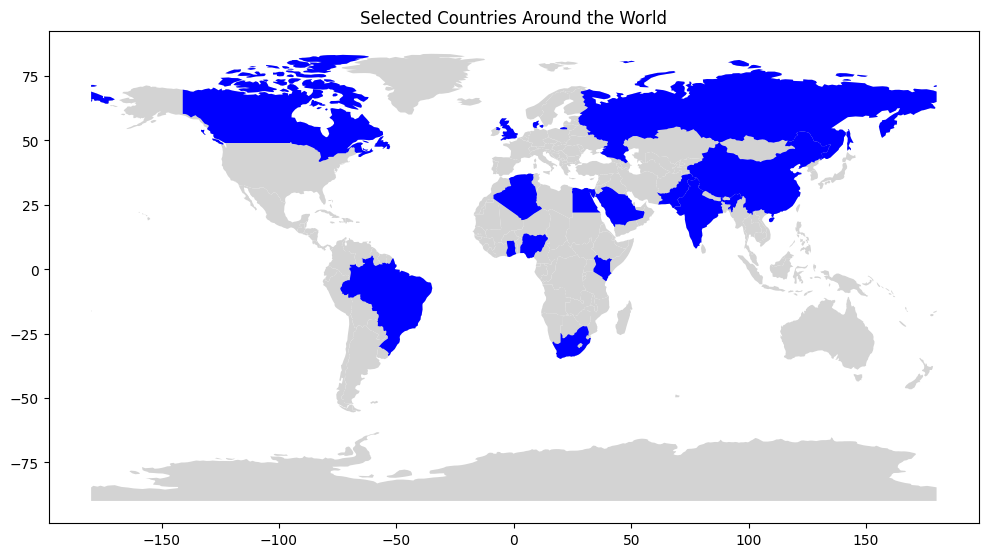

Welcome to Intro to Data Science! You are joining a global learning community dedicated to helping you learn and thrive in data science.

Course Description

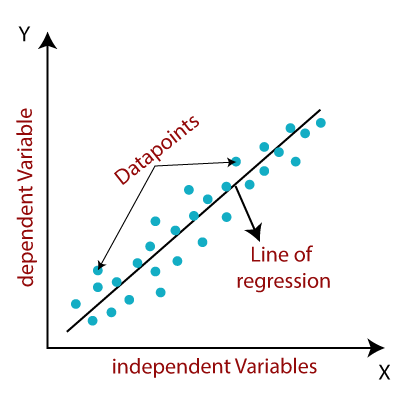

Data science is applicable to a myriad of professions, and analyzing large amounts of data is a common application of computer science. This course empowers students to analyze data, and produce data-driven insights. It covers the foundational suite of concepts needed to solve data problems, including preparation (collection and processing), presentation (information visualization), and analysis (statistical and machine learning).

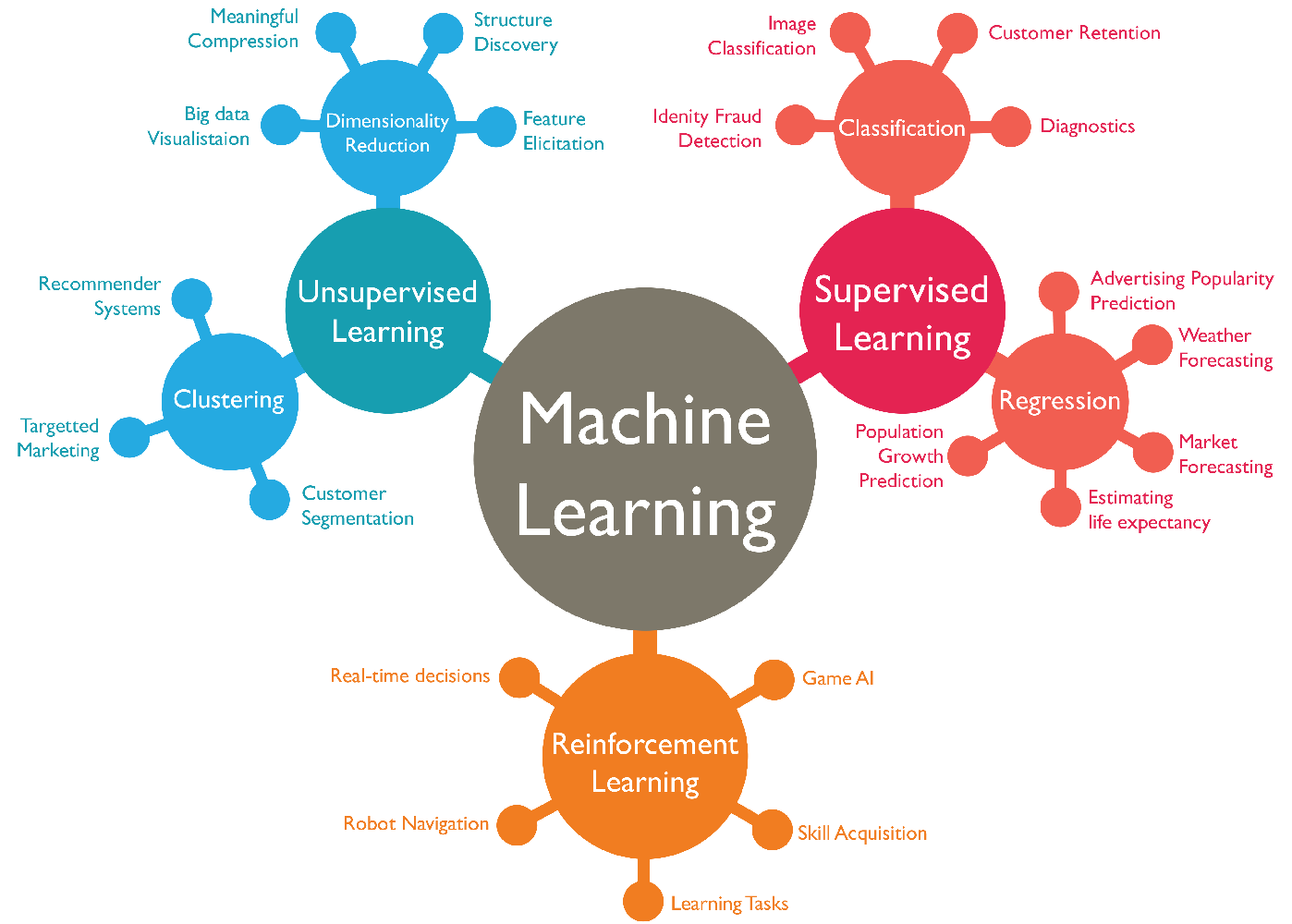

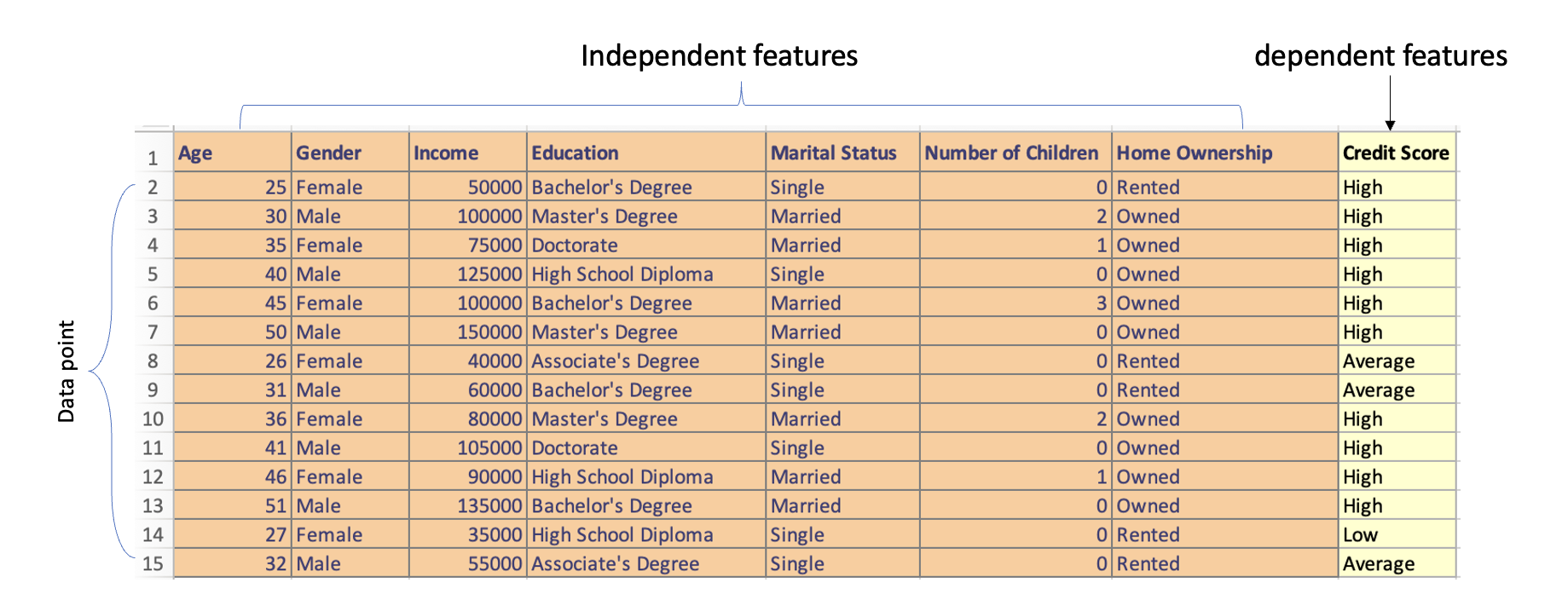

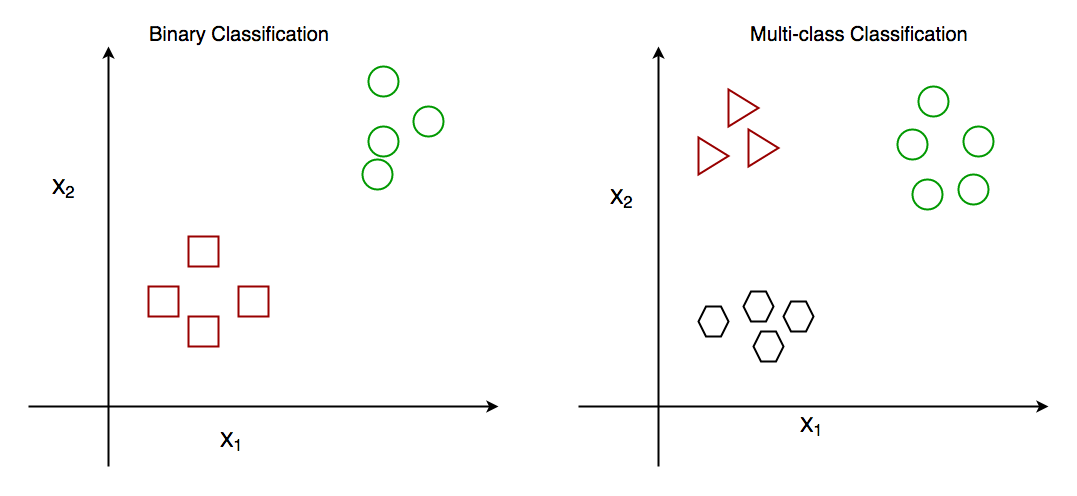

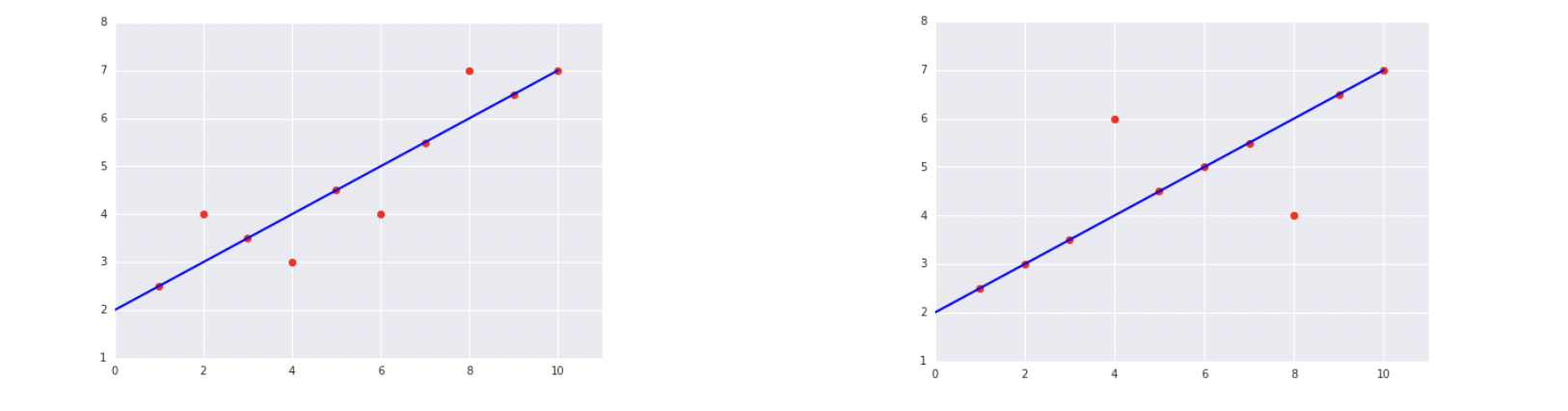

Data analysis requires acquiring and cleaning data from various sources including the web, APIs, and databases. As a student, you will learn techniques for summarizing and exploring data with tools like Spreadsheets, Google Colab, and Pandas. Similarly, you'll learn how to create data visualizations using Power BI and Seaborn, and practice communication with data. Likewise, you'll be introduced to machine learning techniques of prediction and classification, and explore Natural Language Processing (NLP). Lastly, you'll learn the fundamentals of deep learning, which will prepare you for advanced study of data science.

Throughout the course, you will work with real datasets and attempt to answer questions relevant to real-life problems.

Course Objectives

At the end of the course, student should be able to:

- Explain the basics of data science, its relevance, and applications in 21st century.

- Describe various data collection and cleaning techniques, using necessary tools.

- Apply different visualization tools to generate insights that drive business decisions.

- Demonstrate understanding of machine learning concepts, and its application to real-world problems.

Instructor

- Name: Wasiu Yusuf

- Email: wasiu.yusuf@kibo.school

Live Class Time

Note: all times are shown in GMT.

- Wednesday at 3:00 PM - 4:30 PM GMT

Office Hours

- Thursday at 2:00 - 3:00 PM GMT

Live Classes

Each week, you will have a live class (see course overview for time). You are required to attend the live class sessions.

Video recordings and resources for the class will be posted after the classes each week. If you have technical difficulties or are occasionally unable to attend the live class, please be sure to watch the recording as quickly as possible so that you do not fall behind.

| Week | Topic | Slides | Live Class |

|---|---|---|---|

| 1 | Intro to Data Science | Slide_1 | Video_1 |

| 2 | Data Collection and Cleaning | Slide_2 | Video_2 |

| 3 | Data Visualization & Insight | Slide_3 | Video_3 |

| 4 | Exploratory Data Analysis | Slide_4 | Video_4 |

| 5 | Feature Engineering | Slide_5 | Video_5 |

| 6 | Intro to Machine Learning | Slide_6 | Video_6 |

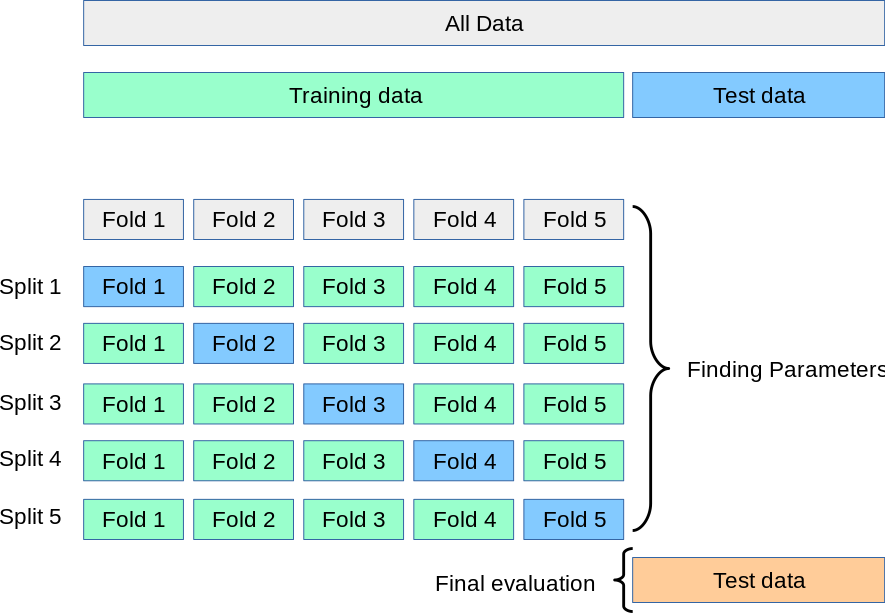

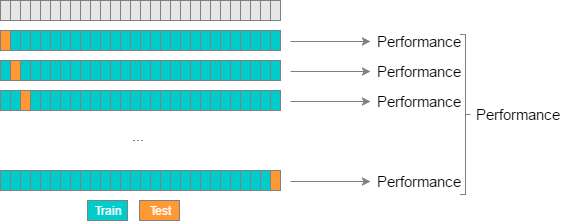

| 7 | Model Evaluation Techniques | Slide_7 | Video_7 |

| 8 | Natural Labguage Processing | Slide_8 | Video_8 |

| 9 | Deep Learning Fundamentals | Slide_9 | Video_9 |

| 10 | Final Project Week | NIL | NIL |

If you miss a class, review the slides and recording of the class and submit the activity or exercise as required.

Assessments

Your overall course grade is made up of the following components:

- Practice Exercises: 18%

- Weekly assignments: 42%

- Midterm Project: 15%

- Final Project: 25%

Practice Exercises

Each week, there are activities in the lessons and practice exercises at the end of the lesson. Learning takes lots of practice, so you should complete all of these practice activities. Some of the practice exercises must be submitted, though you will not get quick feedback on your work unless you reach out on Discord or to the instructor directly (perhaps via office hours) for feedback. The purpose of the practices if for you to apply what you are learning and prove to yourself that you understand the concepts. It is very easy to convince yourself that you understand something when the correct answer is sitting right in front of you. By doing the exercises, you will be able to determine if you truly understand the material.

Practice tips

- It's good to look at other solutions, but only after you've tried solving a problem. If you come up with a solution that works, try to notice how someone else solved the same problem, and what you might do to revise your solution.

- It can be good to try solving the same problem a second time, after some days or weeks have passed. Has the problem gotten easier, now that you have solved it before?

- It's fun to solve problems with friends. If you have a solution you really like, you can share it with the squad or community. Remember to use spoiler tags so that you don't ruin the problem in case someone else wants to try it and this is only for practice exercises that are not graded.

- Practice should be challenging, but you shouldn't spend hours stuck on a problem without making progress. If you are stuck, take a break, ask for help, try another problem, and return to the problem later.

- Take a break! It's often helpful to walk around, drink water, eat a bite of food, then return to a problem refreshed. Some problems that seem impossible become very easy when approached with a fresh mind.

Weekly Assignments

Most weeks, you'll have a assignment to complete, usually as an individual, though it will be specified within the assignment description if you can work in a team. The assignment will bring together the skills you learn that week with the skills that you learned in prior weeks. The course topics and assignments build upon each other during the term. It is critically important that you stay caught up and complete all the assignments. If you skip an assignment, you will be at a disadvantage in future assignments.

On weeks that you have projects (midterm or final), you will not have an assignment to complete.

Projects

Approximately midway through the term and during the last two weeks of the term, you will be given a project. These projects are summative in nature--this is your opportunity to demonstrate to the instructor that you understand what you are doing. Note that these two projects make up a significant percentage of your final grade, so it is critical that you begin the projects early.

Getting Help

If you have any trouble understanding the concepts or stuck on a problem, we expect you to reach out for help!

Below are the different ways to get help in this class.

Discord Channel

The first place to go is always the course's help channel on Discord. Share your question there so that your Instructor and your peers can help as soon as we can. Peers should jump in and help answer questions (see the Getting and Giving Help sections for some guidelines).

Message your Instructor on Discord

If your question doesn't get resolved within 24 hours on Discord, you can reach out to your instructor directly via Discord DM or Email.

Office Hours

There will be weekly office hours with your Instructor and your TA. Please make use of them!

Tips on Asking Good Questions

Asking effective questions is a crucial skill for any computer science student. Here are some guidelines to help structure questions effectively:

-

Be Specific:

- Clearly state the problem or concept you're struggling with.

- Avoid vague or broad questions. The more specific you are, the easier it is for others to help.

-

Provide Context:

- Include relevant details about your environment, programming language, tools, and any error messages you're encountering.

- Explain what you're trying to achieve and any steps you've already taken to solve the problem.

-

Show Your Work:

- If your question involves code, provide a minimal, complete, verifiable, and reproducible example (a "MCVE") that demonstrates the issue.

- Highlight the specific lines or sections where you believe the problem lies.

-

Highlight Error Messages:

- If you're getting error messages, include them in your question. Understanding the error is often crucial to finding a solution.

-

Research First:

- Demonstrate that you've made an effort to solve the problem on your own. Share what you've found in your research and explain why it didn't fully solve your issue.

-

Use Clear Language:

- Clearly articulate your question. Avoid jargon or overly technical terms if you're unsure of their meaning.

- Proofread your question to ensure it's grammatically correct and easy to understand.

-

Be Patient and Respectful:

- Be patient while waiting for a response.

- Show gratitude when someone helps you, and be open to feedback.

-

Ask for Understanding, Not Just Solutions:

- Instead of just asking for the solution, try to understand the underlying concepts. This will help you learn and become more self-sufficient in problem-solving.

-

Provide Updates:

- If you make progress or find a solution on your own, share it with those who are helping you. It not only shows gratitude but also helps others who might have a similar issue.

Remember, effective communication is key to getting the help you need both in school and professionally. Following these guidelines will not only help you in receiving quality assistance but will also contribute to a positive and collaborative community experience.

Screenshots

It’s often helpful to include a screenshot with your question. Here’s how:

- Windows: press the Windows key + Print Screen key

- the screenshot will be saved to the Pictures > Screenshots folder

- alternatively: press the Windows key + Shift + S to open the snipping tool

- Mac: press the Command key + Shift key + 4

- it will save to your desktop, and show as a thumbnail

Giving Help

Providing help to peers in a way that fosters learning and collaboration while maintaining academic integrity is crucial. Here are some guidelines that a computer science university student can follow:

-

Understand University Policies: Familiarize yourself with Kibo's Academic Honesty and Integrity Policy. This policy is designed to protect the value of your degree, which is ultimately determined by the ability of our graduates to apply their knowledge and skills to develop high quality solutions to challenging problems--not their grades!

-

Encourage Independent Learning: Rather than giving direct answers, guide your peers to resources, references, or methodologies that can help them solve the problem on their own. Encourage them to understand the concepts rather than just finding the correct solution. Work through examples that are different from the assignments or practice problems provide in the course to demonstrate the concepts.

-

Collaborate, Don't Complete: Collaborate on ideas and concepts, but avoid completing assignments or projects for others. Provide suggestions, share insights, and discuss approaches without doing the work for them or showing your work to them.

-

Set Boundaries: Make it clear that you're willing to help with understanding concepts and problem-solving, but you won't assist in any activity that violates academic integrity policies.

-

Use Group Study Sessions: Participate in group study sessions where everyone can contribute and learn together. This way, ideas are shared, but each individual is responsible for their own understanding and work.

-

Be Mindful of Collaboration Tools: If using collaboration tools like version control systems or shared documents, make sure that contributions are clear and well-documented. Clearly delineate individual contributions to avoid confusion.

-

Refer to Resources: Direct your peers to relevant textbooks, online resources, or documentation. Learning to find and use resources is an essential skill, and guiding them toward these materials can be immensely helpful both in the moment and your career.

-

Ask Probing Questions: Instead of providing direct answers, ask questions that guide your peers to think critically about the problem. This helps them develop problem-solving skills.

-

Be Transparent: If you're unsure about the appropriateness of your assistance, it's better to seek guidance from professors or teaching assistants. Be transparent about the level of help you're providing.

-

Promote Honesty: Encourage your peers to take pride in their work and to be honest about the level of help they received. Acknowledging assistance is a key aspect of academic integrity.

Remember, the goal is to create an environment where students can learn from each other (after, we are better together) while we develop our individual skills and understanding of the subject matter.

Academic Integrity

When you turn in any work that is graded, you are representing that the work is your own. Copying work from another student or from an online resource (including generative AI tools like ChatGPT) and submitting it is plagiarism.

As a reminder of Kibo's academic honesty and integrity policy: Any student found to be committing academic misconduct will be subject to disciplinary action including dismissal.

Disciplinary action may include:

- Failing the assignment

- Failing the course

- Dismissal from Kibo

For more information about what counts as plagiarism and tips for working with integrity, review the "What is Plagiarism?" Video and Slides.

The full Kibo policy on Academic Honesty and Integrity Policy is available here.

Course Tools

In this course, we are using these tools to work on code. If you haven't set up your laptop and installed the software yet, follow the guide in https://github.com/kiboschool/setup-guides.

- GitHub is a website that hosts code. We'll use it as a place to keep our project and assignment code.

- GitHub Classroom is a tool for assigning individual and team projects on Github.

- Google Colab is your code editor. It's where you'll write code to analyze your dataset.

- Chrome is a web browser we'll use to access Google Colab and other online resources. Other browsers may have similar features, but the course is designed to be completed using Chrome.

- Anchor is Kibo's Learning Management System (LMS). You will access your course content through this website, track your progress, and see your grades through this site.

- Gradescope is a grading platform. We'll use it to track assignment submissions and give you feedback on your work.

- Woolf is our accreditation partner. We'll track work there too, so that you get credit towards your degree.

Core Reading

The following materials were key references when this course was developed. Students are encouraged to use these materials to supplement their understanding or to diver deeper into course topics throughout the term.

- Adhikari, A., DeNero J. (2020). Computational and Inferential Thinking: The Foundations of Data Science

- Aggarwal R., Ranganathan P., (2017). Common pitfalls in statistical analysis. NCBI

- Luciano, F., Mariarosaria T., (2016). What is data ethics?. Royal Society Publishing

Supplemental Reading

This course references the following materials. Students are encouraged to use these materials to supplement their understanding or to diver deeper into course topics throughout the term.

- Hamel G. (2020). Python for Data Analysis Playlist

- Datacamp.com. Pandas Cheat Sheet

Intro to Data Science

Welcome to week 1 of the Intro to data science course 🤝

This week, we will explore the fundamental concepts and techniques used in data science. We will start by understanding what data science is and its importance in today's world. We will then dive into the data science building blocks and workflows. Next, we will learn about data types and spreadsheet softwares. Furthermore, you'll learn how to use Microdoft Excel to explore, manipulate, clean, and visualize sample dataset. Finally, you'll be introduced to some data science tools.

Whatever your prior expereince, this week you'll touch on basics of data science and the tools you'll be using. You'll also start practising learning and working together. The internet is social, and technologists build it together. So, that's what you'll learn to do too.

Learning Outcomes.

After this week, you will be able to:

- Explain the basics and building blocks of data science.

- Describe different data types used in data science.

- Apply different data cleaning techniques on messy dataset.

- Generate and visualize data with Microsoft Excel.

An overview of this week's lesson

Intro to Data Science

Data is the new electricity- Satya Nadella

We live in a time where huge amount of data are generated every second through website visit, social media likes and posts, online purchase, gaming, and online movie streaming among others. With an estimated 2.5 quintillion bytes of data generated each day, it is now inevitable for individuals and businesses to strategize on ways to derive valuable insight from this huge data lying around.

Now that you have an idea about the data boom, let’s look at what data science is all about.

What is Data Science?

In summary...

- Data science is an multidisciplinary field that involves the processes, tools, and techniques needed to uncover insight from raw data.

- Data science plays a critical role in enabling businesses to leverage their data assets and stay competitive in today's data-driven economy.

Now that you have an idea of what data science is, let understand why data science is important, and its role in businesses.

Data science in today's business

Given its significance in modern-day organizations...

- data science holds crucial importance to decision making and business success.

- there is a growing need for professionals who are equipped with data science skills... could that be you?

Who is a data scientist?

As an important part of every business, the role of a data scientist includes the following:

-

Collecting, processing, and analyzing data to identify patterns and insights that inform decision-making processes.

-

Developing predictive models that can be used to forecast future trends or outcomes based on historical data.

-

Creating data visualizations that make complex data sets easy to understand and communicate to stakeholders.

-

Collaborating with cross-functional teams to identify business problems and opportunities that can be addressed using data-driven insights.

-

Developing and deploying machine learning algorithms and other advanced analytical techniques to solve complex problems and generate insights.

-

Ensuring the accuracy, integrity, and security of data throughout the data lifecycle.

-

Staying up-to-date with the latest trends and tools in data science, and continuously improving skills and knowledge through ongoing learning and development.

👩🏾🎨 Practice: Data and Businesses

- Why is data science important for businesses?

- Highlight 2 things a data scientist doesn't do in an organization.

Answer these questions in the padlett below.

https://padlet.com/curriculumpad/data-and-businesses

👉🏾 In the next section, we'll explore the building blocks and typical workflow of data science.

🛃 Building blocks and Workflow

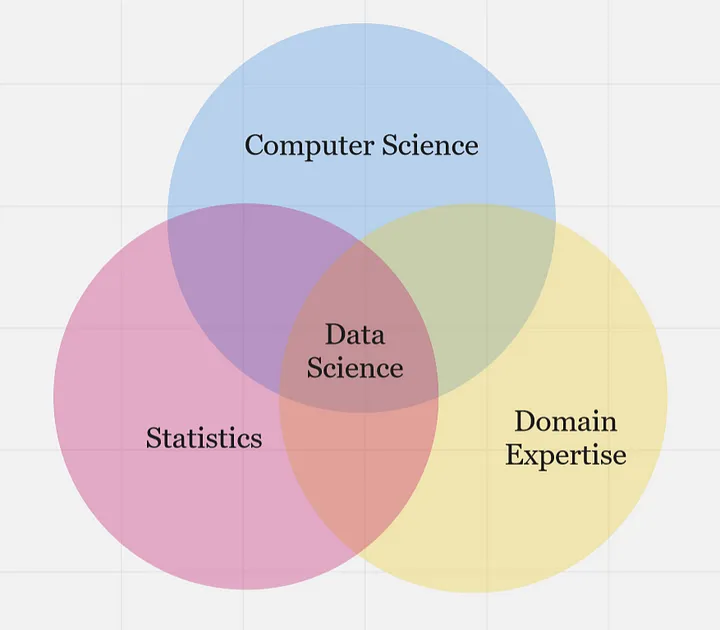

Building blocks

Previously, we described data science as a multidisciplinary field. At the high level, data science is typically an intersection of 3 core areas - statistics, computer science, and domain expertise. Altogether, these three areas form the building blocks of data science, allowing practitioners to collect, process, analyze, and visualize data in a way that generates valuable insights and informs decision-making processes in various industries and domains.

...statistics, computer science, and domain knowledge are all essential components of data science, and each plays a critical role in the data science process as highlighted below.

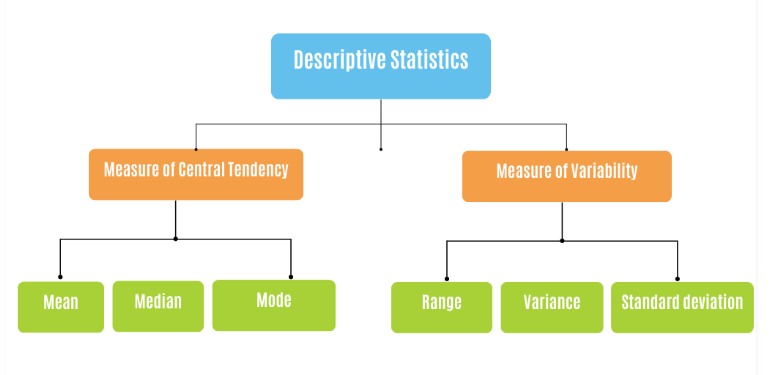

- Statistics - provides the foundational concepts and methods for collecting, analyzing, and interpreting data. This is essential for understanding the data itself, including identifying patterns, testing hypotheses, and making predictions.

- Computer Science - provides the computational and programming tools needed to manipulate, process, and visualize data at scale, such as tools and infrastructure necessary to work with data at scale. This includes programming languages like Python and R, as well as tools like SQL, Hadoop, and Spark.

- Domain Expertise - refers to expertise in a specific field or industry, which is critical for understanding the context of the data being analyzed and generating insights that are relevant and useful. Domain knowledge is particularly important in fields like healthcare, finance, and engineering, where specialized knowledge is required to make informed decisions based on data.

Overall, data science building blocks are an intersection of statistical methods, computer science tools, and domain knowledge, which are used together to extract insights and generate value from data. Now, how does a typical data science project looks like while using this building blocks?

Data science workflow

Each phase includes different dependent tasks and activities needed to achieve the overall goal of the project. Overall, the workflow serve as guidelines throughout the project life cycle. A typical end-to-end journey of a sample data science project using this workflow is explained in the next video.

In summary, a typical data science project workflows includes;

-

Problem formulation: involves work with stakeholders to clearly define the problem they are trying to solve, identify the key objectives, and develop a plan for data-driven decision-making.

-

Data collection: This involves obtaining data from various sources, including databases, APIs, and web scraping.

-

Data Preparation: This involves cleaning, transforming, and structuring data in a way that is suitable for analysis.

-

Exploratory Data Analysis (EDA): This involves exploring and analyzing data using statistical and machine learning techniques to identify patterns and trends.

-

Data Modelling: This involves using algorithms to develop predictive models that can be used to make informed decisions based on data.

-

Visualization and Communications: This involves creating visual representations of data to communicate insights and findings to stakeholders.

Throughout the entire data science workflow, data scientists need to collaborate closely with stakeholders, communicate their findings clearly, and continuously refine their methods and models based on feedback and new insights. In subsequent weeks, we'll be diving into each of the phases in the data science workflow.

Practice: Draw your building block

👩🏾🎨 Draw your version of the data science building blocks. Some ideas to include in your image: statistics, computer science, and domain expertise.

- Draw using whatever tool you like (such as paper, tldraw, or the built-in Padlet draw tool)

- Take a screenshot, a phone picture, or export the image if you use a drawing tool

- Upload the image to the Padlet (click the + button in the bottom-right, then add your image)

- You can also choose to Draw from the Padlet "more" menu.

👉🏾 Next, we'll look at the role of data in decision-making, and understand different data categories.

Data Types

What is data?

Data is increasing rapidly due to several factors...

- rise of digital technologies

- growing use of the internet and social media

- increasing number of devices and sensors that generate data.

In fact, it is estimated that the amount of data generated worldwide will reach 180 zettabytes by 2025, up from just 4.4 zettabytes in 2013. This explosion of data presents both opportunities and challenges for data scientists, who must find ways to extract insights and value from this vast and complex data landscape.

👩🏾🎨 ...Data is the new electricity in town...

Just as electricity transformed industries such as manufacturing, transportation, and communications, data is transforming modern-day businesses and organizations across various domains. Currently, it is being generated and consumed globally at an unprecedented rate, and it has become a valuable resource that drives innovation, growth, and competitiveness. Consequently, we now live in the era of big data.

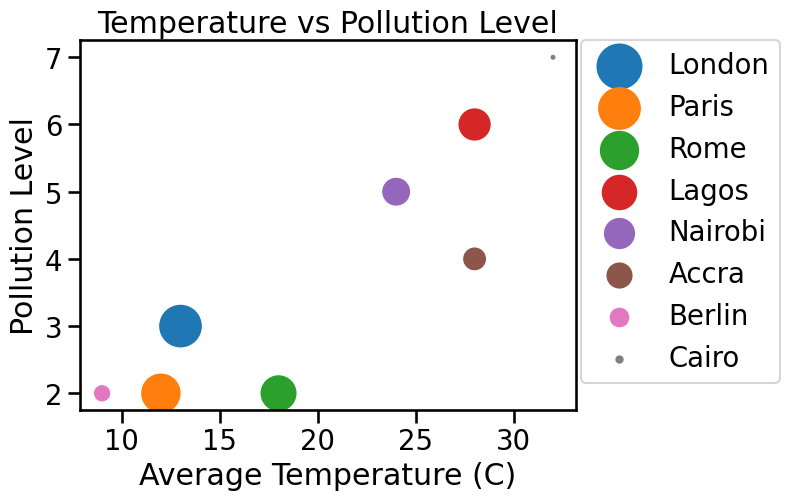

Data Types

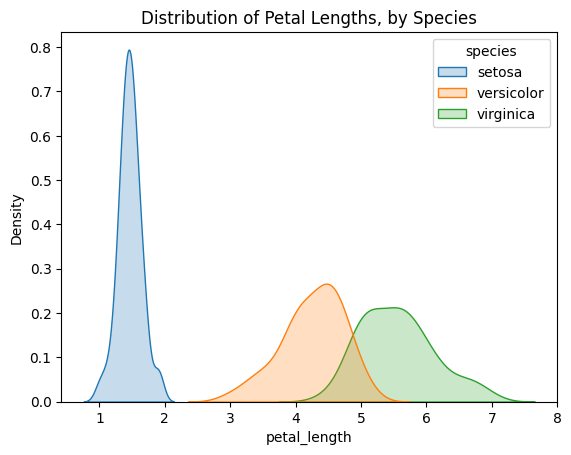

The data we have today are in different forms such as social media likes and posts, online purchase, gaming, business transactions, and online movie streaming among others. Understanding the types of data that you are working with is essential in ensuring that you are using the appropriate methods to analyze and manipulate it. Data types refer to the classification or categorization of variables based on the nature of the data they represent. Common data types are represented in the image below.

These data types are essential for understanding the characteristics and properties of the data and determining appropriate analysis techniques. Let's take a look at each of this data tpes...

-

Numerical Data: This includes any data that can be represented by numbers, such as height, weight, temperature, or time.

-

Categorical Data: This includes data that falls into categories or groups, such as gender, race, or occupation.

-

Text Data: This includes any data in the form of written or spoken language, such as customer reviews, social media posts, or news articles.

-

Time Series Data: This includes data that is collected over time, such as stock prices, weather patterns, or website traffic.

-

Spatial Data: This includes data that is associated with a specific location or geographic area, such as GPS coordinates or city population.

-

Image and Video Data: This includes any data in the form of digital images or videos, such as satellite imagery, medical scans, or security footage.

-

Graph and Network Data: This includes data that is organized in the form of nodes and edges, such as social networks or transportation networks.

-

Sensor Data: This includes data collected from sensors, such as pollution sensors, traffic sensors, temperature sensors, pressure sensors, or motion sensors.

-

Transactional Data: This includes data associated with business transactions, such as sales data, customer orders, or financial transactions.

Note: Sometimes, it is required to convert from one data type to another before analysis or visualization. This conversion is part of data wrangling or data preprocessing.

👩🏾🎨 Practice: Data type taxonomies

With your knowledge of data and different data types, check your understanding by attempting the foling questions:

-

Group the following sample data into their suitable data types.

- age

- income

- GPS coordinates

- maps

- product type

- stock prices

- web traffic

- moview reviews

- ethnicity

-

Do you think any of the sample data should be in more than one category?

👉🏾 In the next section, we'll look at data science tools and explore some sample dataset.

🔢 Data and Spreadsheets

As a multidisciplinary field, data science uses myriads of tools for different tasks within the phases of the data science workflow, and we'll explore some of these tools in this course. In this section, we'll start by looking at spreadsheets, and further explore a popular spreadsheet software - Microsoft Excel. To start with, let understand what we mean by spreadsheets and why we need them as data scientist.

What are spreadsheets?

Spreadsheets are softwares that allows a user to capture, organize, and manipulate data represeted in rows and columns. They are often designed to hold numeric and short text data types. Today, there are many spreadsheet programs out there which can be used locally on your PC or online through your browsers. They provide different features to ease data manipulation as shown below.

Benefits of spreadsheets

- Ease of use - Spreadsheets are widely used and familiar to many people, making them easy to use.

- Data organization - Spreadsheets provide a structured way to organize data, making it easier to sort, filter, and analyze datasets.

- Data analysis - Spreadsheets provide a range of functions and formulas that allow for basic data analysis, such as summing, averaging, and finding min/max values.

- Collaboration - Spreadsheets can be easily shared and edited by multiple users, making them a useful tool for collaboration and teamwork.

- Cost-effective - Many spreadsheet programs are available for free or at a low cost, making them an affordable option for data analysis.

Overall, spreadsheets are a useful tool for data science tasks, particularly for tasks that involve organizing, manipulating, and analyzing data on a smaller scale. However, for more complex data analysis tasks or larger datasets, specialized software tools and/or programming languages may be required.

How can i use spreadsheet?

Popular spreadsheet softwares currently available includes Microsoft Excel, Apple Numbers, LibreOffice, OpenOffice, Smartsheet, and Zoho Sheet among others. However, Microsoft Excel is the most popular within the data science communities. For this week, we'll be using Microsoft Excel.

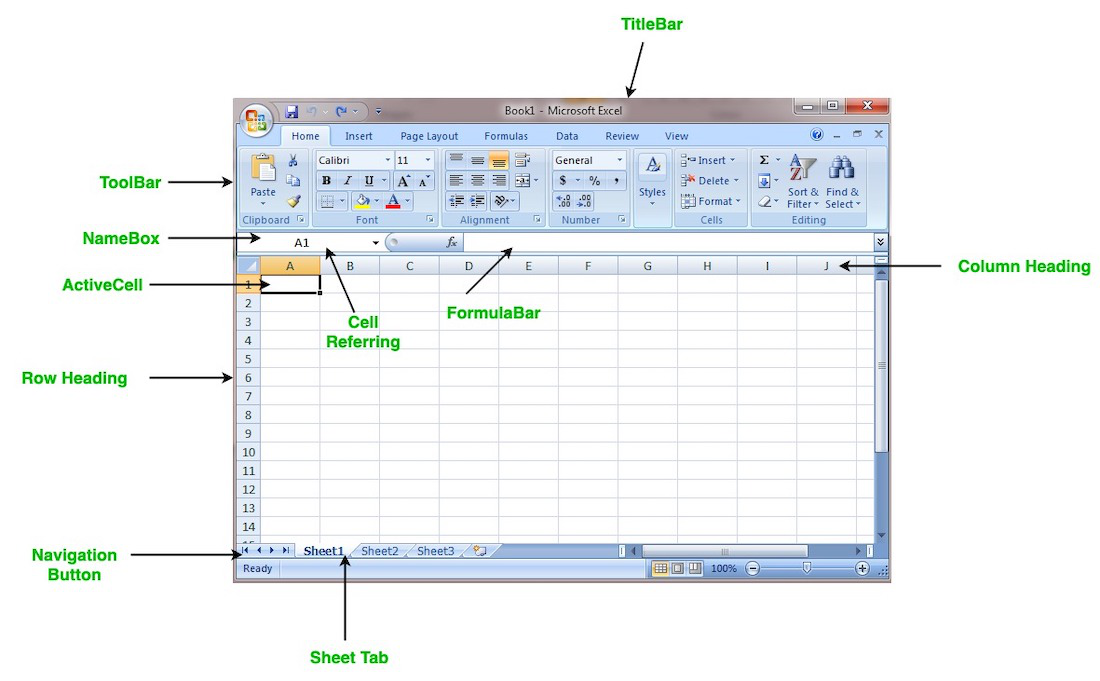

A brief recap of Microsoft Excel...

- Microsoft Excel is a free spreadsheet program create by

Microsoft. - By default, it comes pre-installed as part of your operating system.

- To create a new sheet, launch your Excel app locally or use Office365.

- select a blank workbook or use prdefined templates.

- Enter your data in rows and columns across the worksheet.

- Microsoft Excel app doesn't automatically save your work, unless you configure it to do so.

- There are predefined

built-infunctions to help you with basic and complex arithmetics. Some basic ones are;- AVG - finds the average of a range of cells

- SUM - adds up a range of cells

- MIN - finds the minimum of a range of cells

- MAX - finds the maximum of a range of cells

- COUNT - counts the values in a range of cells

Next, we'll explore a sample dataset using Microsoft Excel. As we've learnt in the previous video, you can have more than one worksheet in a workbook. In this sample dataset, we have 3 worksheets with different dataset.

- corona_virus - official daily counts of COVID-19 cases, deaths and vaccine utilisation.

- movies - contains information about movies, including their names, release dates, user ratings, genres, overviews, and others.

- emissions - contains information about methane gas emissions globally.

➡️ In the next section, we'll introduce you to

data cleaning🎯.

♻️ Data Cleaning

In data science, unclean data refers to a dataset that contains errors, inconsistencies, or inaccuracies, making it unsuitable for analysis without preprocessing. Such data may have missing values, duplicate entries, incorrect formatting, inconsistent naming conventions, outliers, or other issues that can impact the quality and reliability of the data. These problems can arise from various sources, such as...

Cleaning the data involves identifying and addressing these issues to ensure that the dataset is accurate, complete, and reliable before further analysis or modeling takes place.

Data cleaning with Excel

In Excel, data cleaning can involve tasks such as removing duplicate values, correcting misspellings, handling missing data by filling in or deleting the values, and formatting data appropriately. Excel provides us with various built-in functions and tools, such as filters, conditional formatting, and formulas, that can help with data cleaning tasks.

When we carry out data cleaning in Excel, we can improve the quality of our datasets and ensure that the data is ready for further analysis or visualization. To have a good understanding of how to clean a dataset using Microsoft Excel...

- Watch the next video 📺.

- Pause and practice along with the tutor.

A brief recap of data cleaning using Excel...

In the video above, we have covered the following techniques in data cleaning

- Separating Text - separating multiple text in a column into different cells.

- Removing Duplicates - removing duplicate data with

unique()formula andreplacefeature. - Letter cases - using

proper()to remove inconsistent capital letters. - Spacing fixes - removing spacing with

trim()formula. - Splitting text - flash fill to automatically separate data such as city and country

- Percentage formats - changing numbers to percentages

- Text to Number - text to values for further calculations

- Removing Blank Cells - removing blank cells from a dataset.

👩🏾🎨 Practice: Clean the smell... 🎯

A smaller sample of the global COVID-19 dataset is provided here for this exrcise.

- Create a copy of the dataset for your own use.

- Explore the dataset to have a sense of what the it represent.

- By leveraging your data cleaning skills, attempt the following...

- Remove duplicate data if exist

- Handle blank space

- Convert the column from text to number

- Implement other cleaning techniques of your choice

- Submit this exercise using this form.

👉🏾 Next, we'll deep dive into creating cool visualization with Excel.

Data visualization

Rather than looking at rows and columns of numbers or text, imagine using colors, shapes, and patterns to represent information in a visual way. This makes it much simpler for our brains to process and interpret the data, thereby helping us understand information and data more easily. With visualizations, we can see trends, patterns, and relationships that might not be apparent in raw data. Then how can we visualize our data?

Visualization tools

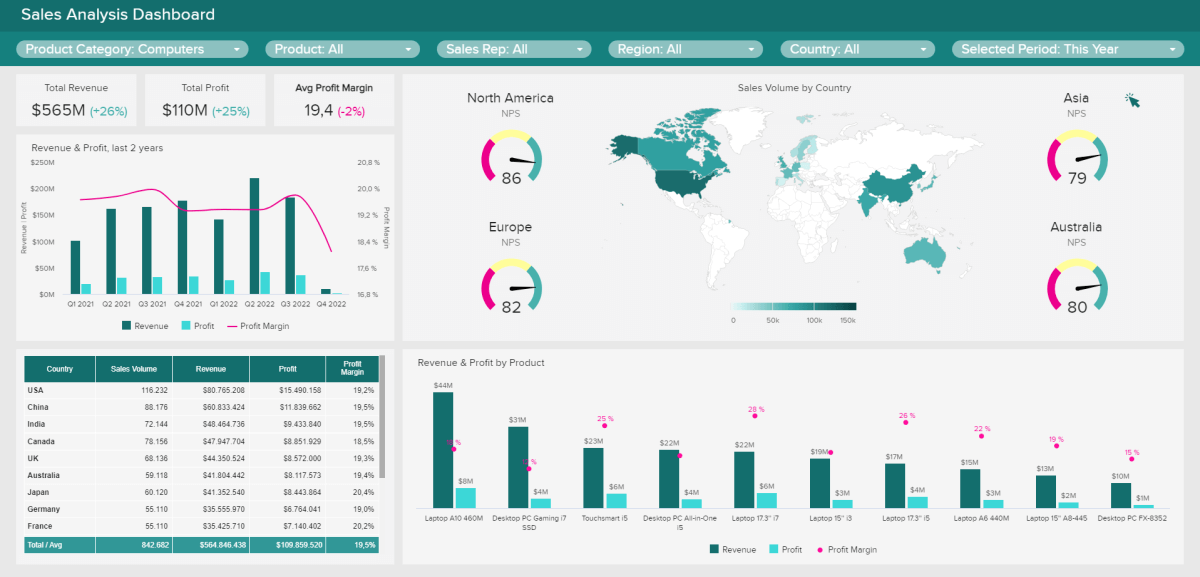

Data visualization tools are software programs that we can use to create visual representations of data in an easy and interactive way. They provide a user-friendly interface where we can input our data and choose from various charts, graphs, and other visual elements to display the information visually. For instance, Excel allows us to create simple charts and graphs directly from spreadsheet data.

Power BI, Tableau, Seaborn, and Matplotlib are more advanced tools that offer a wider range of customization options and advanced visualization techniques. For example, Tableau enables us to create interactive dashboards and explore data from multiple perspectives. Seaborn and Matplotlib are Python libraries that provide extensive options for creating complex and aesthetically pleasing visualizations. In this lesson, you'll only learn data visualization using Excel. Other tools will be explored in week 4.

Visualization with Excel

Data visualization using Excel allows us to present data in a visual and easy-to-understand way, even for people without technical expertise. Imagine you have a spreadsheet full of numbers and information. With Excel's charting and graphing features, you can transform those numbers into colorful and meaningful visual representations. For example, you can create bar charts to compare different categories, line graphs to track trends over time, or pie charts to show proportions. These visualizations help us see patterns, relationships, and insights that might be hidden in rows and columns of data.

By presenting information visually, Excel makes it easier for us to grasp and interpret the data, enabling better decision-making and communication. Visualizations also make it easier to share and communicate information with others, as it provides a clear and intuitive way to present complex data. Whether it's in business, science, or everyday life, data visualization helps us make better decisions and gain insights from the vast amounts of information around us.

👩🏾🎨 Practice: The Pandemic 🎯

Using the COVID-19 dataset you cleaned in the last practice exercise, create visualizations that provides information about the COVID-19 pandemic.

- Explore the dataset to have a sense of what it represent.

- Create visuals as you deemed fit. No answer is wrong!

- Share your visualization using this padlet.

- You can like other cool visuals on the padlet as well.

👉🏾 Next, we'll explore some common tools in data science.

Data Science Tools

As previously stated, data scientist use different combination of tools on a daily basis to capture, organize, manipulate, analyze, visualize,a and communicate their findings. In this section, we are going to explore the most popular popular tools used by data scienctist. In this lesson, we'll be focus on some tools as listed below, however, other tools will be explored as we progress with the course.

Python

Just the same way we use natural languages like swahili, english, french, arabic, and spanish to communicate, we also need to communicate with computers using some predefined languages known as programming languages, so that our instruction can be executed. As you've probably learnt in your programming 1 & 2 courses, Python is a powerful programming language that is applicable to many areas. One of such area is data science. If you need a refresher on Python, you can use the interactive platform below.

Quick intro to Python

In subsequent weeks, we'll be using Python and its libraries to gather, explore, clean, and manipulate our data. But before then, let us look at some popular tools and python libraries which is common among data scientists.

❓ How can i work with data using Python?

Previously, we've seen how it is possible to capture, clean, manipulate, and visualize data using Excel. However, you're limited to only the features provided by Excel, even though there is more you can do as a data scientist. This is why you need python to programatically do everything you have in Excel and many more. To do that, we'll be using Jupter Notebook.

Jupyter Notebook

The unique feature of Jupyter Notebook is that it allows you to write code in small, manageable chunks called cells, which can be executed independently. This interactive nature makes it easy to experiment with code, test different ideas, and see immediate results. You can write code in languages like Python or R, and with the click of a button, execute the cell to see the output.

Jupyter Notebook also supports the inclusion of visualizations, images, and formatted text, making it an excellent tool for data analysis, data visualization, and presenting your findings.

For this course, we'll be using a cloud version of jupyter notebook called Google Colab!. With this, you can avoid the need for installation and configuration for jupyter notebook. Let's look at what Google Colab is all about.

With Colab, you can do everything you've done using the python shell and more. To wrap up, let look at the benefit of Colab for a data scientist.

- Free Resources:Provision of free cloud computing resources.

- Collaboration: allows multiple users to work on the same notebook simultaneously

- Integration with Google Drive: Colab integrates with Google Drive, allowing users to easily access and store data files and notebooks.

- Pre-installed libraries: comes with many pre-installed libraries and frameworks commonly used in data science, such as TensorFlow, PyTorch, and Scikit-learn.

- Code execution: allows users to execute code in real-time and see the results immediately.

- Visualization: provides support for data visualization tools such as Matplotlib and Seaborn.

Overall, Google Colab is a powerful tool for data scientists, providing access to powerful computing resources, collaboration tools, and a range of features for data analysis and machine learning.

- From the list of Python libraries below, group each library as one of the following -

visualization,machine learning,data manipulation, andUtilities.

- Pandas

- Bokeh

- Numpy

- Maplotlib

- Pytorch

- Keras

- SciKit-Learn

- Polar

- Tensorflow

- OpenCV

- Share your answers using this padlet.

- You can like other cool answers on the padlet as well.

👉🏾 Next week, we'll deep dive into

data collectionandcleanings.

Practices

1. Football Player Data 🎯

The data is of ten hypothetical soccer players, their sleep duration, sleep quality, soreness, stress, as well as GPS metrics such as total distance, acceleration count, deceleration count, max acceleration, max deceleration, and max speed.

TODO

Using your knowledge of data cleaning, clean this dataset by...

- Saving a copy of the dataset for this exercise.

- Handling all missing values.

- Fixing the duplicates data for each player.

- Using other data cleaning techniques you've learnt.

Submission

You are required to submit documentation for practice exercises over the course of the term. Each one will count for 1/10 of your practice grade, or 2% of your overall grade.

- Practice exercises will be graded for completion not correctness.

- You have to document that you did the work, but we won't be checking if you got it right.

- You MUST attempt the quiz

Practices - Intro to DSon Gradescope after the exercise to get the grade for this exercise.

Your log will count for credit as long as it:

- is accessible to your instructor, and

- shows your own work.

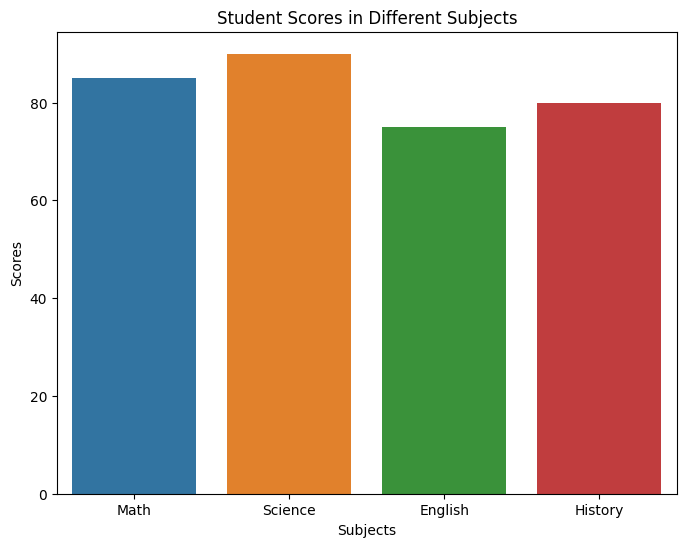

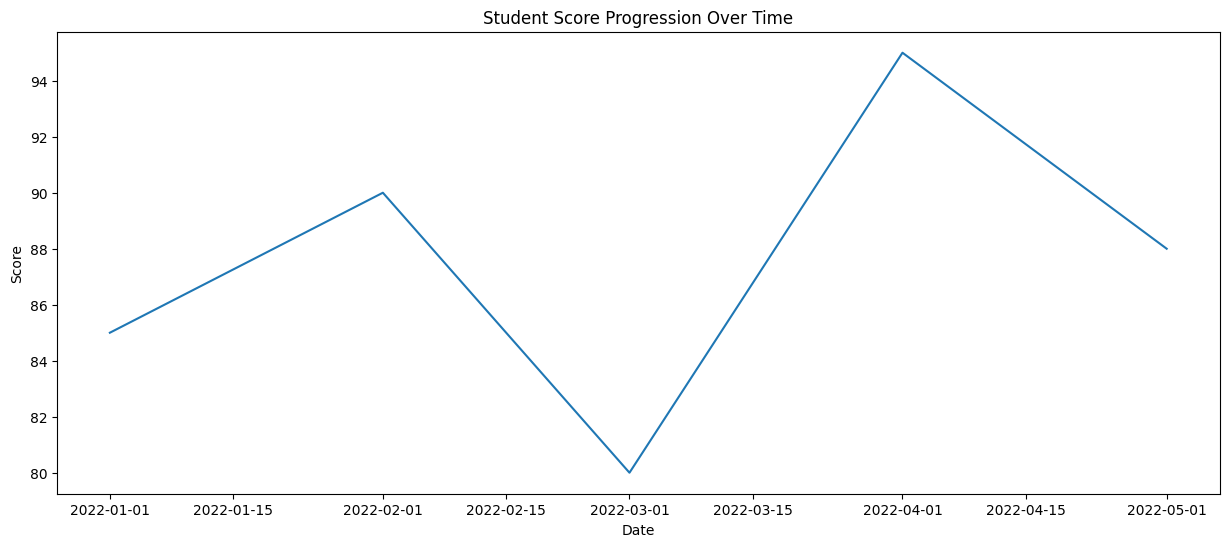

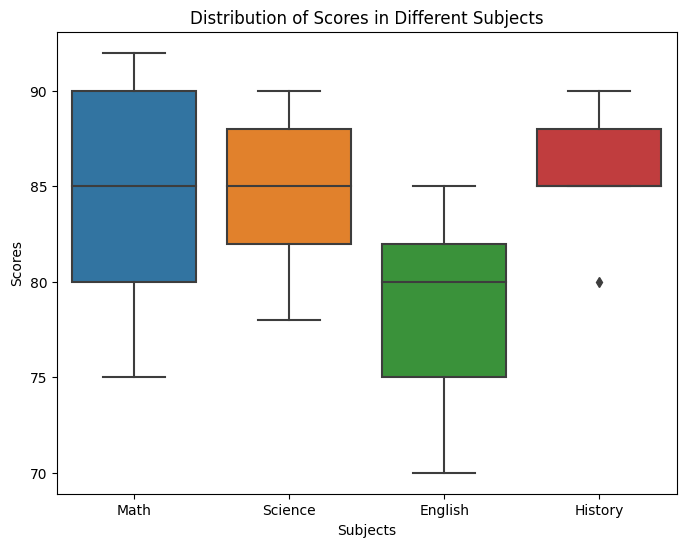

Assignment - Student Performance Analysis

Student Performance Analysis

This assignment is all about data cleaning and visualization using Microsoft Excel. The dataset for this assignment are student data from a hypothetical school, which consists of 7 Columns and contains information about gender, race, scores of students in different subjects, and more.

TODOs

- Clone the assignment repository using the link above

- Look through the data -

student_performance_data.csv. - Read the questions below to have an idea of what is required to do with the data.

- Put all your charts/graphs in a single file as this will be submitted as part of the assignment on gradescope.

- Once you have the answers to the questions below, goto assignment on Gradescope

- Look for Assignment - Intro to DS

- Attempt the questions

- Submit once you're done

Questions

- How many UNIQUE data points or samples are in the dataset?

- What are the percentages based on gender 2.1 What is the percentage of Male student 2.2 What is the percentage of Female student

- What percentage of student "completed" the test preparation course

- What percentage of student had a "standard" Lunch

- What percentage of parent has MORE THAN "high school" level of education

- Which group in Race/ethnicity has the lowest percentage.

- Distribution of scores in subject What distribution score range has the highest frequency for Math score What distribution range has the highest frequency for Reading score What distribution range has the highest frequency for Writing score

- Who score higher in Math? Male or female?

- Which race/ethnicity scored the HIGHEST in Math?

- Mention ONE insight you derive from the data

Data Collection and Cleaning

Welcome to week 2 of the Intro to data science course! In the first week, we looked at data science broadly, including its building blocks and workflow, and and also understand data types, spreadsheets, python, and Google Colab.

This week, we'll be more specific while looking at data collection and cleaning. First, we'll look at different data sources such as databases, APIs, web scraping, data streams. Next, we'll deep dive into data loading and exploration. Similarly, we'll touch on data cleaning and transformation. And finally, we'll look at data validation and privacy.

Whatever your prior expereince, this week you'll touch on basics of data collection and cleaning. You'll also continue practising how to learn and work together.

Learning Outcomes

After this week, you will be able to:

- Explain and differentiate various data sources.

- Describe different data loading and cleaning techniques.

- Outline the importance of data quality.

- Compose documentation of relevant information about the analysis process.

An overview of this week's lesson

Data Sources and Collection

With data collection, 'the sooner the better' is always the best answer — Marissa Mayer

As a data scientist, you'll work with different types of data from different sources. It is important to understand not just these data sources but also how to collect the data therein. The process of data collection involves identifying the relevant data sources, collecting and extracting the data, and ensuring its quality and integrity. To achieve this, we'll be looking at 4 different data sources; databases, APIs, web scraping, and data streams.

What are data sources?

Note: Data sources can be diverse, including structured data from databases, spreadsheets, and APIs, as well as unstructured data from social media, text documents, and sensor devices.

As a data scientist, you need a good understanding of different data sources and collection techniques to gather the necessary information for analysis. However, collecting data requires careful planning, attention to detail, and basic knowledge of appropriate tools and techniques to ensure the data is accurate, complete, and representative of the problem being addressed. Owing to this, let's explore different data sources while simultaneously looking at how to collect data from these sources.

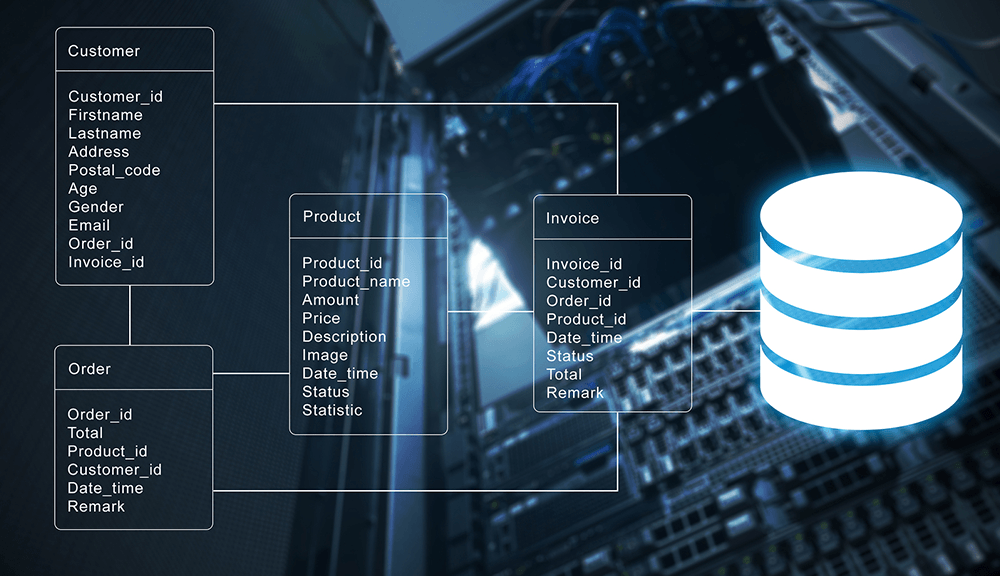

1. Databases

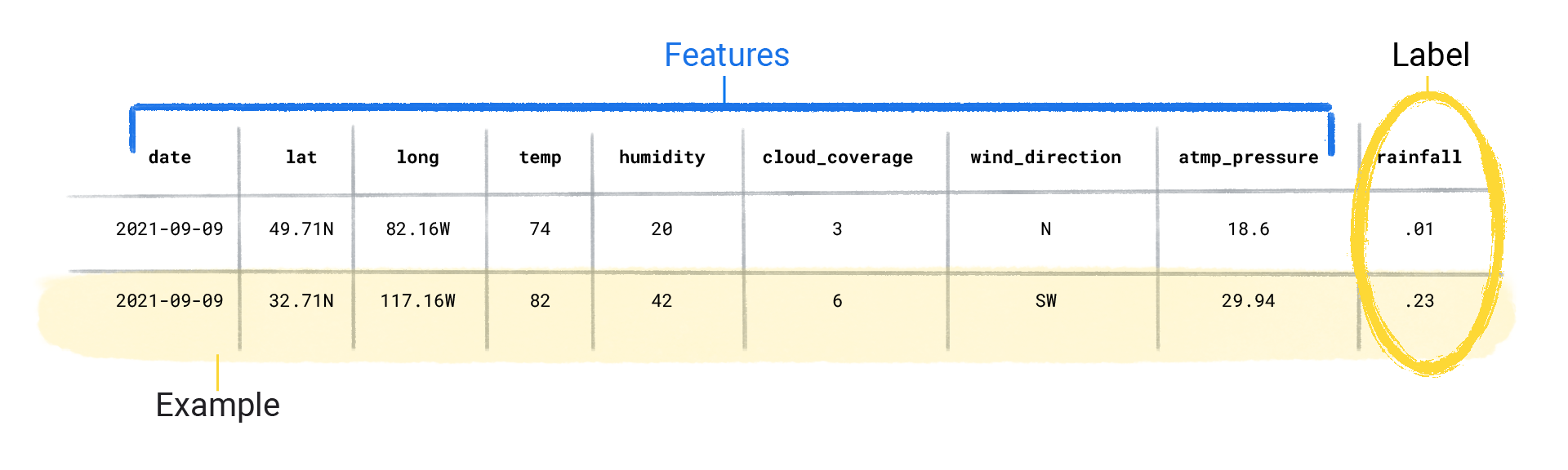

A database is an organized collection of structured information, or data, typically stored electronically in a computer system. Databases can store information about people, products, orders, transactions, or anything else using one or multiple tables. Each table is made up of rows and columns in a relational database, and records within each table is identified using a primary key. For example, the image below shows 4 tables; Customer, Order, Product, and Invoice. Each of this table has a primary key (Customer_id, Order_id, Product_id, Invoice_id) to uniquely identifies each record.

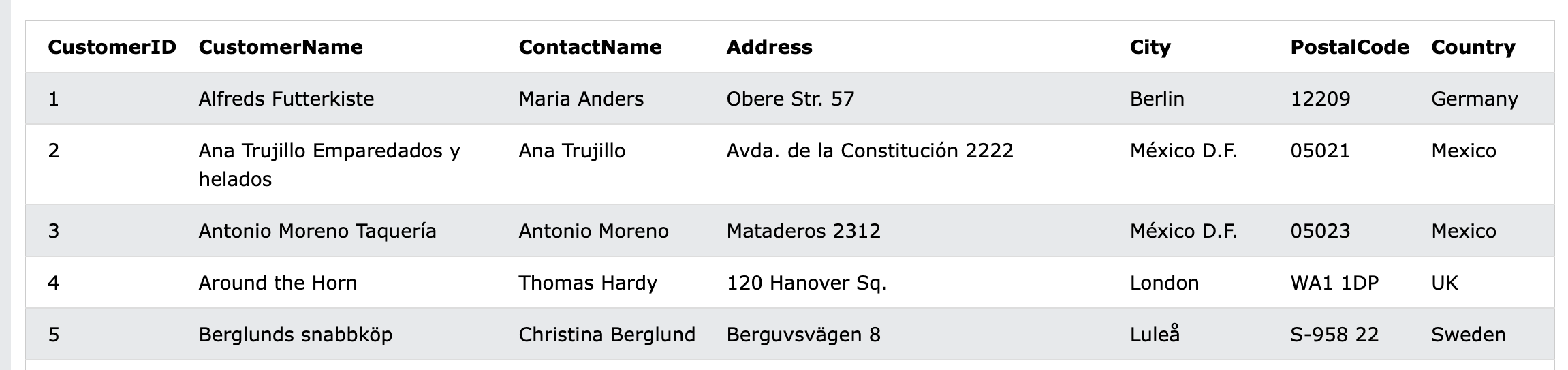

As shown above, multiple tables are linked together for easy access and retrieval. All this is usually controlled by a database management system (DBMS), where data can then be easily accessed, managed, modified, updated, and organized using Structured Query Language (SQL). An example SQL query to retrieve customers' record from a database is given below.

Note: the table name and the retrieved data in the query below are imaginary

Query Description SELECT * FROM CUSTOMER Retrieve all records or data from the customer table

After running the query above, an example data that could be retrieved is given in the table below. It is evident that data are modelled into rows and columns where each row represent a customer information.

After data retrieval from databases, if required, you can store the retrieved data in a different format (such as .csv) or a separate location for further analysis or integration with other data sources. If you're curious to have better understand of SQL, check out the link below.

Quick intro to SQL

2. Application Programming Interface (API)

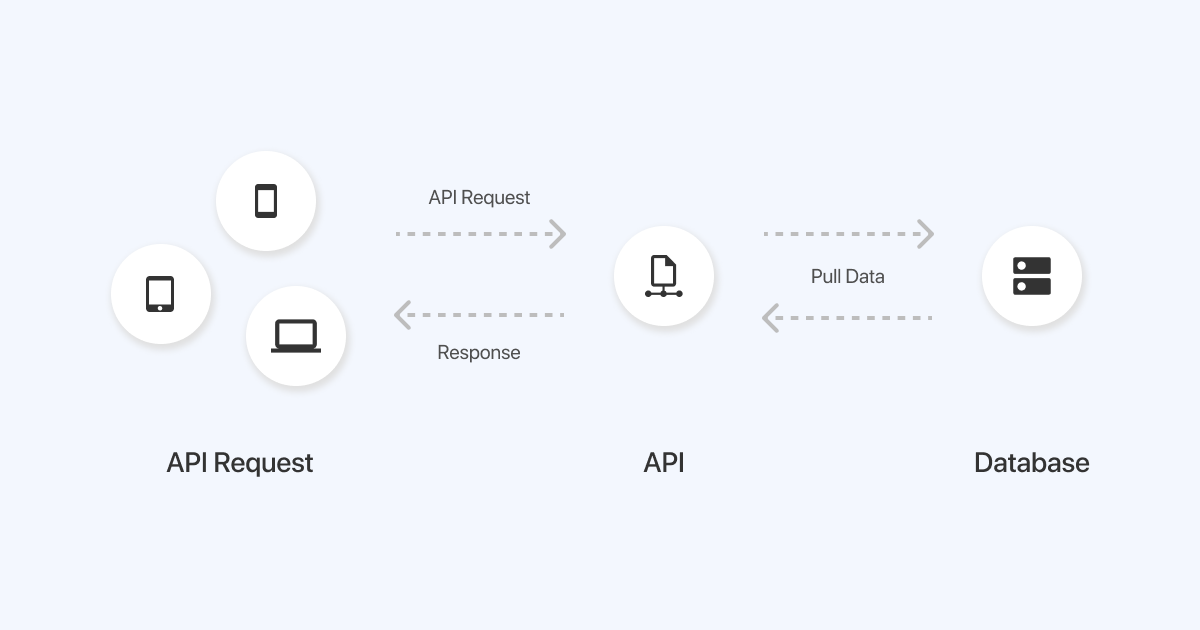

When we pull or check our phone for weather data, a request will be sent to your weather app which resides on a server that stores all the weather information. Behind the scene, an API is used to send a request to the weather app server, which sends back a response (i.e., weather information) to your phone through the API. As depicted below, an API serves as an intermediary that allows a data scientist to access data from public repositories or other sources.

For more detailed information about API, refer back to your previous Web Application Development course.

Now that we have an understanding of what API does, let us look at different format of data we can get while using an API. As a data scientist, the most common data format are csv, json, and xml. Below is a summary with example of how each of this data format looks like.

JSON

JSON is a key-value pair data format and has become one of the most popular format of sharing information in recent times. A file contain json data is saved using .json file extension. A sample json data about a pizza oder is given below

{

"crust"": "original",

"toppings"": ["cheese","pepperoni"", "garlic""],

"price"": "29.99",

"shipping"": "delivery",

"status"": "cooking"

}

CSV

Comma Separated Value (CSV) is a popular data format in the data science communities, with a plain text file that uses specific structuring to arrange tabular data. It uses a comma (,) to separate each specific data value. A csv data is saved in a file with .csv extension. A sample csv data is given below. In this example, each row in the CSV file represents an employee's details, and each column represents a specific attribute of the employee. The first row is the header row, which provides the names of each attribute.

EmployeeID,FirstName,LastName,Department,Position,Salary

1,John,Doe,Marketing,Manager,50000

2,Jane,Smith,Finance,Accountant,40000

3,Michael,Johnson,IT,Developer,60000

4,Sarah,Williams,HR,HR Manager,55000

5,David,Brown,Sales,Sales Representative,45000

Following the header row, each subsequent row contains the corresponding data for each employee. For example, the first employee has an EmployeeID of 1, a FirstName of John, a LastName of Doe, works in the Marketing department, holds the position of Manager, and has a salary of 50000.

Extensible Markup Language (XML)

XML is the data exchange format for API prior to JSON. It’s a markup language that’s both human and machine readable, and represent structured information such as documents, data, configuration, books, transactions, invoices, and much more. Data in xml format can be saved in a file with .xml extension.

An example is given below showing data about a message from John to Bruce. This XML structure represents a basic representation of an email, including important details like sender, recipient, subject, body, and timestamp.

<email>

<sender>John</sender>

<recipient>Bruce</recipient>

<subject>Greetings</subject>

<body>

Dear Bruce,

I hope this email finds you well. I wanted to reach out and say hello.

Best regards,

John

</body>

<timestamp>2023-05-15 09:30:00</timestamp>

</email>

In this example, the <email> element represents the entire email structure. Inside the email, there are child elements such as <sender>, <recipient>, <subject>, <body>, and <timestamp>. The <sender> element contains the name of the sender, which is "John" in this case. The <recipient> element represents the recipient of the email, which is "Bruce".

The <subject> element contains the subject of the email, which is "Greetings". The main content of the email is enclosed within the <body> element, and it contains the message text. The <timestamp> element represents the date and time when the email was sent, specified in a specific format, such as "2023-05-15 09:30:00".

3. Web Scraping

📺 What is web scraping? listen to PyCoach! 👨🏾💻

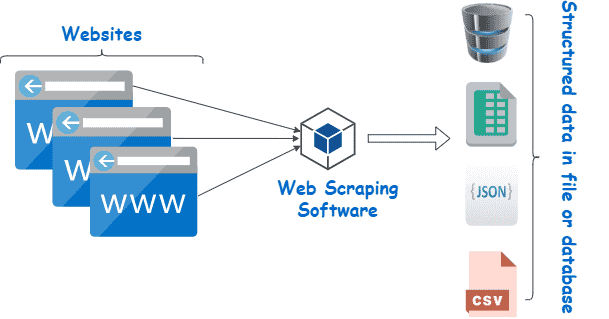

While it is possible to scrape all kinds of web data from search engines and social media to government information, it doesn’t mean this data is always available. Depending on the website, you may need to employ a few tools and tricks to get exactly what you need, and also covert it into a format suitable for your project. In Python, we can use libraries like BeautifulSoup and Scrappy to scrape web pages. The process involves sending a request to a web page's URL, retrieving the HTML content of the page, and then parsing the HTML to extract the desired data.

NOTE: It's important to note that web scraping should be done ethically and responsibly, respecting the website's terms of service and not overloading their servers with excessive requests.

For example, if we want to scrape the prices of products, we can locate the HTML elements that contain the prices and use Python to extract and save them.

Learning web scraping in Python can be empowering as it allows you to automate data collection from the vast amount of information available on the web, making it easier to analyze and make informed decisions based on that data.

4. Data Streams

📺 What is data streaming? watch this video from Confluent! 👨🏾💻

In summary, remember the following about data streams...

- they are continuous sequences of data that are generated in real-time.

- they require specialized techniques and platforms for processing and analysis to derive insights and make informed decisions.

- Data scientists and analysts need specialized techniques to handle data streams effectively.

- it has numerous applications across industries, including real-time analytics, fraud detection, recommendation systems, and monitoring of network or infrastructure performance.

- organizations can gain valuable insights, respond quickly to emerging trends or events, and make data-driven decisions

👩🏾🎨 Practice: Describe JSON and XML Data... 🎯

In this exercise, you'll access data from sample APIs using your browser. With this, you'll have hands-on experience on JSON and XML data. Try the following in your browser.

-

Open your browser

-

copy and paste each of the url below in your browser

-

Describe what each data from the APIs is all about in the padlett below

➡️ In the next section, you'll be introduced to

data loadinganddata exploration🏙️.

Data Loading and Exploration

So far we've explored some tools used in data science, and now is the time to start using them. Remember we looked at data and different sources or orgin of data. However, as a data scientist, you need to know how to import or get this data from different sources, and work with them. In this lesson, you'll learn how to import and use data from a file (.csv) and API using a popular python libray called Pandas.

Data loading

In data science, one of the fundamental tasks is loading data into our analysis environment. We'll be work with diverse data sources, ranging from structured datasets stored in CSV files to real-time data obtained through APIs. In this section, we will explore how to load data from CSV files and APIs using Pandas. Before we get started with Pandas, let first look at how we can create a notebook (i.e., the file containing our codes and analysis) on google colab, VSCode, and Jupyter Notebook.

📺 How to create a notebook 👨🏾💻

In summary...

- Remember you need an active google account to do this.

- In your browser, goto https://colab.research.google.com

- click on

fileand create a new notebook.

Now that you understanding how to create a notebook, we'll begin by looking at how we can load data from a csv file using Pandas. For this, we'll be using the COVID-19 dataset you explored in section 1.4.

Pandas

Loading data from CSV

Loading data from CSV files using Pandas is a fundamental skill for every data scientist. It provides a convenient way to import data into a structured format for further analysis and exploration. To load a CSV file using Pandas, the first step is to import the Pandas library in your notebook.

import pandas as pd

Using the alias pd allows you to refer to Pandas as pd. Next, we can use the read_csv() function provided by Pandas to read the CSV file into a Pandas DataFrame. The read_csv() function takes the file path (or file location) as input and returns a DataFrame object. To read a CSV file from google drive, you can either specify a file path to your google drive after mounting it or upload it to colab.

df = pd.read_csv('path/to/your/corona_virus.csv')

By default, read_csv() assumes that the CSV file has a header row containing column names. If the CSV file does not have a header row, we can set the header parameter to None.

df = pd.read_csv('path/to/your/corona_virus.csv', header=None)

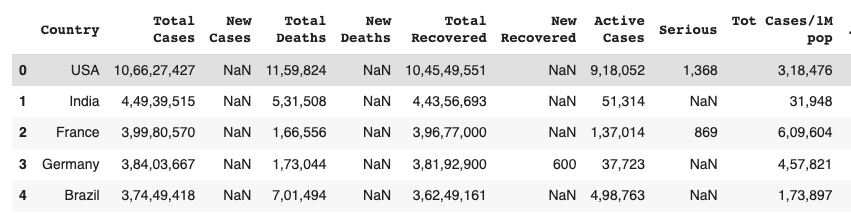

Once the data is loaded into a DataFrame, Pandas offers a wide range of methods for data exploration and manipulation. You can examine the data using functions like head(), tail(), and describe() to get a glimpse of the dataset's structure and statistical summaries. Now that you've successfully loaded your dataset into Pandas DataFrame, let's see what the data looks like by viewing some rows using Pandas .head() function.

df.head()

✨ Awesome! you've successfully loaded your first CSV file using Python and Pandas.

Loading data from API

In addition to loading data from static files like CSV, data scientists often work with real-time data obtained through APIs. To load data from an API, we typically make HTTP requests and retrieve the data in a structured format, such as JSON (JavaScript Object Notation). Pandas provides convenient functions to handle JSON data and convert it into a DataFrame.

To fetch data from an API, we can use the requests library in Python to send HTTP requests, and then use Pandas to parse and structure the retrieved data. For this, we'll be using the previous API we used in First, let's import both Pandas and the request libary.

import pandas as pd

import requests

Next, we send an HTTP GET request to the specified API and receive a response. The response is typically in JSON format, which can be directly converted into a DataFrame using Pandas.

# Make a request to the API

response = requests.get('https://api.unibit.ai/v2/stock/historical/?tickers=AAPL&accessKey=demo')

# Convert JSON response to DataFrame

data = pd.DataFrame(response.json())

👩🏾🎨 Practice: Explore Pandas function 🎯

In this lesson, we've seen how to read data from CSV and API, and how to get a view of our data using head() function. Now you need to explore other Pandas functions.

-

Using the DataFrame you loaded from the CSV, what type of information do you get when you use

describe()andtail()function? -

Share your answer using the padlet below.

➡️ In the next section, you'll be introduced to

data cleaning🏙️.

🔢 Data cleaning techniques

As a data scientist, we'll be working with lots of messy (or smelling 😖) data everyday. However, it is critical to ensure the accuracy, reliability, and integrity of the data by carefully cleaning the data (without water 😁). In this lesson, we'll be looking at different data cleaning techniques needed to get the data ready for further analysis. First, we'll start by exploring techniques needed to handle missing data, and then we'll dive into what to do with duplicate data.

Data cleaning aims to improve the integrity, completeness, and consistency of the data. When cleaning a data, our goal will be to produce a clean and reliable dataset that is ready for further analysis. By investing time and effort into data cleaning, we can improve the accuracy and credibility of our analysis results, leading to more robust and reliable insights. To understand this more, we'll be looking at the following

- Handling missing data

- Removing duplicate data values

1. Handling missing data

Missing data is one of the most frequently occuring problems you can face as a data scientist. Watch the next video to have an idea of how important it is to understand this problem, and possible causes.

📺 How important are missing data? 👨🏾💻

As a data scientist, there are many ways of dealing with missing values in a data. For this lesson, we'll be looking at 4 different techniques of handling missing data - _dropping, filling with constant, filling with statistics, and interpolation.

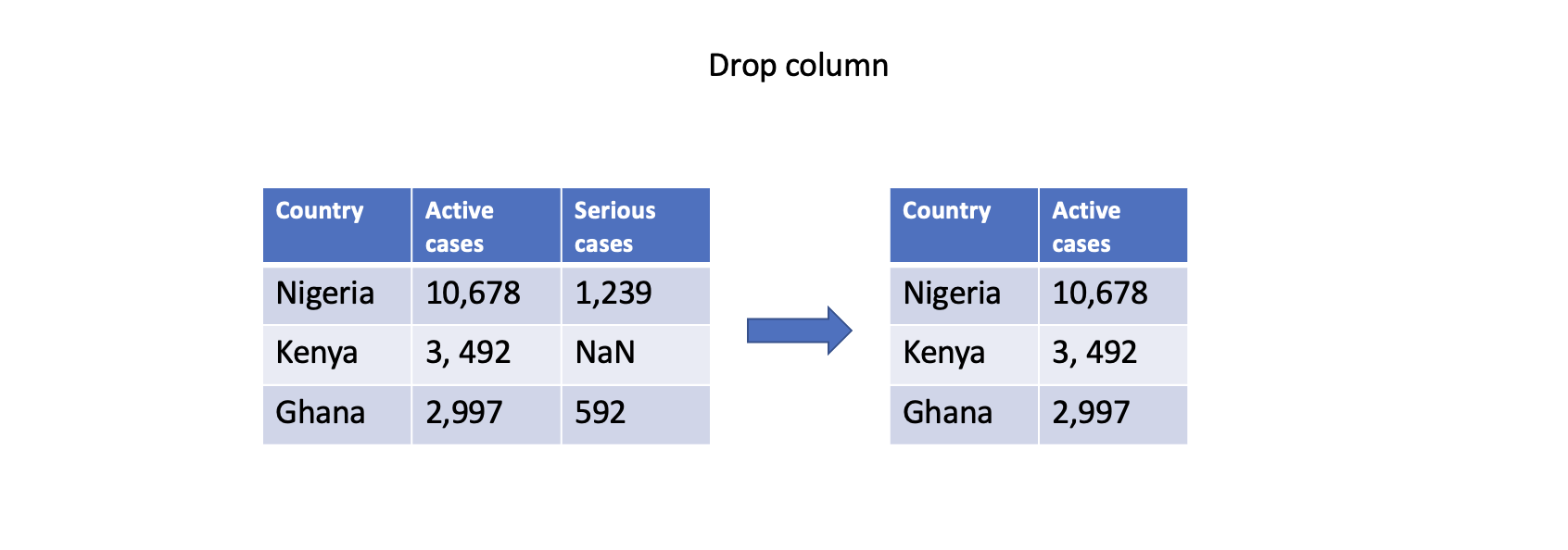

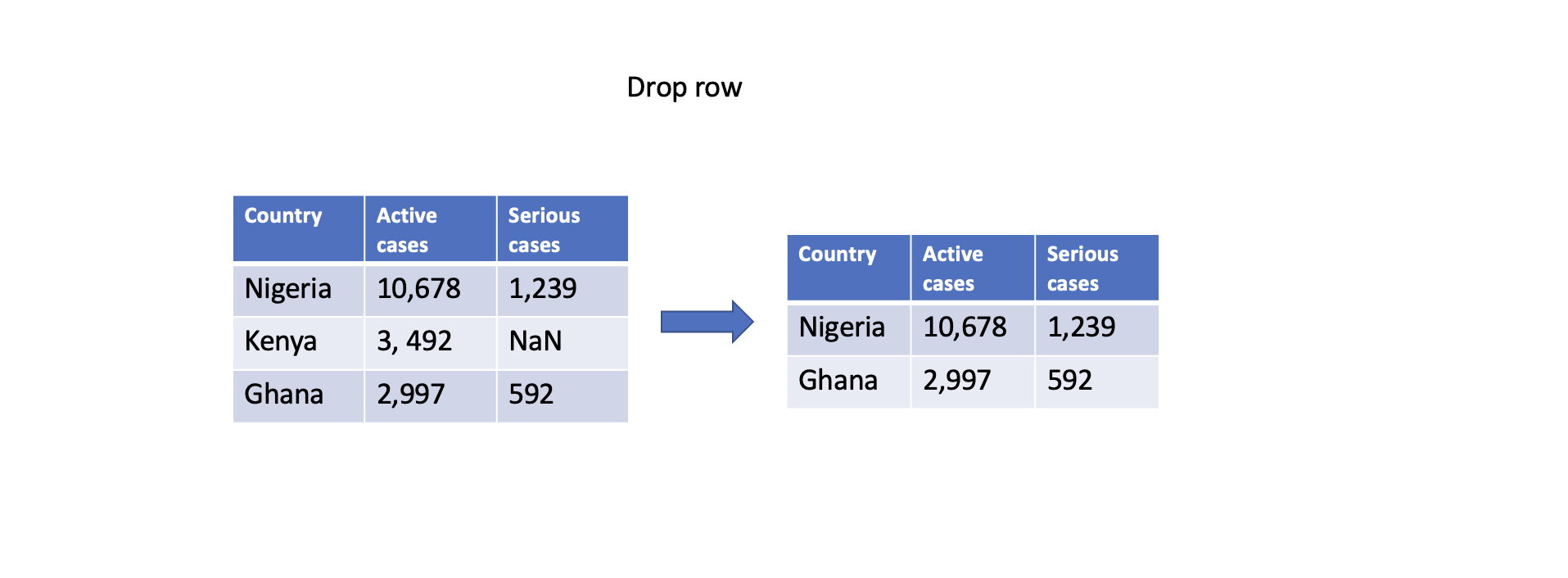

Dropping missing values

One straightforward approach is to remove rows or columns with missing values using the dropna() function. By specifying the appropriate axis parameter, you can drop either rows (axis=0) or columns (axis=1) that contain any missing values. However, this approach should be used with caution as it may result in a loss of valuable data.

# Drop column with any missing values

df.dropna(axis=1, inplace=True)

# Drop rows with any missing values

df.dropna(axis=0, inplace=True)

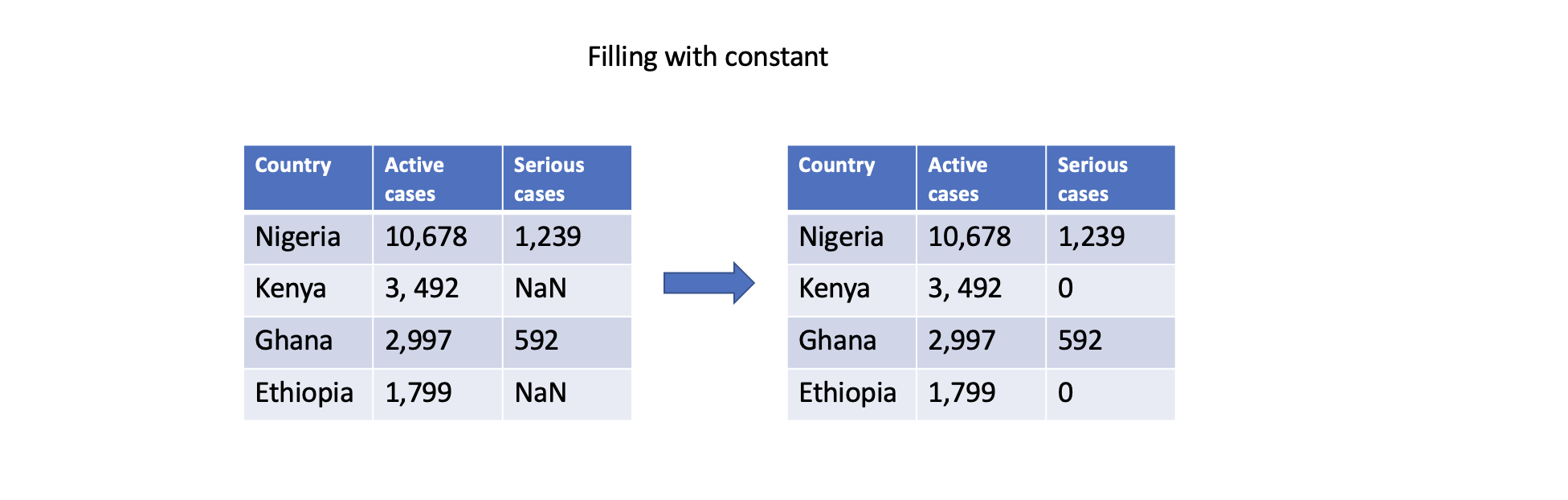

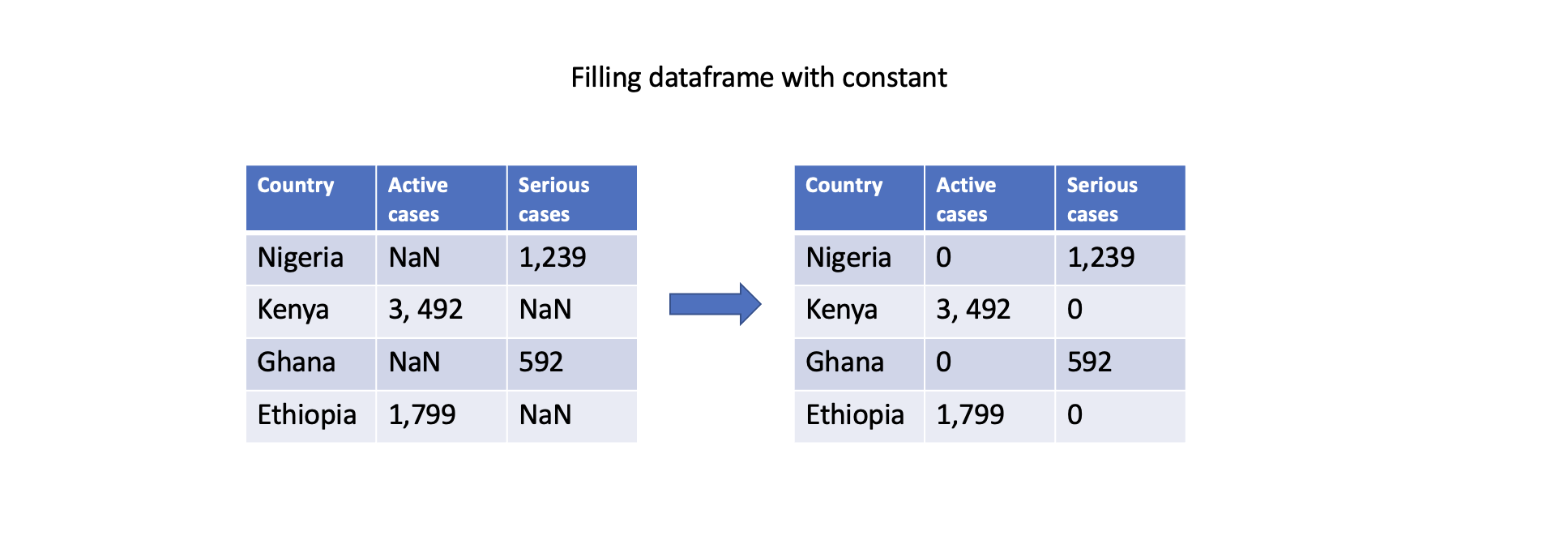

Filling with constant

You can also fill missing values with a constant value using the fillna() function. This can be done for specific columns or the entire DataFrame. For example, filling missing values with zero:

# Fill missing values in a specific column

df['Serious cases'].fillna(0, inplace=True)

# Fill missing values in the entire DataFrame

df.fillna(0, inplace=True)

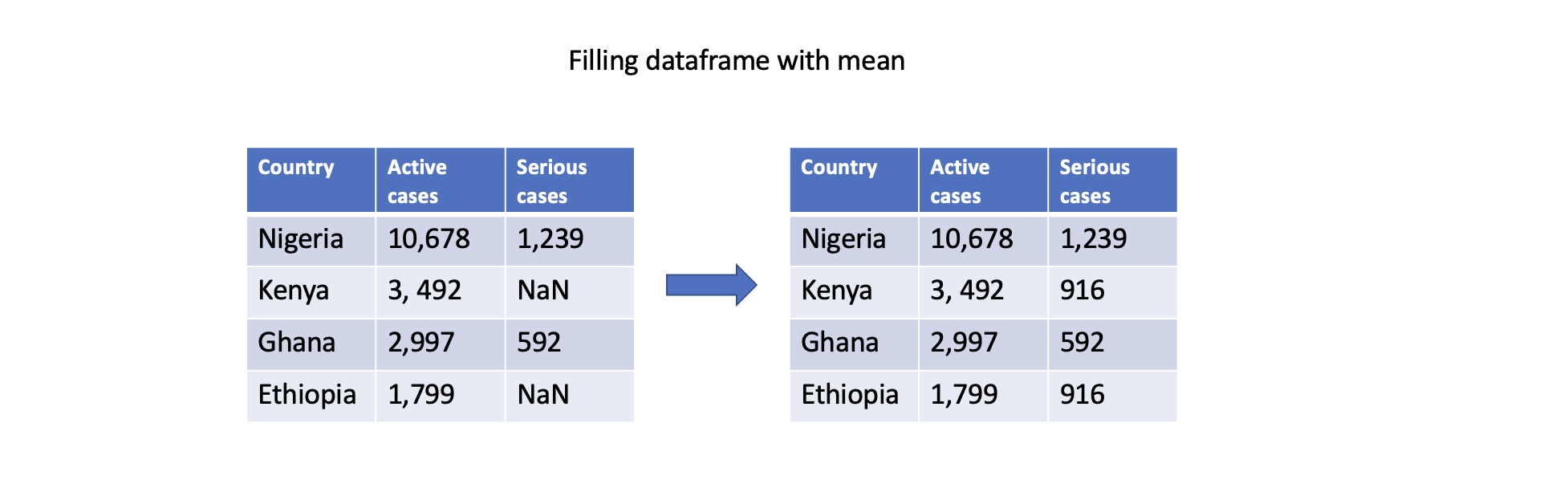

Filling missing values with statistics

Another approach is to fill missing values with summary statistics, such as mean, median, or mode. Pandas provides convenient functions like mean(), median(), and mode() to compute these statistics. For example, filling missing values with the mean of column Serious cases:

# Fill missing values in a specific column with the mean

df['Serious cases'].fillna(df['Serious cases'].mean(), inplace=True)

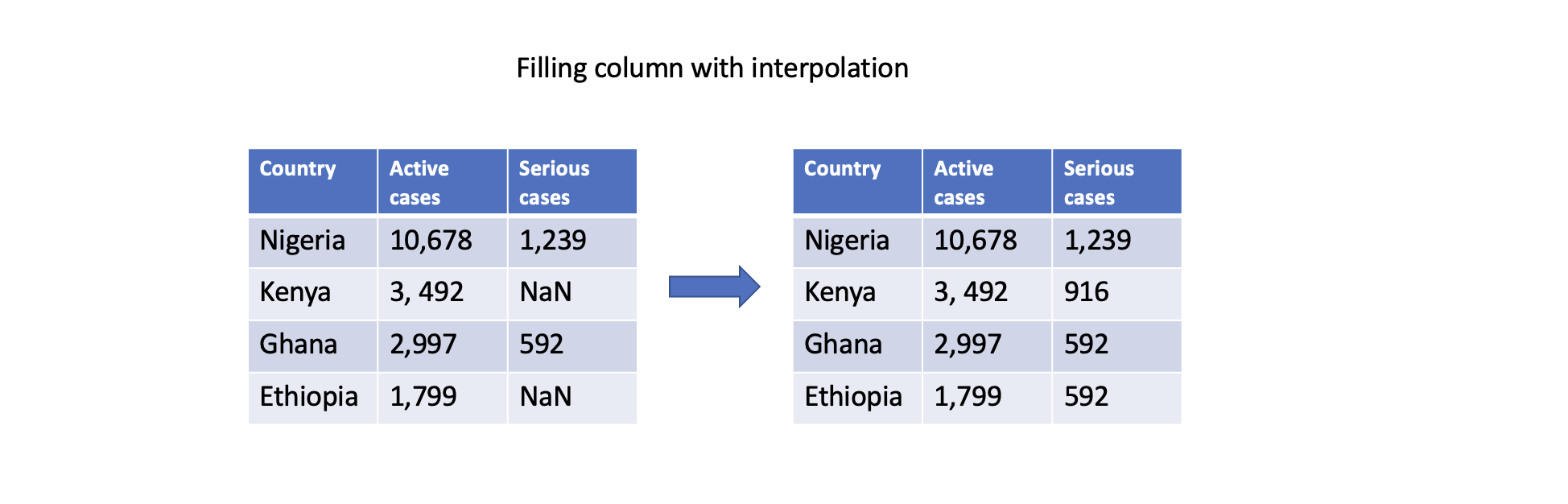

Filling with interpolation

Pandas supports different interpolation methods to estimate (i.e., predict) missing values based on existing data points. The interpolate() function fills missing values using linear interpolation, polynomial interpolation, or other interpolation techniques.

# Interpolate missing values in a specific column

df['Serious cases'].interpolate(inplace=True)

These are just a few examples of how Pandas can handle missing data. The choice of approach depends on the specific dataset, the nature of the missing values, and the analysis goals. As a recap, watch the video below to summarize what has been discussed.

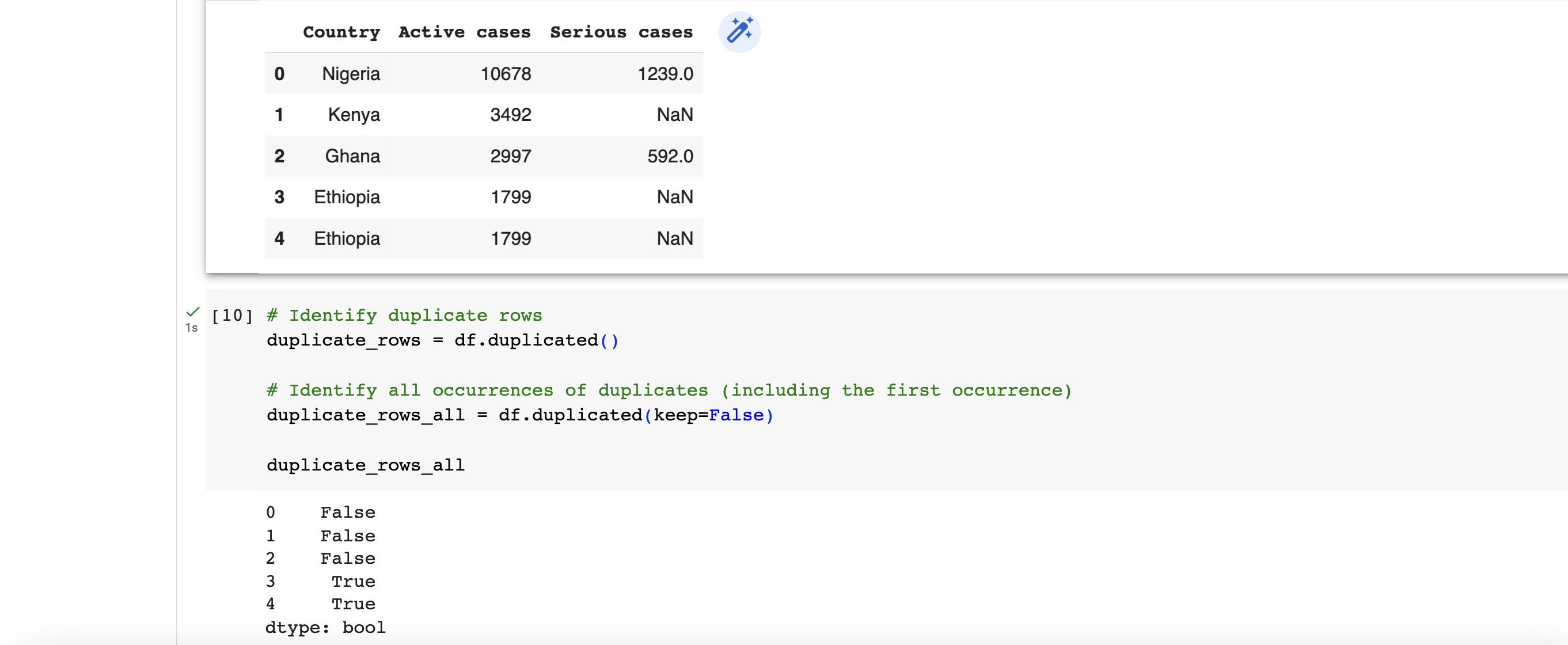

2. Removing duplicates

Duplicate data are rows or records within a dataset with similar or nearly identical values across all or most of their attributes. This can occur due to various reasons, such as data entry errors, system glitches, or merging data from different sources. As a data scientist, there are number of ways to handle duplicate data in a small or large dataset. First, let's have a look at how we can identify if our data set has duplicate record, and in which column they exist.

Identifying duplicate data

To identify existence duplicate data in a dataset, the duplicated() function in Pandas is suitable for this. It returns a boolean value of either True or False for each of the rows. By using the keep parameter, you can control which occurrence of the duplicated values should be considered as non-duplicate. For example, we can check for duplicate using

# Identify duplicate rows

duplicate_rows = df.duplicated()

# Identify all occurrences of duplicates (including the first occurrence)

duplicate_rows_all = df.duplicated(keep=False)

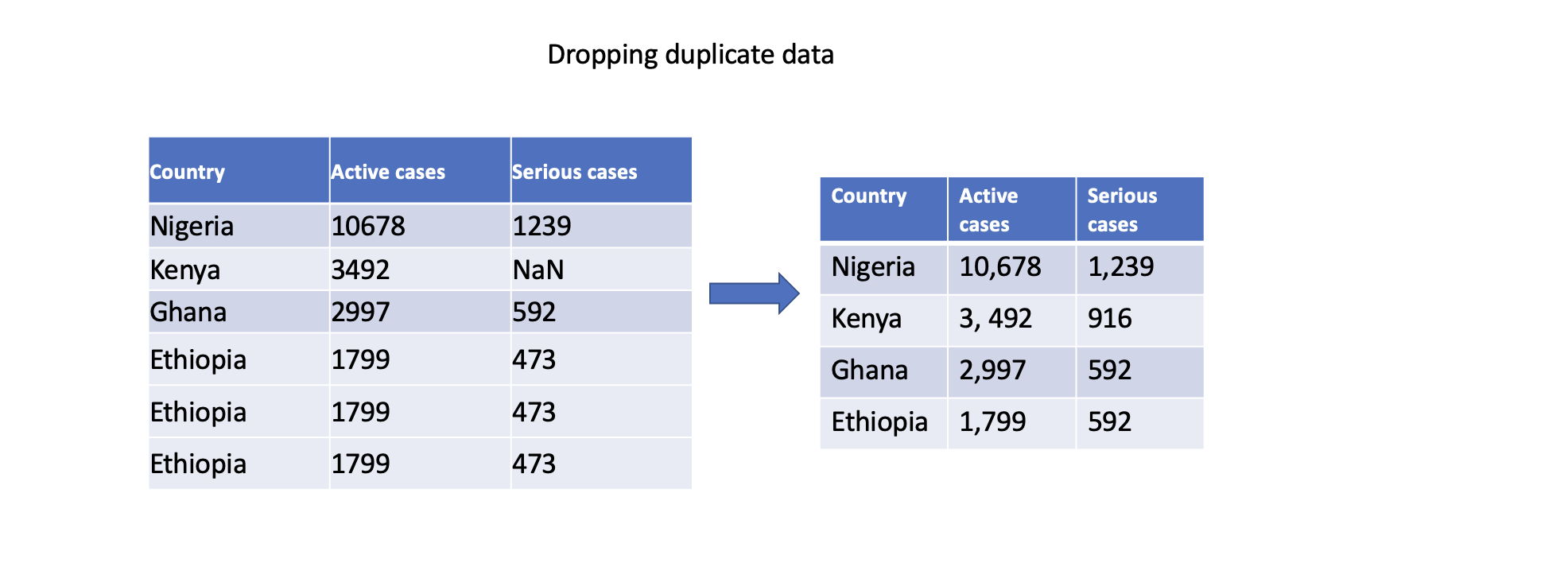

Dropping duplicate data

To remove duplicate data, a common option is to drop (or remove) the entire row. There are 3 main types of data duplication -

- Exact duplicates: rows with the same values in all columns.

- Partial duplicates: rows with the same values in some columns.

- Duplicate keys: rows with the same values in one or more columns, but not all columns.

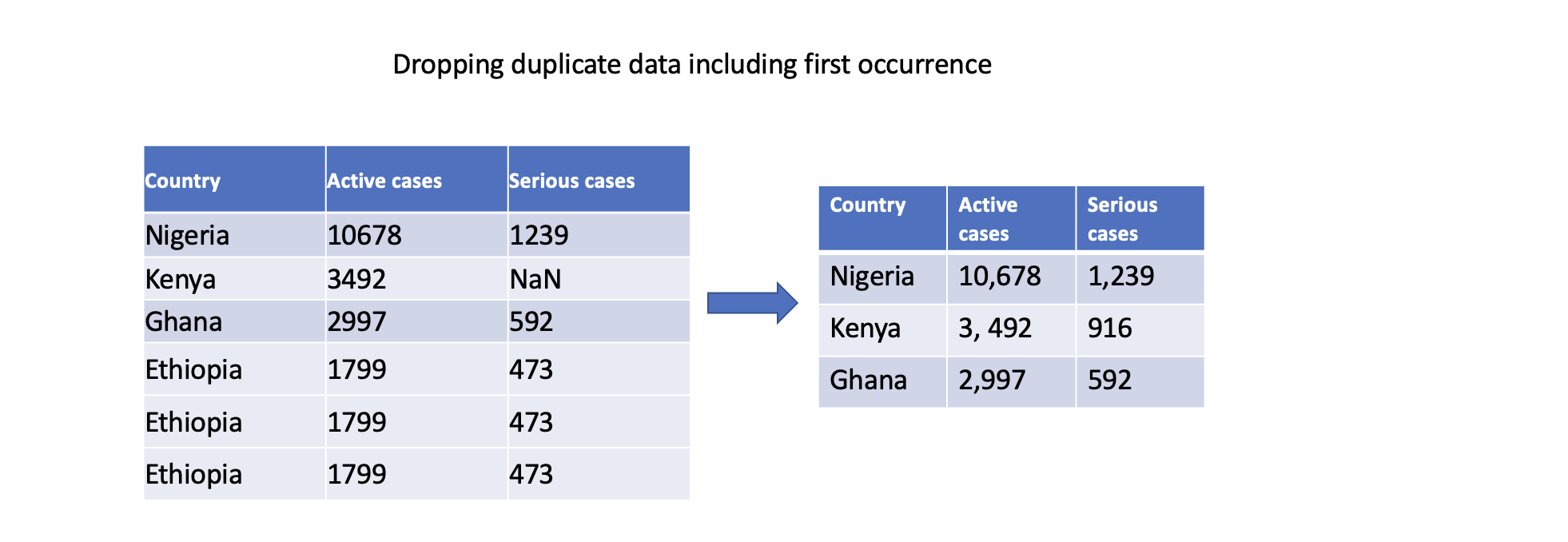

We'll only focus on exact duplicates in this section. To remove duplicate rows from a DataFrame, you can use the drop_duplicates() function. This function drops duplicate rows, keeping only the first occurrence by default. However, if you want to remove duplicates including the first occurence, then you can use the keep parameter.

# Drop duplicate rows, keeping the first occurrence

df.drop_duplicates(inplace=True)

# Drop all occurrences of duplicates (keeping none)

df.drop_duplicates(keep=False, inplace=True)

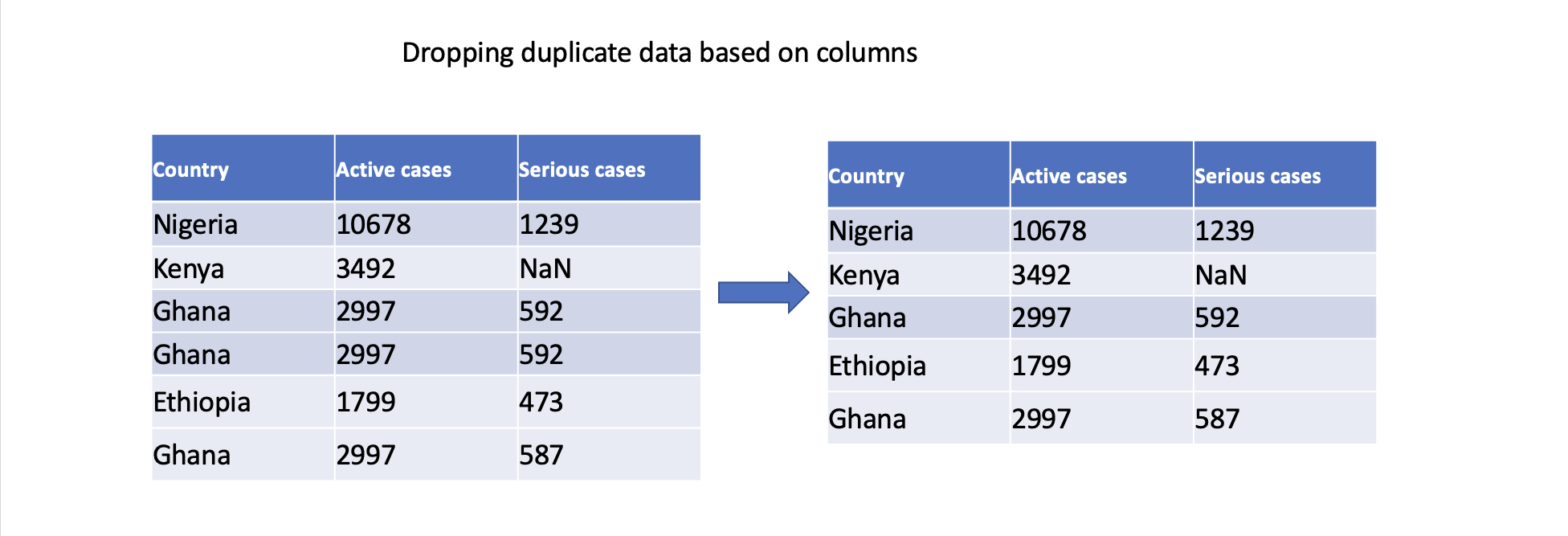

We can also specify specific columns to determine duplicates. Only rows with identical values in the specified columns will be considered duplicates.

# Drop duplicate rows based on specific columns

df.drop_duplicates(subset=['Serious cases'], inplace=True)

In conclusion, addressing duplicate data is crucial to ensuring accurate analysis, maintain data integrity, derive reliable insights, and support consistent decision-making. By effectively handling duplicate data, we can work with clean and reliable datasets, leading to more robust and trustworthy analysis outcomes.

👩🏾🎨 Check your understanding 🎯

Consider a dataset containing information about students' test scores and their demographic details. The dataset has missing values that need to be addressed before performing any analysis. Use the provided dataset to answer the following questions:

Dataset:

| Student_ID | Age | Gender | Test_Score | Study_Hours |

|---|---|---|---|---|

| 1 | 18 | Male | 85 | 6 |

| 2 | 20 | Female | NaN | 7 |

| 3 | 19 | Male | 78 | NaN |

| 4 | NaN | Female | 92 | 5 |

| 5 | 22 | Male | NaN | NaN |

Questions:

- What is missing data in a dataset?

- Why is it important to handle missing data before performing analysis?

- In the given dataset, how many missing values are in the "Test_Score" column?

- How many missing values are in the "Study_Hours" column?

- What are some common strategies to handle missing data? Briefly explain each.

- For the

Test_Scorecolumn, which strategy would you recommend to handle missing values? Why? - For the

Study_Hourscolumn, which strategy would you recommend to handle missing values? Why? - Can you suggest any Python libraries or functions that can help you handle missing data in a dataset?

- Calculate the mean value of the

Test_Scorecolumn and fill the missing values with it. - Fill the missing values in

Study_Hourswith the median value of the column.

🎯 Make sure you first attempt the questions before revealing the answers

👩🏾🎨 Reveal the Answer

-

What is missing data in a dataset?

- Missing data refers to the absence of values in certain cells of a dataset.

-

Why is it important to handle missing data before performing analysis?

- Handling missing data is important because it can lead to inaccurate analysis and modeling. Missing data can introduce biases and affect the reliability of results.

-

In the given dataset, how many missing values are in the "Test_Score" column?

- There are 2 missing values in the "Test_Score" column.

-

How many missing values are in the "Study_Hours" column?

- There are 3 missing values in the "Study_Hours" column.

-

What are some common strategies to handle missing data? Briefly explain each.

- Imputation/Filling: Replacing missing values with estimated values, such as the mean, median, or mode of the column.

- Deletion: Removing rows or columns with missing values.

- Interpolation: Using machine learning algorithms to predict missing values based on other variables.

-

For the "Test_Score" column, which strategy would you recommend to handle missing values? Why?

- Imputation with the mean value is a reasonable strategy because it provides a representative estimate of missing values without drastically affecting the distribution.

-

For the "Study_Hours" column, which strategy would you recommend to handle missing values? Why?

- Imputation with the median value might be a suitable strategy as well, but you can still use interpolation.

-

Can you suggest any Python libraries or functions that can help you handle missing data in a dataset?

- Python libraries like Pandas provide functions such as

.isna(),.fillna(), and.dropna()for handling missing data.

- Python libraries like Pandas provide functions such as

-

In the

Test_Score, calculate the mean value of the column and fill the missing values with it.test_score_mean = df['Test_Score'].mean() df['Test_Score'].fillna(test_score_mean, inplace=True) -

In the

Study_Hours, fill the missing values with the median value of the column.

study_hours_median = df['Study_Hours'].median()

df['Study_Hours'].fillna(study_hours_median, inplace=True)

➡️ In the next section, you'll be introduced to

Data inconsistenciesandOutliers.

Data Outliers

Handling data outliers is crucial because outliers can significantly impact the accuracy and reliability of data analysis. Imagine a dataset representing the weight of individuals in a class, where all values range from 35kg to 60kg, except for one extreme value of 109kg. This extreme outlier, possibly due to an error or anomaly, can skew the average weight calculation, making it highly misleading.

By identifying and handling outliers, we aim to ensure that our analysis is based on reliable and representative data, enabling us to make more accurate decisions and draw meaningful insights from the data.

Outlier

Outliers can occur due to various reasons, such as measurement errors, data entry mistakes, equipment glitches, or unusual circumstances. They can have a significant impact on data analysis because they can skew (or change) statistical measures and affect the overall trends and patterns observed in the data. Let's look at some examples...

Example 1

Imagine you have a dataset representing the heights of a group of people. Most of the heights fall within a certain range, but there may be a few extreme values that are much higher or lower than the rest. These extreme values are outliers.

Example 2

Consider a small dataset sample...

[15, 101, 18, 7, 13, 16, 11, 21, 5, 15, 10, 9]

Which of the data point is the outlier?

Reveal data outlier

By looking at it, one can quickly say 101 is an outlier because it is much larger than the other values.

Now let's look at the impact of this outlier on the data using the table below.

| Without outlier | With outlier |

|-----------------------|-------------------------|

| Mean: 20.08 | Mean: 12.72 |

| Median: 14.0 | Mean: 13.0 |

| Mode: 15 | Mode: 15 |

| Variance: 614.74 | Variance: 21.28 |

| Std dev: 24.79 | Std dev: 4.61 |

We can obviously see how the outlier has affected the dataset. Hence, identifying and handling outliers is important because they can have a significant impact on our data analysis, and may lead to misleading conclusions. Imagine having numerous outlier in patient health data, thereby leading to wrong diagnosis or prescription 🤦🏾♂️. Consequently, we need to find a way to handle outliers in our dataset.

Finding outliers

There are several techniques to find outliers in a dataset. One simple technique is using the range rule. Let's say we have a dataset representing the number of hours students study each day, ranging from 1 to 10 hours. If we consider any value below 1 hour or above 10 hours as an outlier, we can easily identify them by looking at the data.

Another technique is using z-scores. We can calculate the z-score for each data point, which measures how far each value is from the mean in terms of standard deviations. If a z-score is significantly larger or smaller than 0 (e.g., above 2 or below -2), we can consider it as an outlier.

Additionally, we can use box plots to visualize the distribution of the data. Any data points that fall outside the whiskers of the box plot can be considered outliers. More on visualization will be discussed in subsequent weeks.

Lastly, the percentile approach identifies outliers by comparing data points to percentiles. For instance, if a data point is above the 95th percentile or below the 5th percentile, it might be considered an outlier. This is the approach we'll adopt for this lesson.

Handling data outliers

There are several techniques to handle outlier but we'll only be looking at 3 in this course...

- Trimming

- Replacing

- Windsorization

1. Trimming

Handling outliers using the trimming technique involves capping or trimming extreme values to a specified range. This approach allows us to keep the bulk of the data while removing or adjusting the outliers. Let's explain this concept using a simple example and provide a code sample using pandas.

Trimming example

Imagine we have a dataset of student grades, and we suspect there are outliers that might be affecting our analysis. We can use the trimming technique to remove the extreme values beyond a certain threshold. Let's say we decide to trim the top and bottom 5% of the data.

Here's an example code snippet using pandas to demonstrate how to handle outliers using the trimming technique:

import pandas as pd

# Load the dataset

data = pd.read_csv('your_dataset.csv')

# Define the threshold as 3 standard deviations from the mean

threshold = 3 * data['column_name'].std()

# Identify outliers

outliers = data['column_name'] > threshold

# Trim the dataset by removing outliers

trimmed_data = data[~outliers]

# Print the trimmed dataset

print(trimmed_data)

In code above, the threshold is defined as 3 times the standard deviation of the column values. Rows that have values above this threshold are considered outliers. The ~ operator is used to negate the boolean condition, selecting all the rows that do not contain outliers. Finally, the trimmed dataset is printed.

By applying the trimming technique, you remove extreme outliers from the dataset, allowing for a more representative analysis of the majority of the data.

2. Replacing

Handling outliers using the replacing technique involves replacing extreme outlier values with more representative values in the dataset. This approach aims to mitigate the impact of outliers on data analysis without completely removing them. Using pandas, you can handle outliers using the replacing technique by following these steps:

-

Identify Outliers: Use pandas to identify the outliers in the dataset. You can determine outliers based on statistical measures like z-scores or percentiles, or based on domain-specific knowledge.

-

Replace Outliers: Once the outliers are identified, you can replace them with more representative values. One common approach is to replace outliers with the median or mean value of the feature.

-

Update the Dataset: Modify the dataset by replacing the outliers with the chosen representative values. This can be done using pandas functions like

fillna()orreplace().

Here's an example code snippet using pandas to handle outliers using the replacing technique:

import pandas as pd

# Load the dataset

data = pd.read_csv('your_dataset.csv')

# Identify outliers

outliers = data['column_name'] > threshold

# Replace outliers with the median value

median_value = data['column_name'].median()

data.loc[outliers, 'column_name'] = median_value

# Print the updated dataset

print(data)

In the code snippet above, the outliers are identified based on a condition, such as values greater than a certain threshold. The outliers are then replaced with the median value of the column using the loc accessor. Finally, the updated dataset is printed.

By applying the replacing technique, you replace extreme outliers with more representative values, allowing for a more accurate analysis of the data while still retaining the information from the outliers.

3. Winsorization

Handling outliers using the winsorization technique involves capping extreme values by replacing them with values that are closer to the rest of the data. This approach helps to minimize the impact of outliers on data analysis without completely eliminating them.

In pandas, you can handle outliers using the winsorization technique by following these steps:

-

Define the Threshold: Determine the threshold beyond which the values will be considered outliers. This threshold can be based on domain knowledge or statistical measures like z-scores or percentiles. We'll use percentile in this example.

-

Winsorize the Data: Use pandas'

clip()function to perform winsorization. This function allows you to set upper and lower limits for the values. Any values above the upper limit will be replaced with the maximum value within that limit, and any values below the lower limit will be replaced with the minimum value within that limit.

Here's an example code snippet using pandas to handle outliers using the winsorization technique:

import pandas as pd

# Load the dataset

data = pd.read_csv('your_dataset.csv')

# Define the upper and lower thresholds for winsorization

upper_threshold = data['column_name'].quantile(0.95)

lower_threshold = data['column_name'].quantile(0.05)

# Winsorize the data

winsorized_data = data['column_name'].clip(lower=lower_threshold, upper=upper_threshold)

# Update the dataset with winsorized values

data['column_name'] = winsorized_data

# Print the updated dataset

print(data)

The upper and lower thresholds are defined using quantiles, such as 0.95 for the upper threshold and 0.05 for the lower threshold. The clip() function is then used to winsorize the data by...

- replacing values above the upper threshold with the maximum value within that limit

- replacing values below the lower threshold with the minimum value within that limit.

- Finally, the dataset is updated with the winsorized values and printed.

By applying the winsorization technique, extreme outlier values are capped, bringing them closer to the rest of the data distribution. This helps in reducing the impact of outliers while retaining valuable information from the dataset.

👩🏾🎨 Check your understanding: Handling Outliers 🎯

Consider the following dataset representing the test scores of students:

| Student ID | Test Score |

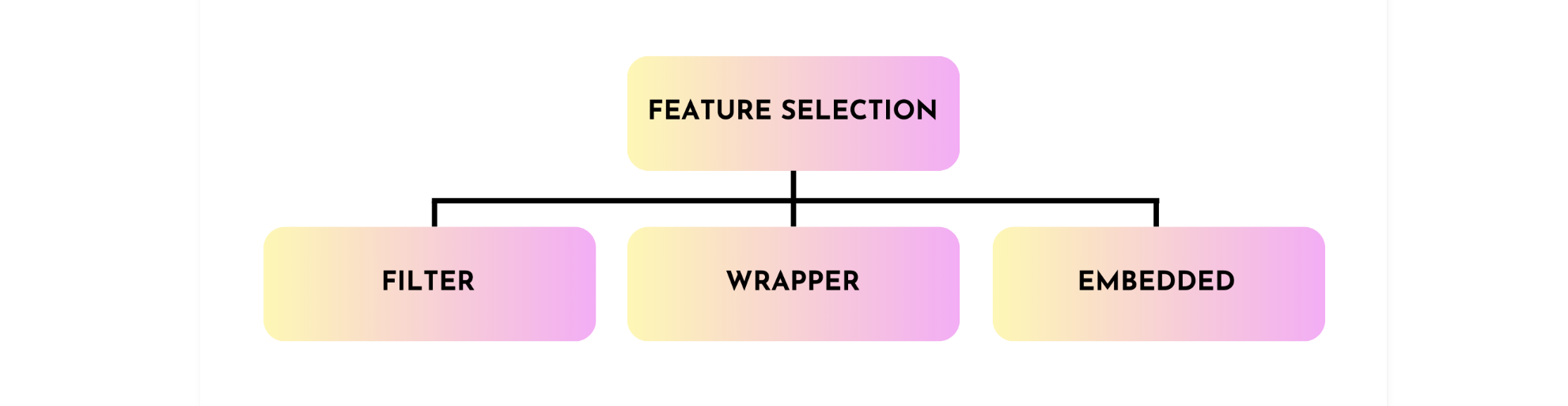

|---|---|